Copyright 2024 Mike Bland, licensed under the Creative Commons Attribution 4.0 International License (CC BY 4.0).

Abstract

This is a fairly complete survey of core automated testing concepts, using the Test Pyramid as the unifying metaphor. It makes these concepts accessible to programmers who have had little to no practical experience with writing (good) tests. If you’re already familiar with Test-Driven Development, or writing automated tests in general, this may reinforce your existing principles and possibly deepen them further. The example system demonstrates the Test Pyramid strategy and incorporates Roy Osherove’s String Calculator kata as a hands-on Red-Green-Refactor cycle exercise.

Slides and example repository

- The Test Pyramid in Action Keynote presentation - requires iCloud, for now

- The Test Pyramid in Action PDF - stored in Google Drive; doesn’t contain presenter’s notes

- The Test Pyramid in Action PowerPoint presentation - stored in Google Drive; looks mostly like the original Keynote presentation, modulo minor conversion losses

- Tomcat Servlet Testing Example (TSTE) - functionally complete; in the process of making minor improvements and adding more documentation

Table of contents

- Introduction

- Agenda

- The Test Pyramid and Key Testing Concepts

- Overview of Example Architecture and Technology

- Test-Driven Development with the String Calculator Kata

- Really Tying the Room Together

- Footnotes

Introduction

I’m Mike Bland, and I’m going to talk about writing different “sizes” of automated tests to validate different properties of a software system. This strategy is commonly described using the Test Pyramid model, and we’ll see what this looks like using a complete web application as an example.

About me

mike-bland.com, github.com/mbland

Here’s a little bit of my background to illustrate how I came to care about this so much.

- Taught myself unit testing at Northrop Grumman

  I “accidentally” discovered unit testing while working on shipboard navigation software at Northrop Grumman Mission Systems.

- Led the Testing Grouplet at Google

  I became one of the leaders of the Testing Grouplet, a volunteer group that made automated testing an indispensable part of Google’s software development culture.

- Led the Quality Culture Initiative at Apple

  For four years, I was the original leader of the Quality Culture Initiative, a similar volunteer group at Apple that continues to go strong today.

- Wrote "Making Software Quality Visible"

  Recently I produced an extensive presentation called Making Software Quality Visible, a distillation of my career experience in coding, testing, and driving adoption.

Agenda

Today’s talk is a further distillation to introduce you to some of the core concepts, practices, and infrastructure supporting good automated testing practices.

- The Test Pyramid and Key Testing Concepts

  I’ll give a high-level overview of the Test Pyramid model and some of the key concepts supporting it that we’ll see in the example.

- Overview of Example Architecture and Technology

  We’ll walk through the structure of the example system briefly and I’ll list the tools involved and call out a couple of key features.

- Test-Driven Development with the String Calculator Kata

  We’ll cover the very basics of Test-Driven Development with a brief demo and a brief hands-on exercise to give you a feel for the process.

- Really Tying the Room Together

  Then we’ll end by discussing some of the goals and benefits of TDD and the Test Pyramid, and some extra guidance on effective automated testing.

If you have any questions or comments, feel free to raise them at any time. If I don’t have time to respond immediately, we can save the topic for discussion at the end or afterwards.

The Test Pyramid and Key Testing Concepts

Let’s start reviewing some of the fundamental concepts you’re going to see in action shortly. These are essential to understanding the example project and the testing demo and exercise.

What is automated testing?

Would anyone like to define what automated testing is?

[ WAIT FOR RESPONSES ]

This is how I like to define it. It’s:

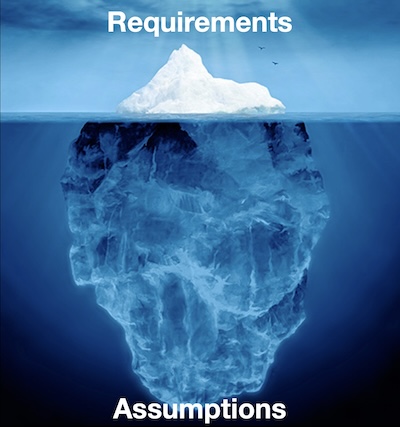

The practice of writing programs to verify that our code and systems conform to expectations—i.e., that they fulfill requirements and make no incorrect assumptions.

Note that I didn’t say “conform to requirements.”

Base image: Iceberg (from a site that seems no longer to exist)

Most of us usually validate that our code meets requirements, but often fall short of challenging the assumptions also embodied within our code.

Automated tests are reusable

The upfront cost to writing them pays off quickly and over time

The killer feature of well-written automated tests is that they’re automated, meaning they can run over and over all the time. You don’t have to make time to test things manually, or wait for someone else to do so. If you need to run the tests, run the tests, or set them up to run automatically.

Of course, you do need to make the time to learn to write good tests, and to actually write them. As with any new practice, there is a learning curve and investment of time and effort to get started. However, the payoff usually comes fairly quickly, and good tests actually keep paying off over time.

Before I reveal a few properties of automated testing that enable this payoff, does anyone want to guess what they might be?

[ WAIT FOR RESPONSES ]

- They’re an essential ingredient of continuous integration pipelines

  Without automated tests, code submitted to your CI pipeline may build, and even run, but you may miss tons of preventable bugs creeping in. Then you’re stuck figuring out which change introduced a problem, and when, and why, instead of catching it immediately.

- They take the burden off of human reviewers, QA, users to catch everything

  The whole point of continuous integration and automated testing is to keep humans from getting burned out or becoming scared to change anything.

- When written well, they’re fast, reliable, and pinpoint specific problems

  Good tests not only enable humans to avoid toil and fear when they consistently pass, but also when they fail for good reason. They’re like production alerts pointing us at a specific problem from a specific code change.

- They help increase velocity, reduce risk, and enable frequent merges by ensuring correctness automatically

  Because good tests run fast, reliably, and pinpoint specific problems, that means we humans can do more interesting work at a faster pace. We don’t fear change, and can make more changes faster when we trust our tests.

- You spend more time delivering value, less time firefighting, debugging, fixing

  The ultimate result is that we feel more productive and more satisfied in our work, because we aren’t chasing down preventable problems all the time. This also makes us more valuable to the company, which should be good for our careers.

Questions or comments?

[ WAIT FOR RESPONSES ]

All this raises the question, though, of what makes for a “good” automated test.

The Test Pyramid

The Test Pyramid model can help us do a better job of validating our expectations by helping us write more effective automated tests.

Has anyone heard of the Test Pyramid before? If so, how would you describe it?

[ WAIT FOR RESPONSES ]

I like to describe it as:

A balance of tests of different sizes for different purposes.

It’s not a perfect model—no model is—but it’s an effective tool for starting a productive conversation about testing strategy.

The same information as above, but in a scrollable HTML table:

| Size | Scope | Ownership | Code visibility | Dependencies | Control/ Reliability/ Independence | Resource usage/ Maintenance cost | Speed/ Feedback loop | Confidence |
|---|---|---|---|---|---|---|---|---|
| Large (System, E2E) | Entire system | QA, some developers | Details not visible | All | Low | High | Slow | Entire system |
| Medium (Integration) | Components, services | Developers, some QA | Some details visible | As few as possible | Medium | Medium | Faster | Contract between components |
| Small (Unit) | Functions, classes | Developers | All details visible | Few to none | High | Low | Fastest | Low level details, individual changes |

It helps us understand how different kinds of tests give us confidence in different levels and properties of the system. It can also help us break the habit of writing large, expensive, flaky tests by default.

- Small tests are unit tests that validate only a few functions or classes at a time with very few dependencies, if any. They often use test doubles in place of production dependencies to control the environment, making the tests very fast, independent, reliable, and cheap to maintain. Their tight feedback loop enables developers to detect and repair problems very quickly that would be more difficult and expensive to detect with larger tests. They can also be run in local and virtualized environments and can be parallelized.

- Medium tests are integration tests that validate contracts and interactions with external dependencies or larger internal components of the system. While not as fast or cheap as small tests, by focusing on only a few dependencies, developers or QA can still run them somewhat frequently. They detect specific integration problems and unexpected external changes that small tests can’t, and can do so more quickly and cheaply than large system tests. Paired with good internal design, these tests can ensure that test doubles used in small tests remain faithful to production behavior.

- Large tests are full, end to end system tests, often driven through user interface automation or a REST API. They’re the slowest and most expensive tests to write, run, and maintain, and can be notoriously unreliable. For these reasons, writing large tests by default for everything is especially problematic. However, when well designed and balanced with smaller tests, they cover important use cases and user experience factors that aren’t covered by the smaller tests.

Thoughtful, balanced strategy == Reliability, efficiency

Each test size validates different properties that would be difficult or impossible to validate using other kinds of tests. Adopting a balanced testing strategy that incorporates tests of all sizes enables more reliable and efficient development and testing—and higher software quality, inside and out.

Any questions before we move on?

[ WAIT FOR RESPONSES ]

Inverted Test Pyramid

Thinking about test sizes helps avoid writing too many larger tests and not enough smaller tests, a common antipattern known as the Inverted Test Pyramid.

This leads to a number of common problems:

- Tests tend to be larger, slower, less reliable

  The tests are slower and less reliable than they could be compared to relying more on smaller tests.

- Broad scope makes failures difficult to diagnose

  Because large tests execute so much code, it might not be easy to tell what caused a failure.

- Greater context switching cost to diagnose/repair failure

  That means developers have to interrupt their current work to spend significant time and effort diagnosing and fixing any failures.

- Many new changes aren’t specifically tested because “time”

  Since most of the tests are large and slow, this incentivizes developers to possibly skip writing or running them because they “don’t have time.”

- People ignore entire signal due to flakiness…

  Worst of all, since large tests are more prone to be flaky,1 people will begin to ignore test failures in general. They won’t believe their changes cause any failures, since the tests were failing before—they might even be flagged as “known failures.”2

- …fostering the Normalization of Deviance

  This cultivates a phenomenon called the “Normalization of Deviance.”

Normalization of Deviance

Coined by Diane Vaughan in The Challenger Launch Decision

Diane Vaughan introduced this term in her book about the Space Shuttle Challenger explosion in January 1986.3 My paraphrased version of the definition is:

A gradual lowering of standards that becomes accepted, and even defended, as the cultural norm.

The explosion of the Space Shuttle Challenger shortly after takeoff on January 28, 1986 exposed the potentially deadly consequences of common organizational failures.

Image from https://commons.wikimedia.org/wiki/File:Challenger_explosion.jpg. In the public domain from NASA.

She derived this concept from the fact that nearly everyone ignored performance deviations in the rocket booster O-rings from 14 of the previous 17 missions. Those who did express concern were pressured and overruled, since a launch is very expensive and nothing bad had yet happened.

You may not work on Space Shuttle software, but the same principle applies. A culture of ignoring problems often leads to much bigger problems.

Inverted Test Pyramid

Causes

Let’s go over some of the reasons why the Inverted Test Pyramid and Normalization of Deviance often take shape in an organization.

- Features prioritized over internal quality/tech debt

  People are often pressured to continue working on new features that are “good enough” instead of reducing technical debt. This may be especially true for organizations that set aggressive deadlines and/or demand frequent live demonstrations.4

- “Testing like a user would” is more important

  Again, if “testing like a user would” is valued more than other kinds of testing, then most tests will be large and user interface-driven.

- Reliance on more tools, QA, or infrastructure (Arms Race)

  This also tends to instill the mindset that the testing strategy isn’t a problem, but that we always need more tools, infrastructure, or QA headcount. I call this the “Arms Race” mindset.

- Landing more, larger changes at once because “time”

  Because the existing development and testing process is slow and inefficient, individuals try to optimize their productivity by integrating large changes at once. These changes are unlikely to receive either sufficient testing or sufficient code review, increasing the risk of bugs slipping through. It also increases the chance of large test failures that aren’t understood. The team is inclined to tolerate these failures, because there isn’t “time” to go back and redo the change the right way.

- Lack of exposure to good examples or effective advocates

  As mentioned before, many people haven’t actually witnessed or experienced good testing practices before, and no one is advocating for them. This instills the belief that the current strategy and practices are the best we can come up with.

- We tend to focus on what we directly control—and what management cares about! (Groupthink)

  In such high stress situations, it’s human nature to focus on doing what seems directly within our control in order to cope. Alternatively, we tend to prioritize what our management cares about, since they have leverage over our livelihood and career development. It’s hard to break out of a bad situation when feeling cornered—and too easy to succumb to Groupthink without realizing it.

The point is that merely automating our tests isn’t enough; we have to be thoughtful about automating them the right way. The sooner we adopt good coding and testing habits, the greater our chances of avoiding these negative outcomes.

Any questions?

[ WAIT FOR RESPONSES ]

Key Concepts

Principles, practices, and shared language

Even though we now know that not one test size fits all, there are a few key concepts informing effective tests of all sizes.

- Tests should be designed to fail: naming and organization can clarify intent and cause of failure

  The goal of testing isn’t to make sure tests always pass no matter what. The goal is to write tests that let us know, reliably and accurately, when our expectations of the code’s behavior differ from reality.

- Use the Arrange-Act-Assert pattern (a.k.a. Given-When-Then) to keep tests focused and readable

  Use the Arrange-Act-Assert (or Given-When-Then) pattern to separate the setup, execution, and validation phases of each test case. This helps ensure each test case remains understandable by keeping each one focused on a specific behavior.

- Extract helpers to reduce complicated setup, execution, or assertions

  Extract helper methods, classes, or even subsystems to ensure test cases remain brief and read clearly. This is especially important for operations that may be shared across several tests, but can be valuable when writing even a single test case sometimes.

- Interfaces/seams enable composition, dependency breaking w/ test doubles

  We often have to work with legacy code bases with few tests, if any. So we also need to make safe changes to existing code that enable us to begin improving code quality and adding tests. Michael Feathers’s Working Effectively with Legacy Code is the seminal tome on this subject, showing how to gently break dependencies to introduce seams. “Seams” are points at which we introduce abstract interfaces that enable test doubles to stand in for our dependencies, making tests faster and more reliable.

Speaking of interfaces, Scott Meyers, of Effective C++ fame, gave perhaps the best design advice of all for writing testable, maintainable, understandable code in general:

“Make interfaces easy to use correctly and hard to use incorrectly.”

To propose a slight update to make it more concrete:

“Make interfaces easy to use correctly and hard to use incorrectly—like an electrical outlet.”

—With apologies to Scott Meyers, The Most Important Design Guideline?

I took this picture of an electrical outlet myself, because I couldn’t find a good free one.

Of course, it’s not impossible to misuse an electrical outlet, but it’s a common, wildly successful example that people use correctly most of the time. Making software that easy to use or change correctly and as hard to do so incorrectly may not always be possible—but we can always try.
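To make the Arrange-Act-Assert pattern and the "extract helpers" advice from the list above a bit more concrete, here's a minimal sketch in plain Java with no test framework. The `Playlist` class, the `playlistWith` helper, and the test names are all hypothetical and aren't part of the example project; a real test would normally use JUnit assertions instead of throwing `AssertionError` by hand.

```java
// Hypothetical class under test; not part of the example project.
class Playlist {
    private final String[] songs = new String[10];
    private int count = 0;

    void add(String title) {
        if (title == null || title.trim().isEmpty()) {
            throw new IllegalArgumentException("title must not be blank");
        }
        songs[count++] = title;
    }

    int size() {
        return count;
    }
}

class PlaylistTest {
    // Extracted helper: keeps the Arrange phase of each test case brief.
    static Playlist playlistWith(String... titles) {
        Playlist playlist = new Playlist();
        for (String title : titles) {
            playlist.add(title);
        }
        return playlist;
    }

    // The name states the expected behavior, so a failure explains itself.
    static void addingASongIncreasesSizeByOne() {
        // Arrange
        Playlist playlist = playlistWith("Blue in Green");

        // Act
        playlist.add("So What");

        // Assert
        if (playlist.size() != 2) {
            throw new AssertionError("expected size 2, got " + playlist.size());
        }
    }

    static void addingABlankTitleIsRejected() {
        // Arrange
        Playlist playlist = playlistWith();
        boolean rejected = false;

        // Act
        try {
            playlist.add(" ");
        } catch (IllegalArgumentException e) {
            rejected = true;
        }

        // Assert
        if (!rejected || playlist.size() != 0) {
            throw new AssertionError("blank title should be rejected");
        }
    }

    public static void main(String[] args) {
        addingASongIncreasesSizeByOne();
        addingABlankTitleIsRejected();
        System.out.println("all tests passed");
    }
}
```

Each test case exercises one behavior, and the three phases are visually separate, so a reader can tell at a glance what was set up, what was done, and what was expected.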

Dependency Injection

Interfaces, or seams, are what enable us to apply the “dependency injection” technique to keep our code isolated from its production dependencies. This makes the code easier to test, of course, but it also makes the design of the system more understandable and maintainable in general.

All “dependency injection” means is:

Receiving references to collaborator interfaces as function parameters instead of creating or accessing collaborator implementations directly

So here’s a small example of code that doesn’t use dependency injection:

Before:

    public Consumer() {
        this.foo = new ProdFoo();
        this.bar = ProdBar.instance();
    }

Consumer creates one collaborator and accesses a singleton instance directly. This means that to test Consumer, we also have to manage the setup and execution of ProdFoo and ProdBar. These dependencies can be complex, slow, and difficult to control, making testing Consumer painful and unreliable.

This is how the code looks after updating it to inject these dependencies:

After:

    public Consumer(FooProducer foo, BarProducer bar) {
        this.foo = foo;
        this.bar = bar;
    }

We no longer create ProdFoo or access ProdBar directly, but we work with their abstract interfaces instead. Now our tests can use lightweight test doubles to exercise Consumer in isolation, yet easily configure Consumer with its production dependencies at runtime.
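Here's a small, self-contained sketch of what the injected version of Consumer might look like when filled out. The original slides don't show the members of FooProducer or BarProducer, so the single `produce()` method on each, along with the `combine()` method, is an assumption made purely for illustration.

```java
// Hypothetical single-method interfaces; the original doesn't show their members.
interface FooProducer {
    String produce();
}

interface BarProducer {
    String produce();
}

class Consumer {
    private final FooProducer foo;
    private final BarProducer bar;

    // Collaborators arrive as parameters; Consumer never creates or looks them up.
    Consumer(FooProducer foo, BarProducer bar) {
        this.foo = foo;
        this.bar = bar;
    }

    // Hypothetical behavior that depends on both collaborators.
    String combine() {
        return foo.produce() + "-" + bar.produce();
    }
}

class ConsumerDemo {
    public static void main(String[] args) {
        // In a test, lambdas can serve as trivial stand-ins for production dependencies.
        Consumer consumer = new Consumer(() -> "foo", () -> "bar");
        System.out.println(consumer.combine());
    }
}
```

Production code would pass real implementations to the same constructor, so nothing in Consumer changes between test and production wiring.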

Test Doubles

Does anyone already know what I mean by the term “test doubles?”

[ WAIT FOR RESPONSES ]

Test doubles are…

Lightweight, controllable objects replacing production dependencies

I always love using my favorite concrete analogy here: practicing alone through a practice amp before plugging into a wall of Marshalls onstage. You still have to rehearse and do a soundcheck with the band, but you need to get your own chops into shape first.

Like test doubles, practice amps make it faster, easier, and less nerve-racking to check your work before going into production.

This image was derived from: Marshall MS-2 Micro Amp (direct link); Reverb: A History Of Marshall Amps: The Early Years (direct link).

Because test doubles are so lightweight and controllable, they enable us to write faster, more reliable, more thorough tests for small components in isolation. Another major benefit is that they enable separate individuals or teams to work on separate components in parallel, provided stable component interface contracts. We’ll see a sample of how this works when we walk through the example project.
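As a rough illustration of "lightweight and controllable," here's a hand-written test double sketch. Every name in it (`MessageSender`, `RecordingSender`, `Welcomer`) is hypothetical and unrelated to the example project. The double records how it was called and lets the test decide what it returns, including a failure that would be hard to trigger on demand with a real service.

```java
// Hypothetical collaborator interface; production code might send email or SMS.
interface MessageSender {
    boolean send(String recipient, String message);
}

// Test double: records calls and returns whatever the test tells it to.
class RecordingSender implements MessageSender {
    boolean nextResult = true;
    int calls = 0;
    String lastRecipient;

    @Override
    public boolean send(String recipient, String message) {
        calls++;
        lastRecipient = recipient;
        return nextResult;
    }
}

// Hypothetical class under test, which only knows about the interface.
class Welcomer {
    private final MessageSender sender;

    Welcomer(MessageSender sender) {
        this.sender = sender;
    }

    String welcome(String user) {
        boolean sent = sender.send(user, "Welcome, " + user + "!");
        return sent ? "sent" : "queued for retry";
    }
}

class WelcomerDemo {
    public static void main(String[] args) {
        RecordingSender sender = new RecordingSender();
        Welcomer welcomer = new Welcomer(sender);

        System.out.println(welcomer.welcome("pat"));

        // Simulate an outage without touching any real infrastructure.
        sender.nextResult = false;
        System.out.println(welcomer.welcome("sam"));
    }
}
```

Because Welcomer depends only on the interface, someone else could build the real sender in parallel, as long as both sides honor the same contract.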

Dependency Injection (continued)

Extract a pure abstract interface from concrete dependencies, then have the dependency—and test doubles—implement the interface.

This is what enabling dependency injection looks like from the point of view of a production dependency that you control.

Before:

    class ProdFoo {
        public ProdFoo() {
            // initialize prod dependencies
        }
    }

We extract a pure abstract interface based on the existing ProdFoo interface and update ProdFoo to implement that interface explicitly.

After:

    class ProdFoo implements Foo {
        public ProdFoo() {
            // initialize prod dependencies
        }
    }

That also sets us up for our next step, implementing a test double to stand in for the production dependency:

    class TestFoo implements Foo {
        public TestFoo() {
            // initialize test dependencies
        }
    }

Usually this is pretty easy, but there are circumstances where doing this in legacy code may not prove so straightforward. The good news is that Michael Feathers’s book provides techniques for getting there one step at a time, but that’s for another presentation.

Dependency Injection with Internal API

For external dependencies, use an Internal API wrapper/adapter/proxy for testability and to limit exposure to changes beyond your control.

Before:

    class ExternalFoo {
        public ExternalFoo() {
            // initialize prod dependencies
        }
    }

Dependency injection also enables us to reduce our system’s exposure to external dependencies by wrapping them with our own “internal API.”

After:

    class FooWrapper implements Foo {
        private ExternalFoo foo;

        public FooWrapper(ExternalFoo f) {
            this.foo = f;
        }
    }

This makes it easier to write isolated integration tests against the dependency, called “contract” or “collaboration tests.” At the same time, it becomes easier to test the rest of our system, while using an API that better matches our application domain.

It also helps avoid version or vendor lock-in. If something in the dependency changes, or a major upgrade comes out, you generally only have to update a single class, not your whole system. Trying or switching to a different implementation, such as moving from MySQL to Postgres, becomes much easier.
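A fuller sketch of the wrapper idea might look like the following. The `rawLookup` method on ExternalFoo and the `valueFor` method on Foo are invented for this illustration; the point is only that the awkward parts of the external API (a null return and a "key=value" string, in this made-up case) stay inside one class.

```java
// Stand-in for a third-party class we don't control (hypothetical API).
class ExternalFoo {
    // Returns "key=value", or null when the key is missing.
    String rawLookup(String key) {
        return "greeting".equals(key) ? "greeting=hello" : null;
    }
}

// Our internal API, expressed in terms the application cares about.
interface Foo {
    String valueFor(String key, String fallback);
}

// The only class that knows about ExternalFoo's quirks.
class FooWrapper implements Foo {
    private final ExternalFoo foo;

    FooWrapper(ExternalFoo foo) {
        this.foo = foo;
    }

    @Override
    public String valueFor(String key, String fallback) {
        String raw = foo.rawLookup(key);
        if (raw == null) {
            return fallback;
        }
        return raw.substring(raw.indexOf('=') + 1);
    }
}

class FooWrapperContractCheck {
    public static void main(String[] args) {
        // A contract-style check runs the wrapper against the real dependency.
        Foo foo = new FooWrapper(new ExternalFoo());
        System.out.println(foo.valueFor("greeting", "n/a"));
        System.out.println(foo.valueFor("missing", "n/a"));
    }
}
```

The rest of the system depends only on Foo, so swapping ExternalFoo for another vendor's class would mean writing a second wrapper and rerunning the same contract checks against it.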

Dependency Injection with Weld

You shouldn’t need a framework, but when in Rome…

For some reason, the Java community thought it was a good idea to develop dependency injection frameworks instead of wiring up dependencies directly from main(). In particular, the Servlet API insists on instantiating Servlets with a zero-argument constructor. So instead of constructor injection, you have two options: create an injectable parent class, and derive a subclass with production dependencies; or use a framework.

Normally I’d avoid DI frameworks, but given their prevalence, I’ve used Weld, the reference implementation of Contexts and Dependency Injection (CDI), for this example. In a lot of cases, you can still use constructor injection by adding the @Inject annotation to the constructor.

Preferred:

    class Servlet extends HttpServlet {
        private Calc calculator;

        @Inject
        public Servlet(Calc calc) {
            this.calculator = calc;
        }
    }

Weld will then find the right implementation at runtime, based on annotations added to your production classes and other configuration magic.

However, servlets are instantiated using zero-argument constructors, so this isn’t an option for them; you have to use private field injection.

Last resort (ick! but if necessary):

    class Servlet extends HttpServlet {
        @Inject private Calc calculator;
    }

At runtime, the container—Tomcat in our case—will instantiate the Servlet, and then allow Weld to initialize the private field. I find this personally weird and distasteful, but that’s the enterprise Java ecosystem for you.

However, you can still use both constructor and field injection in the same class. We’ll see in the example code where we use constructor injection in our smaller tests, and field injection in our larger tests.
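For comparison, the framework-free option mentioned earlier (an injectable parent class plus a production subclass) could be sketched roughly as follows. This leaves out HttpServlet and Weld entirely so it runs with only the JDK; the class names and the placeholder logic in `ProdCalc` are assumptions for illustration, not code from the example project.

```java
// Hypothetical collaborator interface.
interface Calc {
    int add(String numbers);
}

// Stand-in for the production implementation; the logic is only a placeholder.
class ProdCalc implements Calc {
    @Override
    public int add(String numbers) {
        return numbers.isEmpty() ? 0 : Integer.parseInt(numbers.trim());
    }
}

// Injectable parent: holds the logic and receives its collaborator via constructor.
class InjectableHandler {
    private final Calc calc;

    InjectableHandler(Calc calc) {
        this.calc = calc;
    }

    String handle(String input) {
        return "result: " + calc.add(input);
    }
}

// Production subclass: supplies the zero-argument constructor a container requires.
class ProdHandler extends InjectableHandler {
    public ProdHandler() {
        super(new ProdCalc());
    }
}

class HandlerDemo {
    public static void main(String[] args) {
        // Tests construct the parent directly with a double.
        System.out.println(new InjectableHandler(numbers -> 7).handle("ignored"));
        // The container would construct the subclass with no arguments.
        System.out.println(new ProdHandler().handle("5"));
    }
}
```

The trade-off is one extra class per injectable component, in exchange for not needing any annotations or container configuration to test the parent.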

Dependency Injection (continued again)

Here’s the ultimate point of dependency injection:

Prefer interface-based composition to implementation inheritance because it’s more explicit, understandable, flexible, less error-prone due to invisible default behaviors—and builds faster.

Interfaces/Seams + Composition >>> Implementation Inheritance

Let’s break down why this is the case. I’m going to break the golden rule of not reading a slide verbatim, because I think it’s worth being totally explicit here, however boring.

- The biggest obstacle to good testing is large, complicated, slow, unreliable dependencies that you can’t control—especially inherited ones.

- The next biggest obstacle is using large tests by default for everything and making them too specific to remain reliable as the system evolves.

- Interfaces/seams enable composable design via dependency injection.

- DI provides control over dependency behavior in tests, enabling thorough testing of each component in isolation, reducing reliance on larger tests.

Any questions or comments?

[ WAIT FOR RESPONSES ]

Overview of Example Architecture and Technology

Now let’s see these concepts in action in the example project.

Goals of the Example Project

As we skim through the code, please keep in mind the specific goals I was aiming for while creating it. Again, I’m going to be boring and just read the points here.

I wanted to…

- Go beyond a basic, isolated exercise to demonstrate tests at every level of the Test Pyramid—showing how unit testing is essential to the big picture

- Resemble a production application, with minimal functionality and complexity to allow the design and testing strategy to stand out

- Show how to develop and test every level of the system independently, while integrating into a cohesive whole—via interfaces, dependency injection, test doubles

- Provide ample examples and documentation to support further study

Full Test Suite and App Demo

github.com/mbland/tomcat-servlet-testing-example

Here we go…

[ FOLLOW LINK AND SKIM AROUND USING THE FOLLOWING SLIDES TO ANCHOR THE OVERVIEW ]

Example Architecture and Technology

- Build system: Gradle 8.5 + pnpm 8.11

- Backend: Java 21, Tomcat

- Frontend: JavaScript 2023, JavaScript Modules, Handlebars.js

- Frontend development + build: Vite, rollup-plugin-handlebars-precompiler

Example Testing Technology

- Java small + medium tests: JUnit 5.0, Weld (for prod dependency injection), Hamcrest matchers

- Java large end-to-end tests: Selenium WebDriver, headless Chrome for Testing

- Frontend tests: Vitest, JSDom, headless Chrome for Testing

- Continuous Integration and reporting: GitHub Actions

- Coverage reporting: JaCoCo (Java), Node.js + Istanbul (JS), coveralls.io

Reusable Test Helpers and Other Packages

Utilities that have proven useful beyond a single project

- test-page-opener (npm): enables validating initial page state without Selenium

- rollup-plugin-handlebars-precompiler (npm): began as a test helper, led to core architectural pattern

- TestTomcat (Java): runs Tomcat in the same process for medium JUnit and large Selenium tests

Tomcat Servlet Testing Example technology matrix

Here’s a matrix mapping most of these technologies to their role in the frontend or backend relative to each test size.

The same information as above, but in a scrollable HTML table:

| Size | Frontend / JavaScript / pnpm | Backend / Java / Gradle + pnpm |
|---|---|---|
| Large (System, E2E) | N/A | Selenium WebDriver; TestTomcat, Weld, headless Chrome; (No code coverage for large tests) |
| Medium (Integration) | Vitest + Vite; JSDom, Chrome, test-page-opener; Istanbul, Node.js | JUnit 5, Hamcrest matchers; TestTomcat, StringCalculator doubles; JaCoCo (for smaller medium tests) |
| Small (Unit) | Vitest + Vite; JSDom, headless Chrome; Istanbul, Node.js | JUnit 5; No test helpers in this example; JaCoCo |

Key:

- Testing framework

- Test helpers

- Coverage library

Other info:

- CI: GitHub Actions

- Coverage report: coveralls.io

Gradle configuration

strcalc/build.gradle.kts, et al.

- Also: settings.gradle.kts, gradle.properties, gradle/libs.versions.toml

- Builds and tests both the frontend and backend project components

- Centered around the Gradle War Plugin—frontend builds into strcalc/build/webapp, backend Tomcat config in strcalc/src/webapp

- Custom schema to separate Small/Medium/Large Java tests

- Several other little tricks, documented in the comments

Handlebars component pattern

Wraps the Handlebars API to precompile + bundle via Vite, Rollup

- Handlebars templates reside in `*.hbs` files

- Components using them reside in corresponding `*.js` files

- Vite/Vitest uses rollup-plugin-handlebars-precompiler to transform `*.hbs` files into JavaScript modules containing precompiled Handlebars templates

- The `*.hbs` files are imported directly, `Template()` returns DocumentFragment

- Each `*.hbs` + `*.js` component tested by corresponding `*.test.js`

Page Object Pattern

selenium.dev/documentation/test_practices/encouraged/page_object_models

- Useful for large Selenium tests, some small frontend component tests

- Encapsulates details of a specific page’s structure

- Exposes meaningful methods used to write tests

- Doesn’t contain assertions, but makes test assertions easier to write and read

- Can be composed of Page Objects representing individual components (though this example doesn’t go that far)
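The properties listed above can be sketched without a browser. In the following, `Driver` is a hypothetical, stripped-down stand-in for Selenium WebDriver, and the selectors, `CalculatorPage`, and `FakeDriver` are all invented for illustration; the example project's actual page objects may look quite different.

```java
// Hypothetical, minimal browser abstraction standing in for Selenium WebDriver.
interface Driver {
    void type(String selector, String text);
    void click(String selector);
    String textOf(String selector);
}

// Page Object: the only place that knows the page's selectors.
class CalculatorPage {
    private final Driver driver;

    CalculatorPage(Driver driver) {
        this.driver = driver;
    }

    // Meaningful methods for tests to call; note there are no assertions here.
    CalculatorPage enterNumbers(String numbers) {
        driver.type("#numbers", numbers);
        return this;
    }

    CalculatorPage submit() {
        driver.click("#submit");
        return this;
    }

    String result() {
        return driver.textOf("#result");
    }
}

// Fake driver so this sketch runs without a browser; it just echoes the input.
class FakeDriver implements Driver {
    private String typed = "";
    private String result = "";

    @Override
    public void type(String selector, String text) {
        typed = text;
    }

    @Override
    public void click(String selector) {
        result = "Echo: " + typed;
    }

    @Override
    public String textOf(String selector) {
        return "#result".equals(selector) ? result : "";
    }
}

class PageObjectDemo {
    public static void main(String[] args) {
        CalculatorPage page = new CalculatorPage(new FakeDriver());
        System.out.println(page.enterNumbers("1,2").submit().result());
    }
}
```

If the page's markup changes, only CalculatorPage needs updating; the tests keep calling `enterNumbers`, `submit`, and `result`.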

Test-Driven Development with the String Calculator Kata

Now that you’ve got the lay of the land, let’s add the core business logic to our application using Test-Driven Development.

The Red-Green-Refactor cycle

The core process of TDD consists of three steps known as the “Red-Green-Refactor” cycle:

- Write a failing test before writing the production code.

- Make the test pass by writing only enough production code to do so.

- Improve code quality by refactoring the existing code before writing the next failing test.

Any questions so far?

[ WAIT FOR RESPONSES ]

Refactoring

As defined by Martin Fowler in Refactoring

For those who are familiar with the term, how would you define “refactoring”?

[ WAIT FOR RESPONSES ]

This is how Martin Fowler, the author of the Refactoring book, defines the term:

Refactoring is a disciplined technique for restructuring an existing body of code, altering its internal structure without changing its external behavior.

Its heart is a series of small behavior preserving transformations. Each transformation (called a “refactoring”) does little, but a sequence of these transformations can produce a significant restructuring. Since each refactoring is small, it’s less likely to go wrong. The system is kept fully working after each refactoring, reducing the chances that a system can get seriously broken during the restructuring.

I placed the emphasis on “small behavior preserving transformations” and “the system is kept fully working.” Refactoring is not rewriting the whole thing, or breaking it for days or weeks on end. Having good tests is essential to being able to refactor a little bit at a time, all the time, to keep code healthy. This way you aren’t ever tempted to attempt big, risky rewrites.
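As one small illustration of a behavior-preserving transformation, here's a sketch of an "Extract Method" refactoring. The receipt example is hypothetical and has nothing to do with the example project. The check at the end plays the role of the tests: it confirms that the external behavior is identical before and after.

```java
// Before: one method mixes summing and formatting.
class ReceiptBefore {
    static String receipt(int[] pricesInCents) {
        int total = 0;
        for (int price : pricesInCents) {
            total += price;
        }
        return "Total: $" + (total / 100) + "." + String.format("%02d", total % 100);
    }
}

// After: same external behavior, with each step extracted and named.
class ReceiptAfter {
    static String receipt(int[] pricesInCents) {
        return "Total: " + formatDollars(sum(pricesInCents));
    }

    private static int sum(int[] values) {
        int total = 0;
        for (int value : values) {
            total += value;
        }
        return total;
    }

    private static String formatDollars(int cents) {
        return "$" + (cents / 100) + "." + String.format("%02d", cents % 100);
    }
}

class RefactoringCheck {
    public static void main(String[] args) {
        int[][] inputs = {{}, {199, 250}, {1000, 5}};
        for (int[] input : inputs) {
            String before = ReceiptBefore.receipt(input);
            String after = ReceiptAfter.receipt(input);
            if (!before.equals(after)) {
                throw new AssertionError(before + " != " + after);
            }
        }
        System.out.println("behavior preserved");
    }
}
```

In practice the existing test suite does this job, which is why having good tests in place comes before refactoring.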

Any thoughts or questions about refactoring?

[ WAIT FOR RESPONSES ]

String Calculator Kata, Step 1

Let’s dive into our TDD example, the String Calculator by Roy Osherove.

- Create a StringCalculator with the method: int Add(String numbers)

- Take up to two numbers, separated by commas, and return their sum

- For example: “” (return 0) or “1” or “1,2” as inputs

- Start with the empty string, then move to one number, then two numbers

- Solve things simply; force yourself to write tests you might not think about

- Refactor after each passing test

Setup Process

This is how we’re going to get started:

- git clone https://github.com/mbland/tomcat-servlet-testing-example

- Set up and test basic infrastructure (this part’s already done)

- Write a test that fails to compile—compilation failures are failures!

- Write just enough code for the test to compile and pass

- It may take a while to set up new infrastructure, but it pays off immediately

Setup Process Demo

[ PERFORM THE FIRST PART OF THE DEMO, RUNNING ALL TESTS AS THE FIRST STEP ]

Setup Process Steps

Breaking it down microscopically

Here you can see this is exactly what I just did.

| Build, then add empty test | ✅ |

| Instantiate class | ❌ |

| Create class | ✅ |

| Call calc.add(""), expect 0 | ❌ |

| Create calc.add(), return 0 | ✅ |

I made sure the project could build before I did anything else, then followed the steps to introduce a failing test before making a change. Any questions?

[ WAIT FOR RESPONSES ]
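The setup steps in the table above might look roughly like this in plain Java. This is a minimal sketch, not the code from the example repository: the check in main() stands in for a JUnit test case so the block compiles on its own, and StringCalculatorSetupCheck is a hypothetical name.

```java
// Step 3: create the class so the test compiles.
class StringCalculator {
    // Step 5: just enough code to make the first test pass.
    int add(String numbers) {
        return 0;
    }
}

// Hypothetical stand-in for a test class, written without JUnit.
class StringCalculatorSetupCheck {
    public static void main(String[] args) {
        // Step 2: this line failed to compile until StringCalculator existed.
        StringCalculator calc = new StringCalculator();

        // Step 4: this call failed to compile until add() existed.
        int result = calc.add("");
        if (result != 0) {
            throw new AssertionError("expected 0 for the empty string, got " + result);
        }
        System.out.println("add(\"\") returned " + result);
    }
}
```

Returning a hard-coded 0 looks too simple, but it is all the only existing test demands. The next failing test is what forces a more general implementation.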

Red-Green-Refactor Process

Now I’ll follow the process to add one piece of behavior at a time:

- Decide on a behavior to implement

- Write a failing test for the new piece of behavior

- Write just enough code to get the new test to pass

- Improve the existing code if desired, ensuring all tests continue to pass

- Repeat for next behavior

Red-Green-Refactor Process Demo

[ PERFORM THE SECOND PART OF THE DEMO, RUNNING ALL TESTS AS THE LAST STEP ]

Red-Green-Refactor Process Steps

Again, you can see this is exactly what I just did.

| Add test for “1” | ❌ |

| Implement behavior | ✅ |

| Add test for “1,2” | ❌ |

| Implement behavior | ✅ |

| Refactor | ✅ |

Add a failing test, add enough code to make it pass while ensuring all other tests pass, then refactor if desired. We proceed methodically, leaving things broken for only a few minutes or seconds. That way we remain in control of the changes we’re making at every step.

Questions?

[ WAIT FOR RESPONSES ]
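One possible end state after the steps above is sketched below. It is an illustration under assumptions, not the demo's actual code: the checks in main() stand in for JUnit test cases, and StringCalculatorChecks is a hypothetical name.

```java
import java.util.Arrays;

class StringCalculator {
    int add(String numbers) {
        // "" -> 0, the behavior established during setup.
        if (numbers.isEmpty()) {
            return 0;
        }
        // Covers both "1" and "1,2". A first pass might special-case each
        // input; the refactor step can then collapse those cases into one
        // expression while every existing test stays green.
        return Arrays.stream(numbers.split(","))
                .mapToInt(Integer::parseInt)
                .sum();
    }
}

// Hypothetical plain-Java stand-in for the test class.
class StringCalculatorChecks {
    public static void main(String[] args) {
        StringCalculator calc = new StringCalculator();
        expect(0, calc.add(""));
        expect(1, calc.add("1"));
        expect(3, calc.add("1,2"));
        System.out.println("all checks passed");
    }

    static void expect(int expected, int actual) {
        if (expected != actual) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }
}
```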

String Calculator Kata Exercise Instructions

Now it’s your turn to have some fun!

[ BRIEFLY REVIEW THE GUIDANCE BEFORE BEGINNING THE EXERCISE ]

- git clone https://github.com/mbland/tomcat-servlet-testing-example

- Consider finding a partner to pair program (encouraged, not required)

- Try to finish step 2: Allow add() to handle an unknown amount of numbers

- Go further if there’s time, but don’t feel pressured to do the whole thing

- Call out if you have any questions or need any assistance

- See you in 15 minutes!

[ ASK RETURNING PARTICIPANTS HOW THE EXERCISE WENT ]

Really Tying the Room Together

Now that you’re aware of the Test Pyramid, and have seen it in action, I’d like to share some more helpful details and guiding principles.

StringCalculator could be…

…any self-contained component of business logic

For our purposes, StringCalculator represents any arbitrary dependency or piece of business logic within an entire system. The point is that with the right interface, we can work more easily with any implementation, such as:

- A Database or HTTP API access layer

- A service/servlet from another process, local or remote

- A backend…which we’re already substituting in the frontend!

- A test double…which we’re already using in ServletContractTest!

Any questions or comments?

[ WAIT FOR RESPONSES ]
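To make the "right interface" idea concrete, here is a minimal sketch in which client code depends only on an interface. Every name in it (Calculator, SimpleCalculator, StubCalculator, AddHandler) is hypothetical and chosen for illustration; none is taken from the example repository.

```java
// Hypothetical interface; its name and shape are assumptions.
interface Calculator {
    int add(String numbers);
}

// A "real" implementation holding some business logic.
class SimpleCalculator implements Calculator {
    @Override
    public int add(String numbers) {
        return numbers.isEmpty() ? 0 : Integer.parseInt(numbers);
    }
}

// A test double: a stub that returns a canned value regardless of input.
class StubCalculator implements Calculator {
    private final int cannedResult;

    StubCalculator(int cannedResult) {
        this.cannedResult = cannedResult;
    }

    @Override
    public int add(String numbers) {
        return cannedResult;
    }
}

// Client code knows only the interface, so any implementation will do.
class AddHandler {
    private final Calculator calculator;

    AddHandler(Calculator calculator) {
        this.calculator = calculator;
    }

    String handle(String input) {
        return "{\"result\": " + calculator.add(input) + "}";
    }
}
```

A test can build new AddHandler(new StubCalculator(42)) and check the response format without caring how sums are computed, while production code passes a real implementation.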

Testing, “Mocking,” and DI Frameworks

Here are a few things to keep in mind about the various frameworks that are available to help you write tests.

- Testing frameworks help organize common setup, teardown, and other details into “test fixtures” to keep individual test cases small and focused

Actual basic testing frameworks exist to help organize your individual test cases while sharing common resources and setup and teardown methods. These clusters of shared resources and methods are known as “test fixtures”.

- JUnit, Vitest, and many others descend from the xUnit concept

Most common testing frameworks descend from the popular xUnit pattern in some way, the most obvious and well known being JUnit. However, even more recent frameworks like pytest, mocha, Vitest, etc., still implement many core xUnit concepts in their own way.

- “Mocking” frameworks are helpful for creating test doubles, but not essential

So-called “mocking” frameworks make it easier to write and maintain test doubles. I say so-called because a “mock” is only one kind of test double, and is actually the kind you should use as a last resort. Stubs, spies, and fakes are preferable in most cases. Either way, “mocking” framework is the name that has stuck, and while these frameworks are often quite convenient, they’re not essential. You can still write your own test doubles without them if need be.

- You don’t need a dependency injection framework—main() will do…

In general, a dependency injection framework is never really necessary. You can always wire up your object graph in main() or using your testing framework’s test fixture mechanism.

- …unless a Java library practically forces you to use one (e.g., Tomcat + Weld)

However, you may encounter DI frameworks in the Java world, especially when implementing code to plug into an enterprise framework like Tomcat. Technically, you can still apply DI without a framework even in this case, but it’s arguably better to just reach for the nearest framework instead.

Any questions?

[ WAIT FOR RESPONSES ]
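As a sketch of the last few points, the following shows a hand-written spy and an object graph wired up in main(), with no mocking or DI framework involved. All of the names (Notifier, ConsoleNotifier, SpyNotifier, SumReporter, ReporterApp) are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical dependency of the code under test.
interface Notifier {
    void send(String message);
}

// Production implementation; it prints instead of doing real I/O in this sketch.
class ConsoleNotifier implements Notifier {
    @Override
    public void send(String message) {
        System.out.println(message);
    }
}

// Hand-written spy: records every call so a test can inspect it afterward.
class SpyNotifier implements Notifier {
    final List<String> sent = new ArrayList<>();

    @Override
    public void send(String message) {
        sent.add(message);
    }
}

// The code under test receives its dependency through the constructor.
class SumReporter {
    private final Notifier notifier;

    SumReporter(Notifier notifier) {
        this.notifier = notifier;
    }

    void report(int a, int b) {
        notifier.send("sum=" + (a + b));
    }
}

class ReporterApp {
    // main() wires up the production object graph by hand.
    public static void main(String[] args) {
        new SumReporter(new ConsoleNotifier()).report(1, 2);
    }
}
```

A test constructs SumReporter with a SpyNotifier, calls report(1, 2), and then checks that the spy's sent list contains exactly "sum=3".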

Test Fixtures

Shared state, helpers, and lifecycle methods

Let’s learn some details about how JUnit and Vitest, in particular, help us organize our test cases into test fixtures.

- describe in Vitest, normal class with @Test methods in JUnit

Test fixtures are encapsulated within describe blocks in Vitest. Old versions of JUnit used to require inheriting from a base class, and some other common frameworks still require this. JUnit 5, however, considers any class containing a method with an @Test annotation to be a test fixture.

- Add data members, objects, and helpers as necessary

Fixtures should contain any data members, objects, and helper functions or objects your test cases require. The testing framework will use the fixture object to set up and tear down these shared resources automatically.

- beforeAll runs before all test methods, beforeEach runs before each

In both frameworks, you’ll see functions named or annotated with the name beforeAll or beforeEach. The framework calls beforeAll to set up any necessary state before running any of the test case methods, and beforeEach before every one of them.

- Converse for afterEach, afterAll

The converse is true of the afterEach and afterAll methods, which are used to clean up any state generated by test cases.

- setUp and tearDown variations correspond to beforeEach, afterEach

These were historically named setUp and tearDown, but the before and after names have become more popular recently.

- before/after methods ensure consistent environment for every test case

The point of the before and after methods is to ensure every test case runs under a consistent and correct environment.

In summary, test fixtures keep the logic for each test case small, focused, and separate from other test cases. This makes tests easier to write, understand, and maintain. Questions?

[ WAIT FOR RESPONSES ]
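The call order described above can be sketched in plain Java. This is a simulation under assumptions, not JUnit's or Vitest's API: ListFixture and TinyRunner are hypothetical, and the runner only imitates what a real framework does with a fixture.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical fixture: shared state, lifecycle hooks, and two test cases.
class ListFixture {
    static final List<String> calls = new ArrayList<>(); // records call order
    private List<Integer> items;                          // per-test state

    static void beforeAll() { calls.add("beforeAll"); }
    static void afterAll()  { calls.add("afterAll"); }

    void beforeEach() { items = new ArrayList<>(); calls.add("beforeEach"); }
    void afterEach()  { items = null; calls.add("afterEach"); }

    void startsEmpty() {
        calls.add("startsEmpty");
        require(items.isEmpty());
    }

    void holdsAddedItem() {
        calls.add("holdsAddedItem");
        items.add(42);
        require(items.size() == 1);
    }

    private static void require(boolean condition) {
        if (!condition) throw new AssertionError("test case failed");
    }
}

// Hypothetical stand-in for a framework's test runner.
class TinyRunner {
    static List<String> run() {
        ListFixture.calls.clear();
        List<Consumer<ListFixture>> tests = new ArrayList<>();
        tests.add(ListFixture::startsEmpty);
        tests.add(ListFixture::holdsAddedItem);

        ListFixture.beforeAll();
        for (Consumer<ListFixture> test : tests) {
            ListFixture fixture = new ListFixture(); // fresh state for every test case
            fixture.beforeEach();
            try {
                test.accept(fixture);
            } finally {
                fixture.afterEach();                 // runs even if the test case throws
            }
        }
        ListFixture.afterAll();
        return new ArrayList<>(ListFixture.calls);
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Running main() prints the hooks and test cases in order: beforeAll once, then beforeEach, the test case, and afterEach for each case, then afterAll once. Neither test case can see state left behind by the other.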

Test Naming

No, a rose by any other name will not smell as sweet.

Good names for your tests can go a long way towards helping people understand the expected behavior of the code under test. Choose them carefully.

- Include the class and/or function or method name in the fixture name

The name of the class, function, or method under test should appear in the name for the entire fixture. This helps keep the individual test case names short and focused.

- Have the name tell you something concrete when you read it and when it fails

For test case names in particular, make sure each one says something clear and concrete about the behavior under test and the expected outcome. Good tests will fail when they should, and good names help you diagnose and fix problems efficiently.

- A bad test case name requires deeper investigation: testFoo()

Consider a bad test name such as testFoo(). What is that supposed to tell us, especially if it fails?

- Better test case names in FooTest fixture indicate nature of tests/failures: returnsBarOnSuccess(), throwsFooExceptionIfBarUnavailable()

On the other hand, if the returnsBarOnSuccess() test case fails, we know that the function Foo() didn’t succeed or returned the wrong value. Or if throwsFooExceptionIfBarUnavailable() fails, Foo() threw the wrong exception, or the Bar service was unexpectedly available. Already we’ve got lots more information before even looking at the failure details than we’d ever get from testFoo().

Questions?

[ WAIT FOR RESPONSES ]

Test Organization

Make tests readable by making information explicit, yet concise.

Choosing good names also coincides with organizing test logic well.

- Long test cases with short names are doing too much to understand easily…

If you see a long test function with a short name, it’s almost certainly doing too much. Diagnosing failures is going to take more work than should be necessary.

- …but don’t go overboard with overly microscopic cases and long names

At the same time, it’s possible to split hairs to the point of obscuring meaningful behaviors. Test cases should be short and sweet, but not necessarily microscopic. Long names can become almost as difficult to parse as long test functions.

- Test cases can look very similar, so long as differences jump out

It’s OK for test cases to look like they duplicate some code, as they may contain similar helper and assertion calls. This isn’t a problem as long as the differences between helper and assertion parameters easily stand out.

- Avoid conditionals—test cases should read and behave like individual requirements

Having any sort of branches in immediate test case logic is an antipattern. Well written tests don’t force the reader to reason about what the test is actually doing, but essentially declare expected behaviors.

- Write setup helpers, custom assertions for common operations (possibly containing conditionals)

Custom helper functions, classes, and assertions also help keep test cases concise and readable. They can contain conditional logic if need be, though branches and loops shouldn’t execute based on state beyond the control of the test fixture.

Questions?

[ WAIT FOR RESPONSES ]
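A small sketch of these points, assuming a plain-Java stand-in for a JUnit fixture: the test cases look alike and contain no branches, while the one conditional lives in a custom assertion. StringCalculatorChecks and assertSum are hypothetical names.

```java
class StringCalculator {
    int add(String numbers) {
        if (numbers.isEmpty()) {
            return 0;
        }
        int sum = 0;
        for (String part : numbers.split(",")) {
            sum += Integer.parseInt(part);
        }
        return sum;
    }
}

// Hypothetical fixture, written without JUnit so it compiles on its own.
class StringCalculatorChecks {
    private final StringCalculator calc = new StringCalculator();

    // Custom assertion: the only place in this fixture that contains a branch.
    private void assertSum(int expected, String input) {
        int actual = calc.add(input);
        if (actual != expected) {
            throw new AssertionError(
                "add(\"" + input + "\"): expected " + expected + " but got " + actual);
        }
    }

    // Each case reads like a single requirement; only the parameters differ.
    void emptyStringReturnsZero()    { assertSum(0, ""); }
    void singleNumberReturnsItself() { assertSum(1, "1"); }
    void twoNumbersReturnTheirSum()  { assertSum(3, "1,2"); }
}
```

The three cases are nearly identical on purpose. Because the shared shape is constant, the expected value and the input are the only things the reader has to compare.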

Avoid table-driven tests

Wrong trade-off between duplication and maintainability

Some people believe that it’s useful to organize test inputs and outputs as a table, implemented by iterating over an array of structures. While this may reduce some duplication, it’s frequently the wrong tradeoff, and can prove less maintainable over time.

- Tables can be OK in some cases, but are often the wrong choice

Sometimes table-driven tests aren’t so bad, especially if the interface and underlying behavior are relatively narrow and stable. They’re still the wrong choice for readable, maintainable tests more often than not.

- They reduce duplication, but break when input+expected data structures change, often contain conditionals, are harder to understand when they fail

This is because even though they may reduce some duplication, they’re easily broken when things change. Updates to the inputs or expected values of the structure can require updating all the elements in the table. The function iterating over the table may contain conditionals based on the inputs, which makes understanding the test more difficult. When such tests fail, it can require extra work to identify what about the input records caused the test function to fail.

- Prefer separate, individual test cases to avoid lazy, sloppy testing

Iterating over a bunch of table records usually isn’t necessary to achieve proper test coverage. It may be a sign of laziness, whereas separate, individual test cases are usually more clear while requiring fewer inputs to achieve sufficient coverage.

- Prefer to write custom validation functions with meaningful names instead

At the very least, writing separate individual validation functions with good names can avoid the need for inline conditional logic.

- Then collapse very closely related inputs into related cases if appropriate

You can then collapse closely related input structures into separate test cases, and invoke the appropriate custom validator functions for each. This can help tests become reasonably concise, while ensuring multiple related input cases are handled consistently.

Questions?

[ WAIT FOR RESPONSES ]
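The trade-off can be seen by writing the same checks both ways. This is a sketch under assumptions: the rule that negative numbers are rejected is assumed purely for illustration, and TableDrivenChecks, SeparateChecks, assertSum, and assertRejected are hypothetical names.

```java
class StringCalculator {
    int add(String numbers) {
        if (numbers.isEmpty()) return 0;
        int sum = 0;
        for (String part : numbers.split(",")) {
            int n = Integer.parseInt(part);
            // Assumed rule, included only so the tests need two kinds of outcome.
            if (n < 0) throw new IllegalArgumentException("negatives not allowed: " + n);
            sum += n;
        }
        return sum;
    }
}

// Table-driven style: one loop, an inline conditional, and a column that is
// meaningless for some rows.
class TableDrivenChecks {
    static final Object[][] ROWS = {
        // input, expected sum, should throw?
        {"",     0, false},
        {"1,2",  3, false},
        {"1,-2", 0, true},   // the 0 is ignored for this row
    };

    static void run() {
        StringCalculator calc = new StringCalculator();
        for (Object[] row : ROWS) {
            String input = (String) row[0];
            int expected = (Integer) row[1];
            boolean shouldThrow = (Boolean) row[2];
            if (shouldThrow) {
                try {
                    calc.add(input);
                } catch (IllegalArgumentException e) {
                    continue; // expected outcome for this row
                }
                throw new AssertionError("row failed: " + input);
            } else if (calc.add(input) != expected) {
                throw new AssertionError("row failed: " + input);
            }
        }
    }
}

// Separate cases with named validators: no branches in the test cases, and a
// failure names the behavior that broke.
class SeparateChecks {
    static final StringCalculator calc = new StringCalculator();

    static void assertSum(int expected, String input) {
        int actual = calc.add(input);
        if (actual != expected) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }

    static void assertRejected(String input) {
        try {
            calc.add(input);
        } catch (IllegalArgumentException e) {
            return; // expected outcome
        }
        throw new AssertionError("add(\"" + input + "\") should have thrown");
    }

    static void emptyStringReturnsZero()   { assertSum(0, ""); }
    static void twoNumbersReturnTheirSum() { assertSum(3, "1,2"); }
    static void negativeNumberIsRejected() { assertRejected("1,-2"); }
}
```

Both styles pass today. The difference shows up when a row needs a new column or a check fails: the table reports an input string, while the separate case reports negativeNumberIsRejected.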

Test-Driven Development

Essential principles

Let’s reflect upon the reasons why we should consider adopting Test-Driven Development as an everyday practice. We’ll start by considering how it helps us follow key principles that generally lead to positive outcomes.

- Essentially about reducing unnecessary complexity, risk, waste, and suffering

The biggest principle is that it helps us reduce unnecessary complexity, risk, waste, and suffering by helping catch most problems and errors immediately. Every bug we catch with a test before merging or deploying is one that won’t cost us time, money, sleep, and sanity later.

- Is an effective methodology to aid understanding—not a shortcut or a religion

It also helps us increase and maintain our understanding of the system, and foster a shared understanding across the team as well. It’s not a shortcut in any way, and definitely not a religion, as it involves challenging our assumptions about reality, not taking them on faith.

- More about developing a “Quality Mindset” than a specific technique or tech

Ultimately TDD is about developing a “Quality Mindset” to ensure and maintain high software quality over time. This mindset isn’t tied to any specific technique or technology.

- Is most accessible to newcomers via writing small unit tests…

It’s easiest for newcomers to learn about TDD and automated testing in general by starting to write small unit tests.

- …but applies to system design and testing at all levels of the Test Pyramid

However, the same general methodology and mindset applies when implementing any production code and tests at all levels of the Test Pyramid.

Questions?

[ WAIT FOR RESPONSES ]

Test-Driven Development goals

What we want to achieve on a technical level

Now let’s consider some of the technical benefits that Test-Driven Development affords.

- Focus on and simplify one behavior/problem at a time

By helping us build a comprehensive test suite over time, TDD also helps us to focus on a single behavior or problem at a time. If we break any tests, we can undo the damage easily, so we don’t have to worry about every system detail all at once.

- Make it easy to keep tests passing by not doing too much at once

Because we’re only doing one thing at a time, it’s also much easier to keep all the existing tests passing. Again, if a test fails, we know immediately, and we know it’s because of what we just did right now. We don’t have to untangle a web of possibilities, because we were only doing one thing at the moment.

- Keep tests isolated from each other, keep them fast

TDD encourages tests that are isolated from one another and are very fast. This stability and speed means we can run most tests all the time while we’re developing, further reducing the possibility of introducing defects.

- Control your dependencies so they don’t control you—flakiness is the enemy

TDD encourages you to ensure that your dependencies are under control by encouraging good interface design and the use of dependency injection. Controlling inputs to the code under test is key to ensuring tests don’t become complicated, slow, and flaky.

- Encourage the Single Responsibility Principle, composition, polymorphism

TDD encourages the Single Responsibility Principle, which is essentially ensuring that a piece of code is focused, simplified, and not doing too much at once. Applying good interface design and dependency injection helps ensure test isolation by encouraging a composition based design. Test doubles, in particular, rely on the polymorphism enabled by dependency injection in a composition based design. (This substitutability is what the Liskov Substitution Principle describes.)

- See: SOLID principles, FIRST principles

All of these benefits are also summed up by several sets of general principles, including SOLID and FIRST, that you can read about online.

Questions?

[ WAIT FOR RESPONSES ]
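As one illustration of controlling a dependency, consider the system clock. The sketch below injects a java.time.Clock so that tests can freeze time; the Greeter class, its greeting rule, and the check names are hypothetical.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneOffset;

// Hypothetical class whose output depends on the current time.
class Greeter {
    private final Clock clock;

    // The clock is injected, so the caller decides which time source to use.
    Greeter(Clock clock) {
        this.clock = clock;
    }

    String greeting() {
        int hour = LocalTime.now(clock).getHour();
        return hour < 12 ? "Good morning" : "Good afternoon";
    }
}

class GreeterChecks {
    // Helper that builds a Greeter frozen at the given UTC instant.
    static Greeter greeterAt(String isoInstant) {
        return new Greeter(Clock.fixed(Instant.parse(isoInstant), ZoneOffset.UTC));
    }

    static void saysGoodMorningBeforeNoon() {
        expect("Good morning", greeterAt("2024-01-01T09:00:00Z").greeting());
    }

    static void saysGoodAfternoonFromNoonOnward() {
        expect("Good afternoon", greeterAt("2024-01-01T12:00:00Z").greeting());
    }

    static void expect(String expected, String actual) {
        if (!expected.equals(actual)) {
            throw new AssertionError("expected \"" + expected + "\" but got \"" + actual + "\"");
        }
    }

    public static void main(String[] args) {
        saysGoodMorningBeforeNoon();
        saysGoodAfternoonFromNoonOnward();
        // Production wiring passes a real clock instead of a fixed one.
        System.out.println(new Greeter(Clock.systemDefaultZone()).greeting());
    }
}
```

Had Greeter called LocalTime.now() directly, the same test would pass in the morning and fail in the afternoon. Injecting the clock removes that source of flakiness without changing production behavior.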

Benefits of TDD/Testing/Testable Code

What we want to achieve on a business/quality of life level

So what do these general principles and technical benefits end up buying us, ultimately? What’s ultimately in it for us?

- Testable code/architecture is maintainable—tests add design pressure, enable continuous refactoring

Tests apply design pressure to ensure that the code we write is testable. Both the interfaces and implementation details of the code become easier to understand. A suite of fast, stable tests also enables us to maintain a high degree of code quality through continuous refactoring.

- Increased ability to work independently, especially for new hires

A good suite of tests reduces the time required to train and supervise new hires when it comes to making changes to the system. They can see what good code and tests look like from the start, and can fix their own mistakes independently.

- Streamlined code reviews by keeping focus on new/changed code

Combined with linting and static analysis tools, good tests streamline the code review process by keeping the focus on the new or changed code. The pressure is off the humans to catch problems detectable by automation. Reviews then become about whether the change is the right thing to do, or if there’s a better way to do it.

- Instill greater confidence and enable faster feature velocity, better reliability

Having fast, reliable tests pass with every change over time gives everyone greater confidence in the health of the system and in each new change. While it requires investment to reach this point, the payoff comes in greater long term feature velocity and reliability.

- Increased understanding—reduced complexity, risk, waste, and suffering

Essentially all of the benefits listed so far are a consequence of increased understanding of the system and the changes made to it over time. This, in turn, reduces complexity, risk, waste, and suffering, as high code quality helps developers prevent most problems. People end up with more bandwidth to handle any problems that still arise, which also arise at a far more manageable rate.

Questions?

[ WAIT FOR RESPONSES ]

Test First vs. Test With

Don’t think that if you don’t test first, you don’t test at all.

Traditional Test-Driven Development does encourage writing the tests before the production code. However, don’t get hung up on thinking that if you don’t test first, you can’t test at all.

- Test-First is great if you already know what you want, or if it helps you think through what you want

If you already know what you want to implement, or always writing the test first feels helpful, by all means, keep writing the tests first.

- Test-With requires more careful validation of results, but may fit your brain better than Test-First most of the time

However, it may feel more natural to start writing a bit of production code first to think things through. In that case, just make sure to write the tests as soon as you’ve written something you’re happy with. You may have to be more careful to ensure you’ve written enough tests and achieved enough code coverage, but such effort is definitely manageable.

- It’s more essential that there are good, fast, thorough, understandable, reliable tests with your changes than exactly when you wrote them.

It’s most important that there are good, fast, thorough, understandable, reliable tests with your code when you send it for review and merge it. When you wrote the tests is not as critical.

Questions?

[ WAIT FOR RESPONSES ]

Exploration, Settlement, and Vision

Writing the right tests when the time’s right

As a matter of fact, I like to think of software development happening in three phases—and I don’t necessarily advise testing during the first.

| Phase | Focus | To test or not to test? |

|---|---|---|

| Exploration | Learning the shape of the problem and solution spaces, rapid prototyping | Not: Still learning basic things, don’t commit too early |

| Settlement | Parts of the problem coming into focus, beginning to depend on core components | Test: Core components must keep working while exploring further |

| Vision | All expectations and the end goal are clear | Test: Confirm expectations hold up to reality, ensure others aligned with vision |

- In the Exploration phase, you’re only beginning to learn the shape of the problem you need to solve and its possible solutions. You should be prototyping quickly to learn basic facts and gain early experience rather than committing to an implementation too early. Any tests you write you might throw away once you learn a little bit more about the problem space.

- In the Settlement phase, certain parts of the problem are coming into focus, as are certain parts of the solution. Core components and abstractions emerge, upon which other parts of the system will depend. At this point, you absolutely should test these components to ensure they keep working as you further explore other parts of the system.

- In the Vision phase, practically the entire problem and its solution have come into focus. Now it becomes critical to test, to confirm that your expectations hold up to reality. Testing also becomes critical to ensure other programmers stay aligned with your vision and don’t make breaking changes.

Bear in mind that these phases aren’t necessarily linear. You will cycle through them as you focus on implementing different parts of the system. The idea is to be aware of which phase you’re in for the piece you’re working on at the moment. You can set aside testing when you’re still exploring, but had better get to it once you notice yourself settling on or envisioning a solution.

Questions?

[ WAIT FOR RESPONSES ]

Continuous integration/short lived branches

Break early, break often, fix immediately

Let’s see how automated testing also enables a continuous integration model that supports creating and merging many short lived branches.

- git is designed to enable frequent merging of many branches

Assuming you’re using git for source control, it was designed from the beginning to make creating and merging branches incredibly easy.

- Small, short-lived branches ensure changes stay in sync with one another

The idea is that by making branching and merging easy, you can do it all the time. This helps avoid the risk of long lived branches growing out of sync, creating integration nightmares.

- Automated testing is essential to validating this merging and synchronization

However, frequently merging branches and synchronizing changes between different developers still carries a degree of risk. Automated testing mitigates this risk, as developers will add or modify any tests as necessary to accommodate the changes within their branches.

- Continuous integration provides the system of record and source of truth

The continuous integration system that performs and/or validates every merge is the system of record and source of truth for the health of the system. The faster and more reliable the tests, the more efficient, trustworthy, and valuable the CI system becomes, making everyone more productive.

- Short-lived branches + automated testing == continuous integration

To sum up, combining many short lived branches with good automated testing yields a fast, reliable continuous integration system that helps everyone be more productive.

- Attention remains focused on code review, not fear or debugging

This is especially critical to ensure code reviews receive the focused attention they deserve, undiluted by the fear of making changes or avoidable debugging efforts.

Questions?

[ WAIT FOR RESPONSES ]

Code Coverage

Use it as a tool to provide visibility, not merely as a goal

We need to talk about code coverage, or the amount of code executed by a test or test suite. It’s often considered a goal to achieve by any means necessary or a useless metric. The truth is that it’s merely a tool, like a ruler, to provide visibility into where your testing may fall short.

- 100% is ideal, not strictly necessary—but exceptions should be noted

Achieving 100% coverage is a fine goal, but isn’t always practical. However, any exceptions should be noted, and possibly excluded from collection, making 100% coverage effectively possible.

- It can tell you when you’re definitely not done, not when you are done

Code coverage can’t really tell you when you’re done testing. It only definitively shows when you’re not done, by showing which code isn’t exercised by a test.

- How you get it is more important than how much you get

The raw code coverage percentage measurement carries very little meaning on its own. The important thing is how you reach a certain level of coverage.

- Collect from small-ish tests, each covering little, collectively covering much

You really only want to collect code coverage from small to medium-small tests, which individually only cover a small amount of code. Then the entire suite of small to medium-small tests should cover the entire code base in aggregate.

- Large-ish tests should define contract/use case coverage, not code coverage

Code coverage from large-ish tests is effectively useless, because they execute so much code at once without covering fine details and corner cases. These tests should focus on interface contract or use case coverage instead, as defined by requirements, specifications, use cases, or sprint features.

- Can help with aggressive refactoring when done well (extracting objects, etc.)

Despite these caveats, high code coverage collected properly can help you refactor aggressively, early and often, to keep code quality as high as possible. This translates into an improved process, product, and quality of life for everyone involved.

Questions?

[ WAIT FOR RESPONSES ]
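To illustrate why coverage shows when you're not done but not when you are, the sketch below contains a check that executes every line and branch of a method while verifying nothing, next to two checks that verify outcomes. Discount and the check names are hypothetical.

```java
// Hypothetical code under test.
class Discount {
    static int apply(int price, boolean member) {
        if (member) {
            return price - price / 10; // members get 10% off
        }
        return price;
    }
}

class CoverageChecks {
    // Executes both branches, so a coverage tool would report this method as
    // fully covered, yet nothing here can ever fail.
    static void runsEveryLine() {
        Discount.apply(100, true);
        Discount.apply(100, false);
    }

    // Same coverage as above, but each case checks an outcome.
    static void memberGetsTenPercentOff() {
        expect(90, Discount.apply(100, true));
    }

    static void nonMemberPaysFullPrice() {
        expect(100, Discount.apply(100, false));
    }

    static void expect(int expected, int actual) {
        if (expected != actual) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }
}
```

If someone changed the discount to 20%, runsEveryLine() would still pass and the coverage number would not move. Only the checks that assert on results would catch the change, which is why how you reach a coverage level matters more than the number itself.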

The Quality Mindset

Transcending test sizes, technologies, or application domains

I’d like to leave you with a few last guiding principles that can help you and your colleagues adopt the Quality Mindset. These aren’t all specific to automated testing, but they all support better testing, and better testing supports them all.

- Don’t submit copy/pasted code! Duplication hides bugs. (e.g., goto fail)

Do not submit duplicated code! Our brains like to detect patterns as a shortcut to understanding, and we can miss critical details when our brains see copy/pasted code. For a prime example, see the writeup I did on Apple’s 2014 goto fail bug, “Finding More Than One Worm in the Apple”.

- Send several small, understandable changes for review, not one big one

Try to keep code reviews small and focused, rather than cramming many changes into one. Build up a larger change from several small ones. This helps ensure that each detail receives the attention it deserves. You may also find it helps you design for testability, and write better tests due to this design pressure. (Exceptions to this advice would include large renaming changes or a large deletion. But each such change should still be in its own code review, not mixed with other changes.)

- Reproduce bugs with the smallest test possible, then fix them

Strive to reproduce every bug with the smallest test possible before fixing it. Doing so both validates that the fix works, and prevents a regression of that particular bug as long as the test remains in the suite.

- Strive to minimize unnecessary complexity, risk, waste, and suffering!

Apply the automated testing principles and practices from this presentation to minimize unnecessary complexity, risk, waste, and suffering, for yourselves and your customers.

- Strive to maximize clarity, confidence, efficiency, and delight!

Doing so will also maximize clarity, confidence, efficiency, and delight—which is why we all started writing software to begin with, right?

mike-bland.com

/test-pyramid-in-action

/making-software-quality-visible

Thank you so much for allowing me to share these ideas with you today. You can find the full presentation and example project on my blog at mike-bland.com/test-pyramid-in-action. If you want to go even deeper down the rabbit hole, please visit mike-bland.com/making-software-quality-visible.

Please don’t hesitate to reach out to me if you have questions or want to discuss these ideas further. You’ll find my contact information on the blog as well.

Thank you—and good luck!

Footnotes

- “Flaky” means that a test will seem to pass or fail randomly without a change in its inputs or its environment. A test becomes flaky when it’s either validating behavior too specific for its scope, or isn’t adequately controlling all of its inputs or environment—or both. Common sources of flakiness include system clocks, external databases, or external services accessed via REST APIs.

A flaky test is worse than no test at all. It conditions developers to spend the time and resources to run a test only to ignore its results. Actually, it’s even worse—one flaky test can condition developers to ignore the entire test suite. That creates the conditions for more flakiness to creep in, and for more bugs to get through, despite all the time and resources consumed.

In other words, one flaky test that’s accepted as part of Business as Usual marks the first step towards the Normalization of Deviance.

There are three useful options for dealing with a flaky test:

- If it’s a larger test trying to validate behavior too specific for its scope, relax its validation, replace it with a smaller test, or both.

- If what it’s validating is correct for its scope, identify the input or environmental factor causing the failure and exert control over it. This is one of the reasons test doubles exist.

- If you can’t figure out what’s wrong or fix it in a reasonable amount of time, disable or delete the test.

Retrying flaky tests is NOT a viable remedy. It’s a microcosm of what I call in this presentation the “Arms Race” mindset. Think about it:

- Every time a flaky test fails, it’s consuming time and resources that could’ve been spent on more reliable tests.

- Even if a flaky test fails on every retry, people will still assume the test is unreliable, not their code, and will merge anyway.

- Increasing retries only consumes more resources while enabling people to continue ignoring the problem when they should either fix, disable, or delete the test.

- Bugs will still slip through, introduce risk, and create rework even after all the resources spent on retries.

- The last thing you want to do with a flaky or otherwise consistently failing test is mark it as a “known failure.” This will only consume time and resources to run the test and complicate any reporting on overall test results.

Remember what tests are supposed to be there for: To let you know automatically that the system isn’t behaving as expected. Ignoring or masking failures undermines this function and increases the risk of bugs—and possibly even catastrophic system failure.

Assume you know that a flaky or failing test needs to be fixed, not discarded. If you can’t afford to fix it now, and you can still afford to continue development regardless, then disable the test. This will save resources and preserve the integrity of the unambiguous pass/fail signal of the entire test suite. Fix it when you have time later, or when you have to make the time before shipping.

Note I said “if you can still afford to continue development,” not “if you must continue development.” If you continue development without addressing problems you can’t afford to set aside, it will look like willful professional negligence should negative consequences manifest. It will reflect poorly on you, on your team, and on your company.

Also note I’m not saying all failures are necessarily worthy of stopping and fixing before continuing work. The danger I’m calling out is assuming most failures that aren’t quickly fixable are worth setting aside for the sake of new development by default. Such failures require a team discussion to determine the proper course of action—and the team must commit to a clear decision. The failure to have that conversation or to commit to that clear decision invites the Normalization of Deviance and potentially devastating risks. ↩

- I first learned about this concept from an Apple internal essay on the topic. ↩

- Frequent demos can be a very good thing—but not when making good demos is appreciated more than high internal software quality and sustainable development. ↩