This twelfth post in the Making Software Quality Visible series begins the next major section, The Test Pyramid and Vital Signs. We’ll use the Test Pyramid model and test sizes to introduce the principles underlying a sound testing strategy. We’ll also discuss the negative effects that its opposite, the Inverted Test Pyramid, imposes upon its unwitting victims. I’ll then describe how to use “Vital Signs” to get a holistic view of software quality for a particular project.

This section is quite packed with information, including several footnotes I’ll break out into separate posts. Today’s post will introduce the fundamental concept of the Test Pyramid by way of the positive “Chain Reaction” we hope to create with it.

I’ll update the full Making Software Quality Visible presentation as this series progresses. Feel free to send me feedback, thoughts, or questions via email or by posting them on the LinkedIn announcement corresponding to this post.

Continuing after Quality Work and Results Visibility…

The Test Pyramid and Vital Signs

Two important concepts for making software quality itself visible at a fundamental level are the Test Pyramid and Vital Signs.

First, let’s understand the specific problems we intend to solve by making software quality visible and improving it in an efficient, sustainable way.

Working back from the desired experience

Inspired by Steve Jobs Insult Response

In this famous Steve Jobs video, he explains the need to work backward from the customer experience, not forward from the technology. So let’s compare the experience we want ourselves and others to have with our software to the experience many of us may have today.

| What we want | What we have |
|---|---|
| Delight | Suffering |
| Efficiency | Waste |
| Confidence | Risk |
| Clarity | Complexity |

What we want

We want to experience Delight from using and working on high-quality software,1 which largely results from the Efficiency high-quality software enables. Efficiency comes from the Confidence that the software is in good shape, which arises from the Clarity the developers have about system behavior.

What we have

However, we often experience Suffering from using or working on low-quality software, reflecting a Waste of excess time and energy spent dealing with it. This Waste is the result of unmanaged Risk leading to lots of bugs and unplanned work. Bugs, unplanned work, Risk, and fear take over when the system’s Complexity makes it difficult for developers to fully understand the effect of new changes.

Difficulty in understanding changes produces drag, i.e., technical debt.

Difficulty in understanding how new changes could affect the system is the telltale sign of low internal quality, which drags down overall quality and productivity. The difference between actual and potential productivity, relative to internal quality, is technical debt.

This contributes to the common scenario of a crisis emerging…

Replace heroics with a Chain Reaction!

…that requires technical heroics and personal sacrifice to avert catastrophe. To get a handle on avoiding such situations, we need to create the conditions for a positive Chain Reaction.

By creating and maintaining the right conditions over time, we can achieve our desired outcomes without stress and heroics.

The main obstacle to replacing heroics with a Chain Reaction isn’t technology…

The challenge is belief—not technology

A little awareness goes a long way

…it’s an absence of awareness or belief that a better way exists.


Many of these problems have been solved for decades

Despite the fact that many quality and testing problems have been solved for decades…

Many just haven’t seen the solutions, or seen them done well…

…many still haven’t seen those solutions, or seen them done well.

The right way can seem easy and obvious—after someone shows you!

The good news is that these solutions can seem easy and obvious—after they’ve been clearly explained and demonstrated.2

What does the right way look like?

So how do we get started showing people what the right way to improve software quality looks like?

The Test Pyramid

A balance of tests of different sizes for different purposes

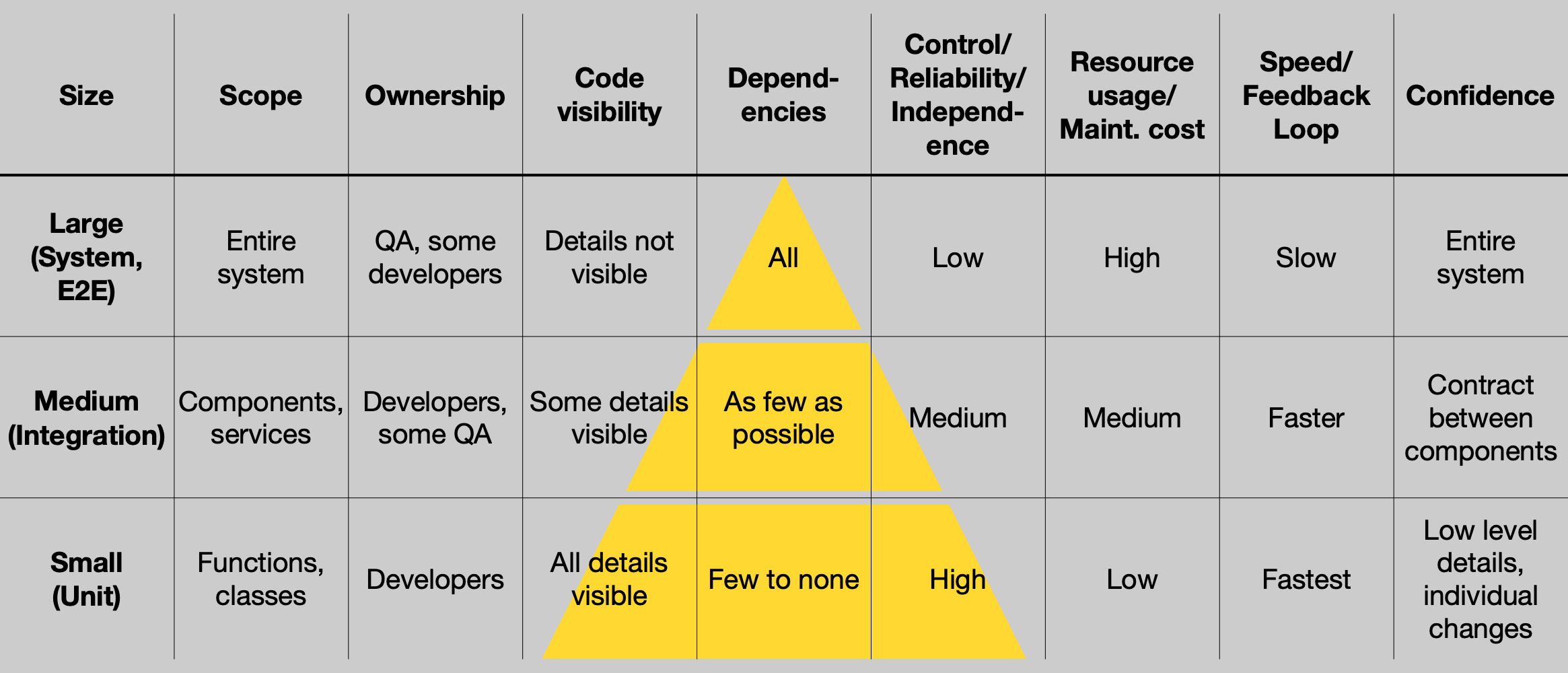

We’ll start with the Test Pyramid model,3,4 which represents a balance of tests of different sizes for different purposes.

Realizing that tests can come in more than one size is often a major revelation to people who haven’t yet been exposed to the concept. It’s not a perfect model—no model is—but it’s an effective tool for pulling people into a productive conversation about testing strategies for the first time.

(The same information as the image above, in table form:)

| Size | Scope | Ownership | Code visibility | Dependencies | Control/Reliability/Independence | Resource usage/Maintenance cost | Speed/Feedback loop | Confidence |
|---|---|---|---|---|---|---|---|---|
| Large (System, E2E) | Entire system | QA, some developers | Details not visible | All | Low | High | Slow | Entire system |
| Medium (Integration) | Components, services | Developers, some QA | Some details visible | As few as possible | Medium | Medium | Faster | Contract between components |
| Small (Unit) | Functions, classes | Developers | All details visible | Few to none | High | Low | Fastest | Low-level details, individual changes |

The Test Pyramid helps us understand how different kinds of tests give us confidence in different levels and properties of the system.5 It can also help us break the habit of writing large, expensive, flaky tests by default.6


Small tests are unit tests that validate only a few functions or classes at a time with very few dependencies, if any. They often use test doubles7 in place of production dependencies to control the environment, making the tests very fast, independent, reliable, and cheap to maintain. Their tight feedback loop8 enables developers to quickly detect and repair problems that would be more difficult and expensive to detect with larger tests. They can also run in local and virtualized environments and can be parallelized.
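To make test doubles and dependency injection concrete, here is a minimal Python sketch of a small test. The `PriceCalculator`, `TaxRates`, and `FakeTaxRates` names are hypothetical, invented for illustration; in a real system, `TaxRates` might front a remote service. The calculator receives its dependency through its constructor, so the test can hand it a fake whose behavior the test controls completely.

```python
from typing import Protocol


class TaxRates(Protocol):
    """Interface of a (hypothetical) production dependency, e.g. a tax service."""

    def rate_for(self, region: str) -> float: ...


class PriceCalculator:
    """Code under test. The dependency is injected via the constructor
    instead of being created internally, so a test can substitute a double."""

    def __init__(self, tax_rates: TaxRates) -> None:
        self._tax_rates = tax_rates

    def total(self, subtotal: float, region: str) -> float:
        if subtotal < 0:
            raise ValueError("subtotal must be non-negative")
        return round(subtotal * (1 + self._tax_rates.rate_for(region)), 2)


class FakeTaxRates:
    """Test double: implements the same interface, fully controlled by the test."""

    def __init__(self, rates: dict[str, float]) -> None:
        self._rates = rates

    def rate_for(self, region: str) -> float:
        return self._rates[region]


def test_applies_regional_tax() -> None:
    calc = PriceCalculator(FakeTaxRates({"CA": 0.10}))
    assert calc.total(100.0, "CA") == 110.0


def test_rejects_negative_subtotal() -> None:
    calc = PriceCalculator(FakeTaxRates({}))
    try:
        calc.total(-1.0, "CA")
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative subtotal")
```

A runner such as pytest would discover the `test_` functions automatically. Because nothing here touches the network, disk, or clock, tests like these run in milliseconds, parallelize safely, and should fail only when the calculator's own logic changes.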


Medium tests are integration tests that validate contracts and interactions with external dependencies or larger internal components of the system. While they’re not as fast or cheap as small tests, they focus on only a few dependencies, so developers or QA can still run them fairly frequently. They detect specific integration problems and unexpected external changes that small tests can’t, and can do so more quickly and cheaply than large system tests. Paired with good internal design, these tests can ensure that test doubles used in small tests remain faithful to production behavior.9
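The following sketch shows one way a medium test can keep a test double honest: run the same contract checks against both the in-memory fake that small tests use and a real implementation. All class and function names are hypothetical. Python's built-in `sqlite3` module with an in-memory database stands in for a production datastore, which in practice might be a separate server reached over the network.

```python
import sqlite3
from typing import Callable, Optional, Protocol


class UserStore(Protocol):
    def add(self, user_id: int, name: str) -> None: ...
    def name_of(self, user_id: int) -> Optional[str]: ...


class SqliteUserStore:
    """Real implementation backed by an actual database engine."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def add(self, user_id: int, name: str) -> None:
        try:
            with self._conn:  # commits on success, rolls back on error
                self._conn.execute(
                    "INSERT INTO users (id, name) VALUES (?, ?)", (user_id, name)
                )
        except sqlite3.IntegrityError as e:
            # Translate the driver-specific error into the contract's error type.
            raise ValueError(f"duplicate id {user_id}") from e

    def name_of(self, user_id: int) -> Optional[str]:
        row = self._conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


class FakeUserStore:
    """In-memory test double used by small tests."""

    def __init__(self) -> None:
        self._users: dict[int, str] = {}

    def add(self, user_id: int, name: str) -> None:
        if user_id in self._users:
            raise ValueError(f"duplicate id {user_id}")
        self._users[user_id] = name

    def name_of(self, user_id: int) -> Optional[str]:
        return self._users.get(user_id)


def check_user_store_contract(make_store: Callable[[], UserStore]) -> None:
    """The same assertions run against every implementation."""
    store = make_store()
    assert store.name_of(1) is None
    store.add(1, "Ada")
    assert store.name_of(1) == "Ada"
    try:
        store.add(1, "Grace")
    except ValueError:
        pass
    else:
        raise AssertionError("duplicate id should be rejected")
    assert store.name_of(1) == "Ada"  # the rejected write left no trace


def test_fake_honors_contract() -> None:
    check_user_store_contract(FakeUserStore)


def test_sqlite_store_honors_contract() -> None:
    check_user_store_contract(lambda: SqliteUserStore(sqlite3.connect(":memory:")))
```

If a later change to the real store, or to the database behind it, altered this behavior (say, by silently overwriting duplicates), the SQLite check would fail while the fake's check still passed. That signals that the fake, and the small tests relying on it, need updating.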


Large tests are full end-to-end system tests, often driven through user interface automation or a REST API. They’re the slowest and most expensive tests to write, run, and maintain, and can be notoriously unreliable. For these reasons, writing large tests by default for everything is especially problematic. However, when well designed and balanced with smaller tests, they cover important use cases and user experience factors that aren’t covered by the smaller tests.

Thoughtful, balanced strategy == Reliability, efficiency

Each test size validates different properties that would be difficult or impossible to validate using other kinds of tests. Adopting a balanced testing strategy that incorporates tests of all sizes enables more reliable and efficient development and testing—and higher software quality, inside and out.

Background on Test Sizes

(This section appears as a footnote in the original.)

The Testing Grouplet introduced the Small, Medium, Large nomenclature as an alternative to “unit,” “integration,” “system,” etc. This was because, at Google in 2005, a “unit” test was understood to be any test lasting less than five minutes. Anything longer was considered a “regression” test. By introducing new, more intuitive nomenclature and rigorously defining each size’s criteria for scope, dependencies, and resources, we inspired productive conversations.

The Bazel Test Encyclopedia and Bazel Common definitions use these terms to define maximum timeouts for tests labeled with each size. Neither document speaks to the specifics of scope or dependencies, but they do mention “assumed peak local resource usages.”
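For concreteness, the size-to-timeout mapping can be written as a small lookup table. This is a Python sketch, not Bazel code: the values reflect the Bazel Test Encyclopedia at the time of writing and may change, so check the current documentation before relying on them.

```python
# Default timeout category and duration Bazel associates with each test `size`,
# as listed in the Bazel Test Encyclopedia at the time of writing.
# An explicit `timeout` attribute on a test target overrides these defaults.
DEFAULT_TIMEOUT_BY_SIZE: dict[str, tuple[str, int]] = {
    "small": ("short", 60),
    "medium": ("moderate", 300),
    "large": ("long", 900),
    "enormous": ("eternal", 3600),
}


def default_timeout_seconds(size: str = "medium") -> int:
    """Return the default timeout, in seconds, for a test of the given size.

    Bazel treats a test with no `size` attribute as "medium", which the
    default argument mirrors.
    """
    try:
        return DEFAULT_TIMEOUT_BY_SIZE[size][1]
    except KeyError:
        raise ValueError(f"unknown test size: {size!r}") from None
```

Tying each label to a hard time budget gives the sizes teeth: a test labeled “small” that starts taking minutes will eventually time out, prompting a conversation about whether it’s really small.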

Avoiding specific test proportions

(This section appears as a footnote in the original.)

I deliberately avoid saying which specific proportion of test sizes is appropriate. The shape of the Test Pyramid implies that one should generally try to write more small tests, fewer medium tests, and relatively few large tests. Even so, it’s up to the team to decide, through their own experience, what the proportions should be to achieve optimal balance for the project. The team should also reevaluate that proportion as the system evolves, to maintain the right balance.

I also have scar tissue regarding this issue thanks to Test Certified. Intending to be helpful, we suggested a rough balance of 70% small, 20% medium, and 10% large as a general target. It was meant to be a rule of thumb, and a starting point for conversation and goal setting—not “The One True Test Size Proportion.” But OMG, the debates over whether those were valid targets, and how they were to be measured, were interminable. (Are we measuring individual test functions? Test binaries/BUILD language targets like cc_test? Googlers, at least back then, were obsessed with defining precise, uniform measurements for their own sake.)

On the one hand, lively, respectful, constructive debate is a sign of a healthy, engaged, dynamic community. However, this particular debate—as well as the one over the name “Test Certified”—seemed to miss the point, amounting to a waste of time. We just wanted teams to think about the balance of tests they already had and needed to achieve, and to articulate how they measured it. It didn’t matter so much that everyone measured in the exact same way, and it certainly didn’t matter that they achieve the same test ratios. It only mattered that the balance was visible within each individual project—and to the community, to provide inspiration and learning examples.
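Making the balance visible can be as simple as counting tests by size label and reporting each size’s share. The hypothetical `size_balance` function below is deliberately agnostic about what counts as “a test”: the caller supplies one size label per whatever unit the team chooses to measure (test functions, BUILD targets, or something else). It reports proportions without judging them against any target.

```python
from collections import Counter
from collections.abc import Iterable

SIZES = ("small", "medium", "large")


def size_balance(labels: Iterable[str]) -> dict[str, float]:
    """Return each test size's share of the total, as a percentage
    rounded to one decimal place."""
    counts = Counter(labels)
    unknown = set(counts) - set(SIZES)
    if unknown:
        raise ValueError(f"unrecognized size labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {size: 0.0 for size in SIZES}
    return {size: round(100 * counts[size] / total, 1) for size in SIZES}


if __name__ == "__main__":
    # Hypothetical project: 12 small, 6 medium, and 2 large tests.
    labels = ["small"] * 12 + ["medium"] * 6 + ["large"] * 2
    print(size_balance(labels))  # {'small': 60.0, 'medium': 30.0, 'large': 10.0}
```

Tracking such numbers over time, per project, is what makes the balance visible; whether a given split is “right” remains the team’s call.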

Consequently, while designing Quality Quest at Apple, we refrained from suggesting any specific proportion of test sizes, even as a starting point. The language of that program instead emphasized the need for each team to decide upon, achieve, and maintain a visible balance. We were confident that creating the space for the conversation, while offering education on different test sizes (especially smaller tests), would lead to productive outcomes.

The worst model (except for all the others)

(This section appears as a footnote in the original. The title here is an allusion to a famous Winston Churchill quote.)

Some have advocated for a different metaphor, like the “Testing Trophy” and so on, or for no metaphor at all. I understand the concern that the Test Pyramid may seem overly simplistic, or potentially misleading should people infer “one true test size proportion” from it. I also understand Martin Fowler’s concerns from On the Diverse And Fantastical Shapes of Testing, which essentially argues for using “Sociable vs. Solitary” tests. His preference rests upon the relative ambiguity of the terms “unit” and “integration” tests.

However, I feel this overcomplicates the issue while missing the point. Many people, even with years of experience in software, still think of testing as a monolithic practice. Many still consider it “common sense” that testing shouldn’t be done by the people writing the code. As mentioned earlier, many still think “testing like a user would” is “most important.” Such simplistic, unsophisticated perspectives tend to be resistant to nuance. People holding them need clearer guidance into a deeper understanding of the topic.

The Test Pyramid metaphor (with test sizes) is accessible to such people, who just haven’t been exposed to a nonmonolithic perspective on testing. It honors the fact that we were all beginners once (and still are in areas to which we’ve not yet been exposed). Once people have grasped the essential principles from the Test Pyramid model, it becomes much easier to have a productive conversation about effective testing strategy. Then it becomes easier and more productive to discuss sociable vs. solitary testing, the right balance of test sizes for a specific project, etc.

Coming up next

There’s a substantial amount of information to unpack from several of the footnotes in this section. The next two posts will unpack the more technical footnotes:

Then I’ll return to the idea that the main obstacle to replacing heroics with a Chain Reaction is belief, not technology. I have a few footnotes on forces of human nature that I want to highlight in their own post:

Then I’ll pick up the main narrative again, explaining the common phenomenon of the “Inverted Test Pyramid” and all its familiar consequences.

After that, I’ll do a deep dive into the concept of “Vital Signs.”

As always, if the suspense is killing you, feel free to read ahead in the main Making Software Quality Visible presentation.

Footnotes

-

My former Google colleague Alex Buccino made a good point during a conversation on 2023-02-01 about what “delight” means to a certain class of programmers. He noted that it often entails building the software equivalent of a Rube Goldberg machine for the lulz—or witnessing one built by another. I agreed with him that, maybe for that class of programmers, we should just focus on “clarity” and “efficiency”—which necessarily excludes development of such contraptions. ↩

-

The Egg of Columbus parable is my favorite illustration of this principle. ↩

-

Thanks to Scott Boyd for reminding me to emphasize the Test Pyramid as a key component of the testing conversation. ↩

-

Reproducing my footnote from Automated Testing—Why Bother?: Nick Lesiecki drew the original testing pyramid in 2005. No idea if there was prior art, but he didn’t consult it.

The pyramid was later popularized by Mike Cohn in The Forgotten Layer of the Test Automation Pyramid (2009) and Succeeding with Agile: Software Development Using Scrum (2009). Not sure if Mike had seen the Noogler lecture slide or had independently conceived of the idea, but he definitely was a visitor at Google at the time I was there. ↩

-

The “confidence” concept in the context of the Test Pyramid was hammered out by Nick Lesiecki, Patrick Doyle, and Dominic Cooney in 2009. ↩

-

Thanks to Oleksiy Shepetko for mentioning the maintenance cost aspect during my 2023-01-17 presentation to Ono Vaticone’s group at Microsoft. It wasn’t in the table at that time, and adding it afterward inspired this new, broad, comprehensive table layout. ↩

-

Test doubles are lightweight, controllable objects implementing the same interface as a production dependency. This enables the test author to isolate the code under test and control its environment very precisely via dependency injection. (“Dependency injection” is a fancy term for passing an object encapsulating a dependency into the code that uses it, as a constructor or function argument. This removes the need for that code to instantiate or access the dependency directly.)

I’ll expand on this topic extensively in a future post. ↩

-

Shoutout to Simon Stewart for being a vocal advocate of shorter feedback loops. See his Dopamine Driven Development presentation. ↩

-

Contract tests essentially answer the question: “Did something change that’s beyond my control, or did I screw something up?”

I’ll expand on this topic extensively in a future post. ↩