Copyright 2023 Mike Bland, licensed under the Creative Commons Attribution 4.0 International License (CC BY 4.0).

This presentation, Making Software Quality Visible, is my calling card. It describes my approach to driving software quality improvement efforts, especially automated testing, throughout an organization—applying systems thinking and leadership to influence its culture.

If you’d be interested in my help, please review the slides and script below. If my approach aligns with your sense of what your organization needs, please reach out to me at mbland@acm.org. (Also see my Hire me! page for more information.)

Note as of 2023-09-13: I’m currently updating the official version to include new changes as I continue writing the Making Software Quality Visible blog series. The Google Drive copies will remain out of sync with the official version until I’ve finished, at which point I’ll remove this notice.

Slides

Original slides:

- Official version: Making Software Quality Visible Keynote presentation

- Keynote and PDF copies: Google Drive folder


Table of contents

- Abstract

- Introduction

- Agenda

- Formative Experiences at Northrop Grumman, Google, and Apple

- Skills Development, Alignment, and Visibility

- The Test Pyramid and Vital Signs

- What Software Quality Is and Why It Matters

- Why Software Quality Is Often Unappreciated and Sacrificed

- Building a Software Quality Culture

- Calls to Action

- Acknowledgments

- History

- TODOs

- Footnotes

Abstract

We’ll discuss why internal software quality matters, why it’s often unappreciated and sacrificed, and what we can do to improve it. More to the point, we’ll discuss the importance of instilling a quality culture to promote the proper mindset first. Only on this foundation will seeking better processes, better tools, better metrics, or AI-generated test cases yield the outcomes we can live with.

Introduction

I’m Mike Bland, I’m a programmer, and I’m going to talk about how making software quality visible…

Software Quality must be visible to minimize suffering.

…will minimize suffering.1 By “suffering,” I mean: The common experience of software that’s painful—or even dangerous—to work on or to use.

By “software quality,” I mean: Confidence in the software’s behavior and user experience based on information and understanding. This is opposed to feeling anxious or overconfident about how the software will behave in the absence of information.

The way we produce accessible and useful information that enables understanding is by managing complexity effectively. If the information we need is obscured by complexity, we make bad assumptions and bad choices and suffer as a result. As we’ll see, software complexity is rooted not just in the code itself, but in our culture, which shapes our expectations, communications, and behaviors.2

Finally, by “making software quality visible,” I mean: Providing meaningful insight into quality outcomes and the work necessary to achieve them.

Quality work can be hard to see. It’s hard to value what can’t be seen—or to do much of anything about it.

This is important because it’s often difficult to show quality work, or its impact on processes or products.3 How do we show the value of avoiding problems that didn’t happen? This makes it difficult to prioritize or justify investments in quality, since people rarely value work or results they can’t see. Plus, people can’t effectively solve problems they can’t accurately sense or understand.

Agenda

There’s a big story behind how I arrived at these conclusions, and what I’ve learned about leading others to embrace them as well.

- Formative Experiences at Northrop Grumman, Google, and Apple

I’ll share examples of making quality work visible to minimize suffering from my experiences at Northrop Grumman, Google, and Apple.

- Skills Development, Alignment, and Visibility

I’ll share some ideas for cultivating individual skill development, team and organizational alignment, and visibility of quality work and its results.

- The Test Pyramid and Vital Signs

We’ll use the Test Pyramid model to specify the principles underlying a sound testing strategy. We’ll also discuss the negative effects that its opposite, the Inverted Test Pyramid, imposes upon its unwitting victims. I’ll then describe how to use “Vital Signs” to get a holistic view on software quality for a particular project.

- What Software Quality Is and Why It Matters

We’ll define internal software quality and explain why it’s just as essential as external.

- Why Software Quality Is Often Unappreciated and Sacrificed

We’ll examine several psychological and cultural factors that are detrimental to software quality.

- Building a Software Quality Culture

We’ll learn how to integrate the Quality Mindset into organizational culture, through individual skill development, team alignment, and making quality work visible.

- Calls to Action

We’ll finish with specific actions everyone can take to lead themselves and others towards making software quality work and its impact visible.

Formative Experiences at Northrop Grumman, Google, and Apple

The story begins with the fact that programming wasn’t my original plan.

How it started…

From the beginning, I’ve always loved music—so much so that my first attempt at college led me to Berklee College of Music in Boston. It wasn’t just the beauty of the music that was attractive, or what I then believed to be the glamorous lifestyle. It was the idea of being part of a band, part of a team, pushing boundaries and achieving great things together.

Rockin’ my Mom’s shag-tastic living room with a little help from Elvis, 1974; and Berklee College of Music, 1991.

Unfortunately—or fortunately?—the rock star plan didn’t work out, and I fell back on my second love: programming.4

Northrop Grumman Mission Systems

Navigation for US Coast Guard vessels and US Navy submarines

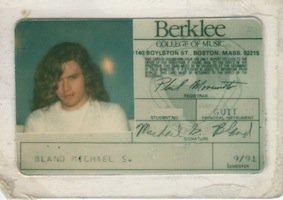

My first job as a software developer was working on US Coast Guard and US Navy nuclear submarine navigation systems at Northrop Grumman Mission Systems.

Digital Nautical Chart showing Fort Monroe and the north entrance to the Hampton Roads Bridge–Tunnel in Hampton, Virginia. Adapted from the original linked image from the Digital Nautical Chart® page of the National Geospatial-Intelligence Agency. Note that there are new National Geospatial-Intelligence and Digital Nautical Chart web sites, but I couldn’t find the original artifacts on either site.

Coast Guardsmen aboard U.S. Coast Guard Cutter Monomoy (WPB 1326) and U.S. Navy Los Angeles-class submarine, USS San Juan (SSN-751). I don’t know if software I wrote ever ran on these specific ships, but it certainly ran on ships like them.

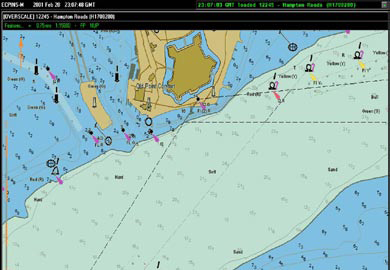

My “Library”

My colleagues at the time poked fun at me for carrying my “library” of programming and algorithms books with me every day. By studying these books, I was learning to see and understand what good code looked like.

Each image shows the edition of the book I used at the time. However, each image links to the most recent edition available at the time of writing. Note that the “Exceptional C++” link is not HTTPS. Original sources for each image: Effective C++; The C++ Programming Language; Modern C++ Design; Introduction to Algorithms; Exceptional C++; Design Patterns.

Then, my team went through a “death march.”

Death March

Experiencing “suffering” in software

I actually never read this book, but I thought showing it here would underscore how common the software death march experience really is. Original image source: Death March cover from Amazon.

A “death march” is a crushing experience of working insane hours to deliver on impossible requirements by an impossible deadline. This usually involves changing a fragile, untested code base that barely works to begin with, which was the case for our project. But somehow, we did it. We met the spec by the deadline, and then were given the freedom to do whatever we could to make the damn thing faster.

During this period of relative calm, I began to learn a few things.

Expectations: Requirements + Assumptions

The reason why poor quality can lead to suffering

I began to learn the relationship between code quality and expectations, which are the sum of requirements and unwritten assumptions.5

| Requirement | Enumerate chart features |
|---|---|
| Assumption | In-memory size == on-disk size |
| Reality | 21 bytes on disk, 24 in memory |
| Outcome | File size/24 == 12.5% data loss |
| Impact | Caught before shipping! |

Requirement: One day our product owner sent us some code to enumerate nautical chart features from a file.

Assumption: The code assumed each record was the same size in memory as it was on disk.

Reality: However, the records were 21 bytes on disk, but the in-memory structs were 24 bytes, thanks to byte padding.

Outcome: As a result, this code ignored one eighth of the chart features in the file. The product owner’s lack of complete understanding led to overconfidence that masked a potentially severe quality issue.

Impact: Fortunately I caught this before it shipped to any nuclear submarines.
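To make the arithmetic behind that one-eighth figure concrete, here is a minimal Python sketch. The two record sizes come from the example above; the function names and the 800-record file are hypothetical, chosen only for illustration, and this is not the original code.

```python
DISK_RECORD_SIZE = 21    # bytes per record as stored on disk (from the example)
MEMORY_STRUCT_SIZE = 24  # bytes per in-memory struct after padding (from the example)


def count_records(file_size: int, record_size: int) -> int:
    """Number of whole records of record_size bytes that fit in file_size bytes."""
    return file_size // record_size


def fraction_missed(actual_records: int) -> float:
    """Fraction of records skipped when the padded size is used as the divisor."""
    file_size = actual_records * DISK_RECORD_SIZE
    assumed_records = count_records(file_size, MEMORY_STRUCT_SIZE)
    return (actual_records - assumed_records) / actual_records


# Hypothetical file holding 800 chart features: 800 * 21 = 16,800 bytes.
file_size = 800 * DISK_RECORD_SIZE
print(count_records(file_size, DISK_RECORD_SIZE))    # 800
print(count_records(file_size, MEMORY_STRUCT_SIZE))  # 700
print(fraction_missed(800))                          # 0.125
```

A test that feeds the code a file with a known number of records, then checks the count it reports, would expose the mismatch right away.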

Not long after that…

Discovering unit testing—by accident

Curiosity and serendipity, not training or culture

I discovered unit testing, completely by accident, from Koss and Langr’s C/C++ Users Journal article “Test Driven Development in C/C++”.

With my library and my newfound testing practice in hand, I rewrote an entire subsystem from the ground up. I’d apply a design principle, or an algorithm, and exercise it with focused, fast, thorough tests every step of the way, rather than waiting for system integration. I could immediately see the results to validate that each part worked as planned, and spend more time developing than debugging.

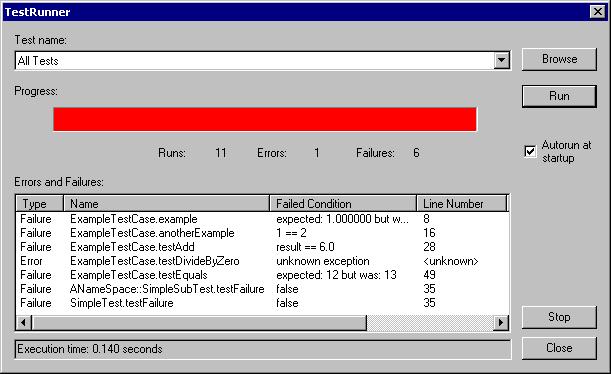

CppUnit test runner (from Cpp Unit, Windows Edition). I actually used the Qt GUI on Solaris at the time, but couldn’t find an image of it.

In the end, my new subsystem improved performance by a factor of 18 and saved the project. And when a bug or two cropped up, I could investigate, reproduce, fix, validate, and ship a new version the same day, not the next week or the next month.

I wish I could say I was well trained or mentored, or that the culture encouraged my development. The truth is I was entirely self-motivated. Although my teammates recognized my abilities, they never adopted my practices, despite their obvious impact. I couldn’t figure out why.

So I put my house on the market, quit my job, and met a woman on an online dating site whose referral led to me joining Google in 2005. In that order.

Google: Testing Grouplet

2005-2010, mike-bland.com/the-rainbow-of-death

At Google I joined the Testing Grouplet, a team of volunteers dedicated to driving automated testing adoption. My talk “The Rainbow of Death” tells the five-year story of the Grouplet. I’ll give you the fast-forwarded version of that talk right meow.6

- Rapid growth, hiring the “best of the best,” build/test tools not scaling

When I joined in 2005, the company was growing fast,7 and we knew we were “the best of the best.” However, our build and testing tools and infrastructure weren’t keeping up.

- Lack of widespread, effective automated testing and continuous integration; frequent broken builds and “emergency pushes” (deployments)

Developers weren’t writing nearly enough automated tests, the ones they wrote weren’t that good, and few projects used continuous integration. As a result, code frequently failed to compile, and errors that made it to production would frequently lead to “emergency pushes,” or deployments.

- Resistance: “I don’t have time to test,” “My code is too hard to test.”

We kept hearing that people didn’t have time to test, or that their code was too hard to test.

- Imposter syndrome, deadline pressure

Underneath that, we realized many suffered from imposter syndrome, while under intense deadline pressure.

- (Mostly) smart people who hadn’t seen a different way

Basically, these were (mostly) smart people who didn’t know what they didn’t know, and couldn’t afford to stop and learn everything at once.

We had to identify how to get the right knowledge and practices to spread over time.

Geoffrey A. Moore, Crossing the Chasm, 3rd Edition

Different people embrace change at different times

The “Crossing the Chasm” model from Geoffrey Moore’s book of the same name helps to make sense of our dilemma.8 At a high level, it illustrates how different segments of a population respond to a particular innovation.

- Innovators and Early Adopters are like-minded seekers, enthusiasts and visionaries who together bring an innovation to the market and lead people to adopt it. I like to lump them together and call them Instigators.

- The Early Majority are pragmatists who are open to the new innovation, but require that it be accessible and ready to use before adopting it.

- The Late Majority are followers waiting to see whether or not the innovation works for the Early Majority before adopting it.

- Laggards are the resisters who feel threatened by the innovation in some way and complain about it the most. They may raise valid concerns, but often they only bluster to rationalize sticking with the status quo.9

The Instigators face the challenge of bringing an innovation across The Chasm separating them from the Early Majority, developing what Moore calls The Total Product. Developing the Total Product requires that the Instigators identify and fulfill several needs the Early Majority has in order to facilitate adoption.

As Instigators, the Testing Grouplet focused its energy on connecting with other Instigators and the early Early Majority to deliver the Total Product. We largely ignored the highly vocal Laggards.10

The Rainbow of Death

mike-bland.com/the-rainbow-of-death

This connection across the chasm isn’t part of the original Chasm model, but one I borrowed from a friend11 and called “The Rainbow of Death.” It helps illustrate those Early Majority needs the Instigators must satisfy. Doing so transforms the Early Majority from being dependent on the Instigators’ expertise into independent experts themselves.

Five years of chaos…

…and one Rainbow to rule them all

I’ll now use the Rainbow of Death to show how the Testing Grouplet eventually brought that Total Product across the Chasm.

[Note that the following steps are animated in the actual presentation, filling in the Rainbow of Death graphic one block at a time.]

-

Intervene + Empower: Of course there were already teams working to empower developers by improving development tools and infrastructure. But as you can see, there’s still a large gap between delivering tools and helping people use them well.

-

Mentor: That’s where the Testing Grouplet stepped in.

-

Inform: We started by training new hires, writing “Codelab” online training modules, hosting Tech Talks, and giving out tons of free books. But we noticed people weren’t necessarily reading those books, or otherwise applying the knowledge we were sharing. So we transformed from a “book club” to an “activist group.”12

-

Inspire: We shared the Google Web Server success story…

-

Validate: …then distilled that experience into the Test Certified roadmap program. This program was comprised of three levels containing several tasks each. It removed friction and pressure for teams by providing a starting point and path for focusing on one improvement at a time.

-

Mentor: We also offered volunteer “Mentors” to guide teams through the process and celebrate success…

-

Inspire: …and physical, glowing “build orbs” to monitor their build status.

-

Intervene: We eventually built the Test Mercenaries internal consulting team to work with more challenging projects on climbing the “TC Ladder.”

-

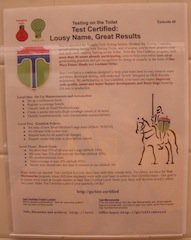

Inform: And our biggest hit was our Testing on the Toilet newsletter, appearing weekly in every company bathroom.

-

Inspire: We eventually focused on getting every team to operate at Test Certified Level Three, whether they were officially enrolled or not.

-

Inspire: All of this was punctuated by four “Fixits,” companywide events to address “important but not urgent” issues. Our Fixits inspired people to write and fix tests…

-

Empower: …to adopt new build tools,13 and finally…

-

Empower: …to adopt the Test Automation Platform continuous integration system.

These efforts made quality work and its impact more visible than it had been. This helped people write better tests, adopt better testing practices and strategies, drastically improve build and test times, reduce bugs, and increase productivity. But perhaps the most visible result was scalability of the organization.

Google: Testing Grouplet results

2015, R. Potvin, Why Google Stores Bills. of LoC in a Single Repo

Rachel Potvin shared the following results in her @Scale 2015 talk, “Why Google Stores Billions of Lines of Code in a Single Repository.” They may seem quaint to Googlers today, but they speak to the Testing Grouplet’s enduring impact five years after the TAP Fixit.

- 15 million LoC in 250K files changed by humans per week

- 15K commits by humans, 30K commits by automated systems per day

- 800K/second peak file requests

Of course, the Testing Grouplet isn’t responsible for all of this; Rachel’s talk describes an entire ecosystem of tools and practices. Even so, she states very clearly that:

- “TAP is our automated test infrastructure, without which this model would completely fall apart.” (13m:36s)

Also, it may amuse you to know that Testing on the Toilet, started in 2006, continues to this day!14

original image source

This isn’t a recent episode (it’s from around 2007, as I recall), but it’s from my Testing on the Toilet blog post. I also just happened to write this one, which touches on Test Certified and the Test Mercenaries as well.

One more time…

After the Testing Grouplet succeeded, I worked on websearch indexing for a couple of years. Then I burned out, dropped out, and tried the music thing again. It obviously didn’t really work out, or I wouldn’t be speaking to you now.

Berklee College of Music, 2013.


Apple’s goto fail

Finding More Than One Worm in the Apple, CACM, July 2014

My descent back into the tech industry began in February 2014, thanks to Apple’s famous “goto fail” bug.

| Requirement | Apply algorithm multiple times |
|---|---|
| Assumption | Short algorithms safe to copy |
| Reality | Copies may not stay identical |
| Outcome | One of six copies had a bug |
| Impact | Billions of devices |

Requirement: Apple had to update part of its open source Secure Transport component, which applied the same algorithm in six places.

Assumption: The developers apparently assumed that this short, ten-line algorithm was safe to copy in its entirety, instead of making it a function.

Reality: One problem with duplication is that the copies may not remain identical.

Outcome: As it so happened, one of the six copies of this algorithm picked up an extra “goto” statement that short-circuited a security handshake.

Impact: Once it was discovered and patched, Apple had to push an emergency update to billions of devices. It’s unknown whether it was ever exploited.

The complexity produced by copying and pasting nearly-but-not-quite-identical code yielded poor quality that masked a horrific defect. My article “Finding More Than One Worm in the Apple” explains how this bug could’ve been caught, or prevented, by a unit test.
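As a loose analogy only, and not Apple’s actual C code, the following Python sketch shows the shape of the problem. One shared function runs a series of checks, while a hypothetical pasted copy has drifted so that it returns before the final check ever runs. All names here are invented for illustration.

```python
from typing import Callable, Sequence

Check = Callable[[], int]  # returns 0 on success, a nonzero error code on failure


def run_checks(checks: Sequence[Check]) -> int:
    """Shared implementation: run every check, stopping at the first failure."""
    for check in checks:
        err = check()
        if err != 0:
            return err
    return 0


def run_checks_drifted_copy(checks: Sequence[Check]) -> int:
    """A pasted copy that drifted: it returns before the final check runs."""
    err = 0
    for index, check in enumerate(checks):
        if index == len(checks) - 1:
            return err  # stray early exit: reports success without verifying
        err = check()
        if err != 0:
            return err
    return err


def passing() -> int:
    return 0


def failing() -> int:
    return 1


def detects_bad_final_check(implementation: Callable[[Sequence[Check]], int]) -> bool:
    """Unit-test style probe: a failing final check must produce an error."""
    return implementation([passing, passing, failing]) != 0


print(detects_bad_final_check(run_checks))               # True
print(detects_bad_final_check(run_checks_drifted_copy))  # False
```

Keeping a single shared function means one small test covers every call site. With six pasted copies, each copy would need its own test to catch this kind of drift.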

OpenSSL’s Heartbleed

Goto Fail, Heartbleed, and Unit Testing Culture, May 2014

| Requirement | Echo message from request |
|---|---|
| Assumption | User-supplied length is valid |
| Reality | Actual message may be empty |
| Outcome | Server returns arbitrary data |
| Impact | Countless HTTPS servers |

Requirement: In April 2014, OpenSSL had to update its “heartbeat” feature, which echoed a message supplied by a user request.

Assumption: The code assumed that the user-supplied message length matched the actual message length.

Reality: In fact, the message could be completely empty.

Outcome: In that case, the server would hand back however many bytes of its own memory the user requested, including secret key data.

Impact: Countless HTTPS servers had to be patched. It’s unknown whether it was ever exploited.

My article “Goto Fail, Heartbleed, and Unit Testing Culture” explains how this bug could’ve been caught, or prevented, by a unit test. It describes how the absence of a rigorous testing culture allowed a fundamentally flawed assumption to endanger the privacy and safety of millions. It also shows how to challenge such fundamental assumptions and to prevent them from compromising complex systems through unit testing specifically.
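Here is a simplified Python model of that flawed assumption, not the OpenSSL code itself. Python slices can’t read past the end of a buffer, so the sketch models server memory as a single byte string in which the request payload sits directly in front of unrelated secret data. The function names and memory layout are hypothetical.

```python
def make_memory(payload: bytes, secret: bytes) -> bytes:
    """Model process memory: the request payload followed by unrelated secrets."""
    return payload + secret


def heartbeat_unchecked(memory: bytes, claimed_length: int) -> bytes:
    """Trusts the claimed length, so it can echo bytes beyond the payload."""
    return memory[:claimed_length]


def heartbeat_checked(memory: bytes, payload_length: int, claimed_length: int) -> bytes:
    """Discards requests whose claimed length exceeds the actual payload."""
    if claimed_length > payload_length:
        return b""  # malformed request: echo nothing
    return memory[:claimed_length]


# An empty payload with a claimed length of 6 leaks the neighboring secret.
memory = make_memory(b"", b"SECRET")
print(heartbeat_unchecked(memory, 6))   # b'SECRET'
print(heartbeat_checked(memory, 0, 6))  # b''
```

A unit test that sends an empty payload with a nonzero claimed length exercises exactly the case the original assumption ignored.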

Apple: Quality Culture Initiative

2018-present

Shortly after that, I was lured back into technology and eventually ended up at Apple in November 2018, which I left in November 2022. At Apple, I joined forces with a few others15 to start the Quality Culture Initiative, another volunteer group inspired by the Testing Grouplet.

- Rapid growth, hiring the “best of the best,” build/test tools not scaling

When I joined Apple in 2018, the company was growing fast, and we knew we were “the best of the best.” However, our build and testing tools and infrastructure weren’t keeping up.

- Widespread automated and manual testing, but…

There was a strong testing culture, but not around unit testing.

- “Testing like a user would” often considered most important

With so much emphasis on the end user experience, many believed that “testing like a user would” was the most important kind of testing.

- Tests often large, UI-driven, expensive, slow, flaky, and ineffective

As a result, most tests were user interface driven, requiring full application and system builds on real devices. Since writing smaller tests wasn’t often considered, this led to a proliferation of large, expensive, slow, unreliable, and ineffective tests, generating waste and risk.

- “We’re the best” syndrome, deadline pressure

Rather than imposter syndrome, there was a strong sense that we were already the best.

- (Mostly) smart people who hadn’t seen a different way

This led to a lot of (mostly) smart people suffering because not enough of them even knew that better methods of improving quality existed.

The End of the Rainbow

Too much of a good thing, way too soon

In the beginning, I made the mistake of thinking the Rainbow of Death could help us accelerate adoption. I kept trying to use it as an answer key. I’d expect these “smart people” to “get it,” shortcut the exploration phase, get straight to implementation, and shave years off the process. However, I eventually realized that it’s too complicated a device to apply at the beginning of the change process.16

Instigating Culture Change

Essential needs an internal community must support

So instead, we focused on these essential needs to simplify our initial efforts:17

- Individual Skill Development

- Team/Organizational Alignment

- Quality Work/Results Visibility

Each part of the cycle gains momentum from the others,18 but it’s important to focus on completing one effort before launching the next.

Focus and Simplify

Build a solid foundation before launching multiple programs

Looking back, this is how the Testing Grouplet built up its efforts one step at a time, over time. We did try many things, some in parallel, but we tended to establish one major program at a time before focusing on establishing another.19

At Apple, I started off trying to use the Rainbow of Death to get too many projects started at once. We didn’t make much progress for about a year.20 Once I realized my mistake and confessed it to the Quality Culture Initiative, everyone agreed that we needed to focus and simplify our efforts.

- Skill Development: Complete training curriculum and volunteer training staff

First, we launched a complete training curriculum with an all-volunteer training staff.

- Alignment/Visibility: Internal podcast focused on producing regular episodes

Our internal podcast team then got serious about publishing episodes more regularly.

- Alignment/Visibility: Quality Quest in one org, then spread to others via QCI

While I was focused on those programs, another core QCI member established the QCI’s version of Test Certified, Quality Quest, in his organization. We then merged Quality Quest back into the QCI mainstream, allowing it to spread to other organizations.

After that, we began experimenting again with other projects, some sticking, some not so much. Whenever a project seemed to stall, we’d invoke our “focus and simplify” mantra and pour that focus into more productive areas.

Apple: Quality Culture Initiative results

QCI activity as of November 2022—internal results confidential

It’s too early for the QCI to declare victory, and specific results to date are confidential. However, I can broadly describe the state of the QCI’s efforts by the time I left Apple in November 2022.

- Training: 16 courses, ~40 volunteer trainers, ~360 sessions, ~6100 check-ins, ~3200 unique individuals

Our training program was wildly successful, with sixteen courses and dozens of volunteer trainers helping thousands of attendees improve their coding and testing capabilities.

- Internal podcast: 45 episodes and 500+ subscribers

Our podcast series gave a voice to people of various roles from various organizations, helping drive a rich software quality conversation across Apple.

- Quality Quest roadmap: ~80 teams, ~20 volunteer guides

Our Quality Quest roadmap, directly inspired by Test Certified, started helping teams across Apple improve their quality practices and outcomes.

- QCI Ambassadors: 6 organizations started, 6 on the way

QCI Ambassadors now use these resources to help their organizations apply QCI principles, practices, and programs to achieve their quality goals.

- QCI Roadshow: over 50 presentations

The QCI Roadshow helps introduce QCI concepts and programs directly to groups across the company.

- QCI Summit: ~50 recorded sessions, ~60 presenters, ~850 virtual attendees

Our QCI Summit event recruited presenters from across Apple to make their quality work and impact visible. We saw how QCI principles applied to operating systems and services, applications, frontends and backends, machine learning, internal IT, and development infrastructure.

What’s in a name?

What we realized three years after choosing it

One nice feature about the name “Quality Culture Initiative” that we didn’t realize for three years was how it encoded the total Software Quality solution:

- Quality is the outcome we’re working to achieve, but as I’ll explain, achieving lasting improvements requires influencing the…

- Culture. Culture, however, is the result of complex interactions between individuals over time. Any effective attempt at influencing culture rests upon systems thinking, followed by taking…

- Initiative to secure widespread buy-in for systemic changes. Selling a vision for systemic improvement and supporting people in pursuit of that vision requires leadership.

There’s a lot to unpack when it comes to leading a culture change to make software quality visible.

If you end up itching for more information than I can provide during our time today…

For a lot more detail:

mike-bland.com/making-software-quality-visible

…follow this link to view the slides and script. Both have extensive footnotes that go into a lot more detail.

Skills Development, Alignment, and Visibility

The objective of individual skill development, team and organizational alignment, and visibility of quality work and its results is to help everyone make better choices.

Quality begins with individual choices.

Quality begins with the choices each of us makes as individuals throughout our day.

Awareness of principles and practices improves our choices.

Awareness of sound quality principles and practices improves the quality of these choices.

Common language makes principles and practices visible—improving everyone’s choices.

Developing a common language makes these principles and practices visible, so we can show them to one another, helping raise everyone’s quality game.

Individual Skill Acquisition

Help individuals incorporate principles, practices, language

Therefore, helping individuals acquire new knowledge, language, and skills is essential to improving software quality.

- Training, documentation, internal media, mentorship, sharing examples

We can offer training, documentation, and other internal media to spread awareness. We can also offer direct mentorship or share examples from our own experience to help others learn.

While QA testers, project managers, executives, and other stakeholders should be included in this process, my specialty is focusing on developers. Straightforward programming errors comprise the vast majority of quality issues; they’re also generally the most preventable and easily fixable. Helping developers write high quality code and tests is the fastest, cheapest, and most sustainable way to catch, resolve, and even prevent most programming errors.

Here I’ll summarize a few key principles and practices to help developers do exactly that.

- Testable code/architecture is maintainable: tests add design pressure, enable continuous refactoring; use code coverage as a tool, not a goal

Designing code for testability, given proper guidance on principles and techniques, adds design pressure that yields higher quality code in general. Having good tests then enables constant improvements to code quality through continuous refactoring, instead of stopping the world for complex, risky overhauls or rewrites.21 Good tests also enable developers to use code coverage as a tool while refactoring, helping ensure new and improved code replaces the previous code.22

- Stop copy/pasting code; send several small code reviews, not one big one

Two common habits that contribute to worse code quality are duplicating code23 and submitting large changes for review.24 These habits make code difficult to read, test, review, and understand, which hides bugs and makes them difficult to find and fix after they’ve shipped. Helping people write testable code also helps people break these costly bad habits.

- Tests should be designed to fail: naming and organization can clarify intent and cause of failure; use Arrange-Act-Assert (a.k.a. Given-When-Then)

The goal of testing isn’t to make sure tests always pass no matter what. The goal is to write tests that let us know, reliably and accurately, when our expectations of the code’s behavior differ from reality. Therefore, we should apply as much care to the design, naming, and organization of our tests as we do to our production code.25 Merely showing people the immediately graspable Arrange-Act-Assert (or Given-When-Then) pattern can be a profound revelation that changes their perspective forever.

- Interfaces/seams enable composition, dependency breaking w/ test doubles

Of course, many of us start out in legacy code bases with few tests, if any.26 So we also need to teach how to make safe changes to existing code that enable us to begin improving code quality and adding tests. Michael Feathers’s Working Effectively with Legacy Code is the seminal tome on this subject, showing how to gently break dependencies to introduce seams. “Seams” are points at which we introduce abstract interfaces that enable test doubles to stand in for our dependencies, making tests faster and more reliable.27
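To make the last two points concrete, here is a minimal Python sketch of a seam, a test double, and a test laid out as Arrange-Act-Assert. The `Clock`, `Greeter`, and `FixedClock` names are hypothetical, invented only for this illustration, and the test names are just one possible convention.

```python
import unittest
from typing import Protocol


class Clock(Protocol):
    """The seam: production code depends on this interface, not on a real clock."""

    def now_hour(self) -> int: ...


class Greeter:
    """Production code that receives its dependency through the seam."""

    def __init__(self, clock: Clock) -> None:
        self._clock = clock

    def greeting(self) -> str:
        return "Good morning" if self._clock.now_hour() < 12 else "Good afternoon"


class FixedClock:
    """Test double: always reports the hour it was constructed with."""

    def __init__(self, hour: int) -> None:
        self._hour = hour

    def now_hour(self) -> int:
        return self._hour


class GreeterTest(unittest.TestCase):
    def test_greeting_is_morning_before_noon(self) -> None:
        # Arrange
        greeter = Greeter(FixedClock(hour=9))
        # Act
        result = greeter.greeting()
        # Assert
        self.assertEqual("Good morning", result)

    def test_greeting_is_afternoon_from_noon_onward(self) -> None:
        # Arrange
        greeter = Greeter(FixedClock(hour=12))
        # Act
        result = greeter.greeting()
        # Assert
        self.assertEqual("Good afternoon", result)
```

Saved to a file, these tests run with `python -m unittest`. Because `FixedClock` replaces the real time source, the tests stay fast and give the same result at any time of day, and each test name states the expectation that failed.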

Speaking of interfaces, Scott Meyers, of Effective C++ fame, gave perhaps the best design advice of all for writing testable, maintainable, understandable code in general:

“Make interfaces easy to use correctly and hard to use incorrectly.”

To propose a slight update to make it more concrete:

“Make interfaces easy to use correctly and hard to use incorrectly—like an electrical outlet.”

—With apologies to Scott Meyers, The Most Important Design Guideline?

I took this picture of an electrical outlet myself, because I couldn’t find a

Of course, it’s not impossible to misuse an electrical outlet, but it’s a common, wildly successful example that people use correctly most of the time.28 Making software that easy to use or change correctly and as hard to do so incorrectly may not always be possible—but we can always try.
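
One way to picture the guideline in code is the sketch below. It contrasts a function that is easy to call incorrectly with one whose interface rejects misuse. The names (`schedule_retry_v1`, `RetryPolicy`, `schedule_retry`) and the retry scenario itself are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List


def schedule_retry_v1(delay, attempts):
    """Easy to misuse: which argument comes first, and in what units?"""
    return [delay * (2 ** i) for i in range(attempts)]


@dataclass(frozen=True)
class RetryPolicy:
    """Validated at construction, so an invalid policy can't exist."""

    initial_delay_seconds: float
    max_attempts: int

    def __post_init__(self) -> None:
        if self.initial_delay_seconds <= 0:
            raise ValueError("initial_delay_seconds must be positive")
        if self.max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")


def schedule_retry(*, policy: RetryPolicy) -> List[float]:
    """Keyword-only argument and named fields make call sites self-describing."""
    return [
        policy.initial_delay_seconds * (2 ** i)
        for i in range(policy.max_attempts)
    ]


policy = RetryPolicy(initial_delay_seconds=0.5, max_attempts=3)
print(schedule_retry(policy=policy))  # [0.5, 1.0, 2.0]
```

Like the outlet, the second version can still be misused, but the obvious mistakes (swapped arguments, wrong units, negative values) either fail immediately or are visible at the call site.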

Team/Organizational Alignment

Get everyone speaking the same language

Living up to that standard is a lot easier when the people you work with also consider it a priority.29 Here’s how to create the cultural space necessary for people to apply successfully the new insights, skills, and language we’ve discussed.

-

Internal media, roadmap programs, presentations, advocates

Use internal media like blogs and newsletters to start a conversation around software quality and to start developing a common language. Roadmap programs create a framework for that conversation by outlining specific improvements teams can adopt. Team and organizational presentations can rely on the quality language and roadmap to inspire an audience and make the concepts more memorable. Software quality advocates can then use all these mechanisms to drive progress. -

Understanding between devs, QA, project managers/owners, executives

Articulate how everyone plays a role in improving software quality, and get them all communicating with one another! An executive sponsor or project manager may not need to understand the fine details of dependency injection and test doubles. However, if they understand the Test Pyramid, they can hold developers and QA accountable for improving quality by implementing a balanced, reliable, efficient testing strategy. -

Focus and simplify! (Don’t swallow the elephant—leave some for later!)

This is a lot of work that will take a long time. Rather than getting overwhelmed or spreading oneself too thin trying to swallow the entire elephant, focus and simplify by delivering one piece at a time. -

Every earlier success lays a foundation and creates space for future effort

Delivering the first piece creates more space to deliver the second piece, then the third, and so on. -

Be agile—make plans, but recalibrate often

Of course, this process need not be strictly linear. It’s important to be clear about priorities and delivering pieces over time, but make adjustments as everyone gains experience and the conversation unfolds.30 -

Absorb influences like a musician/band—then create your own voice/style

Ultimately the process is a lot like helping one another grow as musicians. It’s not about everyone doing exactly as they’re told. Everyone should be absorbing ideas, trying them out, gaining experience with them, and ultimately making them their own, part of their individual style. Then everyone can share what they’ve learned and discovered, enriching all of us further by adding their own voice to the ongoing conversation.

Roadmap Programs

Guidelines, language, conversation framework, examples

Let’s examine the value of roadmap programs more closely. They can provide the language and conversational framework for the entire program, as well as other powerful features.

-

Define beginning, middle, and end—break mental barrier of where to start

One of the most important features a roadmap provides is helping teams focus on getting started. It can help overcome the mental barrier of not knowing where to begin by helping to visualize the beginning, middle, and end of the journey. For this reason, I recommend organizing roadmaps into three phases, or “levels,” with four or five steps each. This helps break “analysis paralysis” by narrowing the options at each stage, and providing a rough order in which to implement them. -

Align dev, QA, project management, management, executives

A roadmap can help produce alignment across the various stakeholders in a project, making clear what will be done, by whom, and in what order. Shared language and collective visibility encourage common understanding, open communication, and accountability.

-

Recommend common solutions, but don’t force a prescription

A good roadmap won’t force specific solutions on every team, but will provide clear guidelines and concrete recommendations. Teams are free to find their own way to satisfy roadmap requirements, but most teams stand to benefit from recommendations based on others’ experience. -

Give space for conversation and experience to shape the way

The point of a roadmap isn’t to guarantee a certain outcome, or to constrain variations or growth. It’s to help teams communicate their intentions, coordinate their efforts, and adjust as necessary based on what they learn together throughout the process. -

Provide a framework to make effort and results visible, including Vital Signs

A roadmap helps teams focus, align, communicate, learn, and accomplish shared goals by making software quality work and its impact visible. It helps people talk about quality by giving it a local habitation and a name. Then it provides guidelines and recommendations on implementing Vital Signs that make quality efforts and outcomes as tangible as developing and shipping features. -

Can help other teams learn by example and follow the same path

Finally, a roadmap that makes software quality work and its results visible to one team can also make it visible to others. This visibility can inspire other teams to follow the same roadmap and learn from one another’s example. Once a critical mass of teams adopts a common roadmap, though the details may differ from team to team, the broader organizational culture evolves.

To continue the musical metaphor, roadmaps should act mainly as lead sheets that outline a tune’s melody and chords, not as note for note transcriptions. They provide a structure for exploring a creative space in harmony with other players, but leave a lot of room for interpretation and creativity. At the same time, studying transcriptions and recordings to learn the details of what worked for others is important to developing one’s own creativity.

Test Certified Roadmap

Foundation for QCI’s Quality Quest

The Testing Grouplet’s Test Certified roadmap program was extremely effective at driving automated testing adoption and software quality improvements at Google. This image shows the program exactly as it was from about 2007 to 2011. It was small enough to fit comfortably, with further explanation, in a single Testing on the Toilet episode.

We designed the program to fit well with Google’s Objectives and Key Results (OKRs). It also sparked a very effective partnership with QA staff, who championed Test Certified as a means of collaborating more effectively with their client teams.31

The three levels are:

- Set Up Measurements and Automation: These tasks are focused on setting up build and test infrastructure to provide visibility and control, and are fairly quick to accomplish.

- Establish Policies (and early goals): With the infrastructure in place, the team can commit to a review, test, and submission policy to begin making improvements. Early goals, reachable within a month or two, provide just enough pressure to motivate a team-wide commitment to the policy.

- Reach (long-term) Goals: On this foundation of infrastructure, policy, and early wins, the team is in a position to stretch for longer term goals. These goals should be achievable within six months or more.

Quality Quest

…and Roadmap development

At Apple, I literally copied these criteria into a wiki page as the first draft of Quality Quest.32 I then asked QCI members what we’d need to change to fit Apple’s needs, and we landed upon:

- More integration with QA/manual/system testing, with a focus on striking a good balance with smaller tests.

- No specific percentages of test sizes, which were the most hotly debated part of Test Certified (other than the name). Instead, we specified that teams should set their own goals in Levels Two and Three.

- “Vital Signs” as a Level Three component, to encourage conversation and collaboration based on a suite of quality signals designed by all project stakeholders.

These programs did take time to design, to try out with early adopters, to incorporate feedback into, and to start spreading further. However, the time it took was a feature, not a bug. We were securing buy-in, avoiding potential resistance from people feeling that we were coercing them to conform to requirements they neither agreed with nor understood.

Also, while Quality Quest’s structure and goals were identical to Test Certified’s, we took liberties to adapt the program to our current situation. Much like writing a software program or system, we took the same basic structure and concepts and adapted them to our specific needs. Or, we took a good song, made our own arrangement, and sang it in our own voice. Conversation, collaboration, patience, and persistence are essential to the process of developing an effective, sustainable, and successful in-house improvement program.

Quality Work and Results Visibility

Storytelling is essential to spreading language, leading change.

Great storytelling is essential to providing meaningful insight into quality outcomes and the work necessary to achieve them.33 This can happen throughout the process, but sharing outcomes, methods, and lessons learned is critical to driving adoption of improved practices and making them stick.

-

Media, roadmaps, presentations, events

In fact, good stories can drive alignment via the same media we discussed earlier. Organizing a special event every so often can generate a critical mass of focus and energy towards sharing stories from across the company. Such events can raise the companywide software quality improvement effort to a new plateau. They also help prove that common principles and practices apply across projects, no matter the tech stack or domain, refuting the Snowflake Fallacy.

-

Make a strong point with a strong narrative arc

The key to telling a good story is adhering to a strong narrative arc.34 Here are three essential elements:

-

Show the results up front—share your Vital Signs!

First, don’t bury the lede!35 We’re not trying to hook people on solving a mystery, we’re trying to hook people on the value of what we’re about to share. This holds whether you’ve already achieved compelling outcomes or if you’re still in the middle of the story and haven’t yet achieved your goals. In the latter case, you can still paint a compelling picture of what you’re trying to achieve, and why. Either way, having meaningful Vital Signs in place can make telling this part of the story relatively straightforward.

-

Describe the work done to achieve them, and why

Next, tell them what you had to do (or are trying to do now) to achieve these outcomes and what you learned while doing it. Don’t just give a laundry list of details, however.

-

Practices need principles! The mindset is more portable than the details.

Practices need principles.36 Help people understand why you applied specific practices—show how they demonstrate the mindset37 that’s ultimately necessary to improve software quality. Technical details can be useful to make the principles concrete, but they’re ultimately of secondary importance to having the right mindset regardless of the technology.

-

Make a call to action to apply the information

Finally, give people something to do with all the information you just shared. Tell them how they can follow up with you or others, via email or Slack or whatever. Provide links to documentation or other resources where they can learn more about how to apply the same tools and methods on their own.

-

Building a Software Quality Culture

Cultivating resources to support buy-in

| Resources | Skills | Alignment | Visibility |
|---|---|---|---|
| Training | ✅ | | |
| Documentation | ✅ | | |
| Internal media (e.g., blogs, newsletters) | ✅ | ✅ | ✅ |
| Roadmap program | ✅ | ✅ | |
| Vision/strategy presentations | ✅ | ✅ | |
| Mentors/advocates | ✅ | ✅ | ✅ |
| Internal events | ✅ | ✅ | ✅ |

Here we can see how different resources serve to fulfill one or more of the essential needs for organizational change. There’s no specific order in which to build up these resources—it’s up to you to decide where to focus at each stage in your journey.

Mapping the Testing Grouplet and Quality Culture Initiative activities onto this table reveals how the same basic resources apply across vastly different company cultures.

Google Testing Grouplet

2005-2010

| Resources | Examples |
|---|---|
| Training | Noogler (New Googler) Training, Codelabs |
| Documentation | Internal wiki |
| Internal media (e.g., blogs, newsletters) | Testing on the Toilet |
| Roadmap program | Test Certified |
| Vision/strategy presentations | Google Web Server story |
| Mentors/advocates | Test Mercenaries |
| Internal events | Two Testing Fixits, Revolution Fixit, TAP Fixit |

At Google, we provided introductory unit testing training and Codelab sessions to new employees, or “Nooglers.” We made extensive use of the internal wiki, and of course Testing on the Toilet was our breakthrough documentation hit. TotT helped people to participate in the Test Certified program, which was based on the experience of the Google Web Server team. Then we scaled up our efforts by building the Test Mercenaries team and hosting four companywide Fixits over the years.

Apple Quality Culture Initiative

2018-present

| Resources | Examples |
|---|---|
| Training | 16-course curriculum for dev, QA, Project Managers |
| Documentation | Confluence |
| Internal media (e.g., blogs, newsletters) | Quality Blog, internal podcast |
| Roadmap program | Quality Quest |
| Vision/strategy presentations | QCI Roadshow, official internal presentation series |
| Mentors/advocates | QCI Ambassadors |
| Internal events | QCI Summit |

At Apple, we knew that posting flyers in Apple Park bathrooms wouldn’t fly, but our extensive Training curriculum was wildly successful. We also made extensive use of our internal Confluence wiki, maintained a Quality Blog, and had a ton of fun producing our own internal podcast. Quality Quest was directly inspired by Test Certified, but adapted by the QCI community to better serve Apple’s needs.38 We promoted our resources via dozens of QCI Roadshow presentations for specific teams and groups, as well as a few official, high visibility internal presentations. We recruited QCI Ambassadors from different organizations to help translate general QCI resources and principles to fit the needs of specific orgs. Finally, we organized a QCI Summit to promote software quality stories from across the company, demonstrating how the Quality Mindset applies regardless of domain.

This comparison raises an important point that I’ve made in response to a common question:

“What are the important differences between companies?”

People usually ask, “What are the differences between companies?” with the assumption or implication that the differences are of key importance. Reflecting upon this just before leaving Apple, I realized…

The superficial details may differ…

…of course there are obvious differences. Google’s internal culture was much more open by default, and people back in the day had twenty percent of their time to experiment internally. Apple’s internal culture isn’t quite as open, and people are held accountable to tight deadlines. Even so…

…but they’re more alike than different.

…the companies are more alike than they might first seem. Both are large organizations composed of the same stuff, namely humans striving for both individual and collective achievement. Much like code from different projects, at the molecular level, they’re more alike than they are different.

Over time, I’ve come to appreciate these similarities as being ultimately more important than the differences. The same essential issues emerge, and the same essential solutions apply, differing only in their surface appearances. So no matter what project you’re on, or what company you work for, everybody everywhere is dealing with the same core problems. Not even the biggest of companies is immune, or otherwise special or perfect.

The Test Pyramid and Vital Signs

Two important concepts for making software quality itself actually visible at a fundamental level are The Test Pyramid and Vital Signs.

First, let’s understand the specific problems we intend to solve by making software quality visible and improving it in an efficient, sustainable way.

Working back from the desired experience

Inspired by Steve Jobs Insult Response

In this famous Steve Jobs video, he explains the need to work backward from the customer experience, not forward from the technology. So let’s compare the experience we want ourselves and others to have with our software to the experience many of us may have today.

| What we want | What we have |
|---|---|
| Delight | Suffering |
| Efficiency | Waste |
| Confidence | Risk |
| Clarity | Complexity |

-

What we want

We want to experience Delight from using and working on high quality software,39 which largely results from the Efficiency high quality software enables. Efficiency comes from the Confidence that the software is in good shape, which arises from the Clarity the developers have about system behavior. -

What we have

However, we often experience Suffering from using or working on low quality software, reflecting a Waste of excess time and energy spent dealing with it. This Waste is the result of unmanaged Risk leading to lots of bugs and unplanned work. Bugs, unplanned work, Risk, and fear take over when the system’s Complexity makes it difficult for developers to fully understand the effect of new changes.

Difficulty in understanding changes produces drag— i.e., Technical debt.

Difficulty in understanding how new changes could affect the system is the telltale sign of low internal quality, which drags down overall quality and productivity. The difference between actual and potential productivity, relative to internal quality, is technical debt.

This contributes to the common scenario of a crisis emerging…

Replace heroics with a Chain Reaction!

…that requires technical heroics and personal sacrifice to avert catastrophe. To get a handle on avoiding such situations, we need to create the conditions for a positive Chain Reaction.

By creating and maintaining the right conditions over time, we can achieve our desired outcomes without stress and heroics.

The main obstacle to replacing heroics with a Chain Reaction isn’t technology…

The challenge is belief—not technology

A little awareness goes a long way

…it’s an absence of awareness or belief that a better way exists.40,41

-

Many of these problems have been solved for decades

Despite the fact that many quality and testing problems have been solved for decades…42 -

Many just haven’t seen the solutions, or seen them done well…

…many still haven’t seen those solutions, or seen them done well.43 -

The right way can seem easy and obvious—after someone shows you!

The good news is that these solutions can seem easy and obvious—after they’ve been clearly explained and demonstrated.44 -

What does the right way look like?

So how do we get started showing people what the right way to improve software quality looks like?

The Test Pyramid

A balance of tests of different sizes for different purposes

We’ll start with the Test Pyramid model,45,46 which represents a balance of tests of different sizes for different purposes.

Realizing that tests can come in more than one size is often a major revelation to people who haven’t yet been exposed to the concept.47 It’s not a perfect model—no model is—but it’s an effective tool for pulling people into a productive conversation about testing strategies for the first time.48

(The same information as above, but in a scrollable HTML table:)

| Size | Scope | Ownership | Code visibility | Dependencies | Control/Reliability/Independence | Resource usage/Maint. cost | Speed/Feedback loop | Confidence |
|---|---|---|---|---|---|---|---|---|
| Large (System, E2E) | Entire system | QA, some developers | Details not visible | All | Low | High | Slow | Entire system |
| Medium (Integration) | Components, services | Developers, some QA | Some details visible | As few as possible | Medium | Medium | Faster | Contract between components |
| Small (Unit) | Functions, classes | Developers | All details visible | Few to none | High | Low | Fastest | Low level details, individual changes |

The Test Pyramid helps us understand how different kinds of tests give us confidence in different levels and properties of the system.49 It can also help us break the habit of writing large, expensive, flaky tests by default.50

-

Small tests are unit tests that validate only a few functions or classes at a time with very few dependencies, if any. They often use test doubles51 in place of production dependencies to control the environment, making the tests very fast, independent, reliable, and cheap to maintain. Their tight feedback loop52 enables developers to detect and repair problems very quickly that would be more difficult and expensive to detect with larger tests. They can also be run in local and virtualized environments and can be parallelized.

-

Medium tests are integration tests that validate contracts and interactions with external dependencies or larger internal components of the system. While not as fast or cheap as small tests, by focusing on only a few dependencies, developers or QA can still run them somewhat frequently. They detect specific integration problems and unexpected external changes that small tests can’t, and can do so more quickly and cheaply than large system tests. Paired with good internal design, these tests can ensure that test doubles used in small tests remain faithful to production behavior.53

-

Large tests are full, end to end system tests, often driven through user interface automation or a REST API. They’re the slowest and most expensive tests to write, run, and maintain, and can be notoriously unreliable. For these reasons, writing large tests by default for everything is especially problematic. However, when well designed and balanced with smaller tests, they cover important use cases and user experience factors that aren’t covered by the smaller tests.

Thoughtful, balanced strategy == Reliability, efficiency

Each test size validates different properties that would be difficult or impossible to validate using other kinds of tests. Adopting a balanced testing strategy that incorporates tests of all sizes54 enables more reliable and efficient development and testing—and higher software quality, inside and out.
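
The point about medium tests keeping test doubles faithful to production behavior can be sketched as a shared “contract” test. The example below is only one possible arrangement, and all names (`InMemoryStore`, `FileStore`, `StoreContract`) are hypothetical. `FileStore` stands in for a production implementation backed by a real dependency, here the filesystem.

```python
import json
import os
import tempfile
import unittest


class InMemoryStore:
    """Test double that small tests can use instead of the real store."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class FileStore:
    """Stand-in for a production implementation with a real dependency."""

    def __init__(self, path):
        self._path = path

    def _load(self):
        if not os.path.exists(self._path):
            return {}
        with open(self._path) as f:
            return json.load(f)

    def put(self, key, value):
        data = self._load()
        data[key] = value
        with open(self._path, "w") as f:
            json.dump(data, f)

    def get(self, key):
        return self._load().get(key)


class StoreContract:
    """Shared expectations; each subclass supplies make_store()."""

    def test_get_returns_value_previously_put(self):
        store = self.make_store()
        store.put("answer", "42")
        self.assertEqual("42", store.get("answer"))

    def test_get_returns_none_for_missing_key(self):
        store = self.make_store()
        self.assertIsNone(store.get("missing"))


class InMemoryStoreTest(StoreContract, unittest.TestCase):
    """Small: no external dependencies."""

    def make_store(self):
        return InMemoryStore()


class FileStoreTest(StoreContract, unittest.TestCase):
    """Medium: exercises the real filesystem dependency."""

    def make_store(self):
        tmpdir = tempfile.TemporaryDirectory()
        self.addCleanup(tmpdir.cleanup)
        return FileStore(os.path.join(tmpdir.name, "store.json"))
```

Because both test classes run the same contract, a change that makes the double and the real implementation disagree fails one of them. Small tests that rely on `InMemoryStore` can then run quickly without drifting silently from production behavior.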

Inverted Test Pyramid

Many larger tests, few smaller tests

Of course, many projects have a testing strategy that resembles an inverted Test Pyramid, with too many larger tests and not enough smaller tests.

This leads to a number of common problems:

-

Tests tend to be larger, slower, less reliable

The tests are slower and less reliable than they would be if the strategy relied more on smaller tests.

-

Broad scope makes failures difficult to diagnose

Because large tests execute so much code, it might not be easy to tell what caused a failure. -

Greater context switching cost to diagnose/repair failure

That means developers have to interrupt their current work to spend significant time and effort diagnosing and fixing any failures. -

Many new changes aren’t specifically tested because “time”

Since most of the tests are large and slow, developers have an incentive to skip writing or running them because they “don’t have time.”

-

People ignore entire signal due to flakiness…

Worst of all, since large tests are more prone to be flaky,55 people will begin to ignore test failures in general. They won’t believe their changes cause any failures, since the tests were failing before—they might even be flagged as “known failures.”56 And as I mention elsewhere… -

…fostering the Normalization of Deviance

…the Space Shuttle Challenger’s O-rings suffered from “known failures” as well, cultivating the “Normalization of Deviance” that led to disaster.
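
Rather than ignoring the whole signal, a team can make flakiness itself visible. The sketch below assumes a working definition that isn’t spelled out above: a test is flaky if it both passed and failed against the same commit. The function name, the record format, and the sample data are all hypothetical.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit.

    `runs` is an iterable of (commit, test_name, passed) tuples.
    """
    outcomes = defaultdict(set)
    for commit, test_name, passed in runs:
        outcomes[(commit, test_name)].add(passed)
    return sorted(
        {name for (_, name), seen in outcomes.items() if seen == {True, False}}
    )


# Hypothetical run history
runs = [
    ("abc123", "test_login", True),
    ("abc123", "test_login", False),  # same commit, different outcome
    ("abc123", "test_math", True),
    ("def456", "test_math", False),   # different commit: possibly a real bug
]

print(find_flaky_tests(runs))  # ['test_login']
```

Separating flaky tests from genuine regressions this way keeps “known failures” from quietly becoming normal.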

Causes

Let’s go over some of the reasons behind this situation.

-

Features prioritized over internal quality/tech debt

People are often pressured to continue working on new features that are “good enough” instead of reducing technical debt. This may be especially true for organizations that set aggressive deadlines and/or demand frequent live demonstrations.57 -

“Testing like a user would” is more important

Again, if “testing like a user would” is valued more than other kinds of testing, then most tests will be large and user interface-driven. -

Reliance on more tools, QA, or infrastructure (Arms Race)

This also tends to instill the mindset that the testing strategy isn’t a problem, but that we always need more tools, infrastructure, or QA headcount. I call this the “Arms Race” mindset. -

Landing more, larger changes at once because “time”

Because the existing development and testing process is slow and inefficient, individuals try to optimize their productivity by integrating large changes at once. These changes are unlikely to receive either sufficient testing or sufficient code review, increasing the risk of bugs slipping through. It also increases the chance of large test failures that aren’t understood. The team is inclined to tolerate these failures, because there isn’t “time” to go back and redo the change the right way. -

Lack of exposure to good examples or effective advocates

As mentioned before, many people haven’t actually witnessed or experienced good testing practices before, and no one is advocating for them. This instills the belief that the current strategy and practices are the best we can come up with. -

We tend to focus on what we directly control—and what management cares about! (Groupthink)

In such high stress situations, it’s human nature to focus on doing what seems directly within our control in order to cope. Alternatively, we tend to prioritize what our management cares about, since they have leverage over our livelihood and career development. It’s hard to break out of a bad situation when feeling cornered—and too easy to succumb to Groupthink without realizing it.

So how do we break out of this corner—or help others to do so?

Quality work can be hard to see. It’s hard to value what can’t be seen—or to do much of anything about it.

We have to overcome the fundamental challenge of helping people see what internal quality looks like. We have to help developers, QA, managers, and executives care about it and to resist the Normalization of Deviance and Groupthink. We need to better show our quality work to help one another improve internal quality and break free from the Arms Race mindset.

In other words, internal quality work and its impact is a lot like The Matrix…

“Unfortunately, no one can be told what the Matrix is. You have to see it for yourself.”

—Morpheus, The Matrix

One way to start showing people The Matrix is to get buy-in on a set of…

Vital Signs

…“Vital Signs.” Vital Signs are a collection of signals designed by a team to reflect quality and productivity and to rapidly diagnose and resolve problems.58

Intent

-

Comprehensive and make sense to the team and all stakeholders.

They should be comprehensive and make sense at a high level to everyone involved in the project, regardless of role. -

Not merely metrics, goals, or data

We’re not collecting them for the sake of saying we collect them, or to hit a goal one time and declare victory.59 -

Information for repeated evaluation

We’re collecting them because we need to evaluate and understand the state of our quality and productivity over time. -

Inform decisions whether or not to act in response

These evaluations will inform decisions regarding how to maintain the health of the system at any moment.

Common elements

Some common signals include:

-

Pass/fail rate of continuous integration system

The tests should almost always pass, but failures should be meaningful and fixed immediately. -

Size, build and running time, and stability of small/medium/large test suites

The faster and more stable the tests, the fewer resources they consume, and the more valuable they are. -

Size of changes submitted for code review and review completion times

Individual changes should be relatively small, and thus easier and faster to review. -

Code coverage from small to medium-small test suites

Each small-ish test should cover only a few functions or classes, but the overall coverage of the suite should be as high as possible.60 -

Passing use cases covered by medium-large to large and manual test suites

For larger tests, we’re concerned about whether higher level contracts, use cases, or experience factors are clearly defined and satisfied before shipping. -

Number of outstanding software defects and Mean Time to Resolve

Tracking outstanding bugs is a very common and important Vital Sign. If you want to take it to the next level, you can also begin to track the Mean Time to Resolve61 these bugs. The lower the time, the healthier the system.

Most of these specific signals aim to reveal how slow or tight the feedback loops are throughout the development process.62 Even high code coverage from small tests implies that developers can make changes faster and more safely. Well scoped use cases can lead to more reliable, performant, and useful larger tests.
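
As a rough illustration, a few of these signals can be computed from data many teams already have. The sketch below makes several assumptions: the function names, the record formats, and the sample numbers are all invented, and Mean Time to Resolve is taken over resolved defects only.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional, Tuple


def pass_rate(build_results: List[bool]) -> Optional[float]:
    """Fraction of continuous integration builds that passed."""
    if not build_results:
        return None
    return sum(build_results) / len(build_results)


def mean_time_to_resolve(
    defects: List[Tuple[datetime, Optional[datetime]]]
) -> Optional[timedelta]:
    """Average of (resolved - opened), ignoring defects that are still open."""
    durations = [
        resolved - opened
        for opened, resolved in defects
        if resolved is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


# Hypothetical sample data
builds = [True, True, False, True]
change_sizes = [12, 40, 350]  # lines changed per code review
defects = [
    (datetime(2023, 9, 1), datetime(2023, 9, 3)),
    (datetime(2023, 9, 2), datetime(2023, 9, 6)),
    (datetime(2023, 9, 5), None),  # still open
]

print(pass_rate(builds))              # 0.75
print(median(change_sizes))           # 40
print(mean_time_to_resolve(defects))  # 3 days, 0:00:00
```

None of this requires special tooling. A script or spreadsheet fed by exported build, review, and bug tracker data is enough to start watching trends.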

Other potentially meaningful signals

Some other potentially meaningful signals include…

-

Static analysis findings (e.g., complexity, nesting depth, function/class sizes)

Popular source control platforms, such as GitHub, can incorporate static analysis findings directly into code reviews as well. This encourages developers to address findings before they land in a static analysis platform report.63 -

Dependency fan-out

Dependencies contribute to system and test complexity, which contribute to build and test times. Cutting unnecessary dependencies and better managing necessary ones can yield immediate, substantial savings. -

Power, performance, latency

These user experience signals aren’t caught by traditional automated tests that evaluate logical correctness, but are important to monitor. -

Anything else the team finds useful for its purposes

As long as it’s a clear signal that’s meaningful to the team, include it in the Vital Signs portfolio.
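
Dependency fan-out is one of the easier signals to compute. The sketch below assumes fan-out means the number of distinct direct and transitive dependencies of a build target, which is only one possible definition. The dependency graph and target names are hypothetical.

```python
def transitive_dependencies(graph, target):
    """Return every target reachable from `target`, excluding itself."""
    seen = set()
    stack = list(graph.get(target, ()))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, ()))
    seen.discard(target)  # guard against dependency cycles
    return seen


def fan_out(graph):
    """Map each target to its number of transitive dependencies."""
    return {
        target: len(transitive_dependencies(graph, target))
        for target in graph
    }


# Hypothetical build graph: target -> direct dependencies
graph = {
    "app": ["ui", "net"],
    "ui": ["core"],
    "net": ["core", "tls"],
    "core": [],
    "tls": [],
}

print(fan_out(graph))
# {'app': 4, 'ui': 1, 'net': 2, 'core': 0, 'tls': 0}
```

Targets with unexpectedly high counts are good candidates for a closer look, since every dependency adds to build and test time.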

Use them much like production telemetry

Treat Vital Signs like you would any production telemetry that you might already have.

-

Keep them current and make sure the team pays attention to them.

-

Clearly define acceptable levels—then achieve and maintain them.

-

Identify and respond to anomalies before urgent issues arise.

-

Encourage continuous improvement—to increase productivity and resilience.

-

Use them to tell the story of the system’s health and team culture.
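
Defining acceptable levels and spotting anomalies can start out very simply. The following sketch checks current readings against agreed ranges. The signal names, the thresholds, and the `AcceptableRange` type are all hypothetical, so each team would substitute its own.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AcceptableRange:
    """Inclusive bounds for one Vital Sign; None means unbounded."""

    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def contains(self, value: float) -> bool:
        if self.minimum is not None and value < self.minimum:
            return False
        if self.maximum is not None and value > self.maximum:
            return False
        return True


def out_of_range(
    readings: Dict[str, float], ranges: Dict[str, AcceptableRange]
) -> List[str]:
    """Return the names of signals whose reading falls outside its range."""
    return sorted(
        name
        for name, value in readings.items()
        if name in ranges and not ranges[name].contains(value)
    )


# Hypothetical levels a team might agree on
ranges = {
    "ci_pass_rate": AcceptableRange(minimum=0.95),
    "small_test_suite_seconds": AcceptableRange(maximum=120),
    "open_defects": AcceptableRange(maximum=25),
}
readings = {
    "ci_pass_rate": 0.91,
    "small_test_suite_seconds": 95,
    "open_defects": 31,
}

print(out_of_range(readings, ranges))  # ['ci_pass_rate', 'open_defects']
```

The value comes from the conversation that produces the ranges, and from someone looking at the report regularly, more than from the code itself.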

Example usage: issues, potential causes (not exhaustive!)

Here are a few hypothetical examples of how Vital Signs can help your team identify and respond to issues.

-

Builds 100% passing, high unit test coverage, but high software defects

If your builds and code coverage are in good shape, but you’re still finding bugs…

-

Maybe gaps in medium-to-large test coverage, poorly written unit tests

…it could be that you need more larger tests. Or, it could be your unit tests aren’t as good as you think, executing code for coverage but not rigorously validating the results.

-

Low software defects, but schedule slipping anyway

If you don’t have many bugs, but productivity still seems to be dragging…- Large changes, slow reviews, slow builds+tests, high dependency fan

out

…maybe people are still sending huge changes to one another for review. Or maybe your build and test times are too slow, possibly due to excess dependencies.

- Large changes, slow reviews, slow builds+tests, high dependency fan

out

-

Good, stable, fast tests, few software defects, but poor app performance

Maybe builds and tests are fine, and there are few if any bugs, but the app isn’t passing performance benchmarks.- Discover and optimize bottlenecks—easier with great testing already in

place!

In that case, your investment in quality practices has paid off! You can rigorously pursue optimizations, without the fear that you’ll unknowingly break behavior.

- Discover and optimize bottlenecks—easier with great testing already in

place!
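To illustrate the first example above, here is a small Python sketch of a test that executes code for coverage without validating the result, next to one that does validate it. The discount functions and test helpers are hypothetical, invented only for this illustration.

```python
def buggy_discount(price, percent):
    """Return `price` reduced by `percent`. Contains a deliberate bug."""
    return price - price * percent  # Bug: percent should be divided by 100.


def fixed_discount(price, percent):
    """Return `price` reduced by `percent` percent."""
    return price - price * percent / 100


def coverage_only_test(discount):
    # Executes every line of `discount`, so a coverage tool would report
    # 100%, but nothing checks the result. Any bug passes silently.
    discount(200, 10)


def validating_test(discount):
    # Same call and same coverage, but the assertion checks the behavior.
    assert discount(200, 10) == 180
```

Calling `coverage_only_test(buggy_discount)` succeeds even though the function is wrong, while `validating_test(buggy_discount)` raises an `AssertionError`. Both tests pass for `fixed_discount`, and both yield identical coverage numbers, which is why coverage alone can't tell you how good your tests are.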

Getting started, one small step at a time

Here are a few guidelines for getting started collecting Vital Signs. First and foremost…

- Don’t get hung up on having the perfect tool or automation first.

  Do not get hung up on thinking you need special tools or automation at the beginning. You may need to put some kind of tool in place if you have no way to get a particular signal. But if you can, collect the information manually for now, instead of wasting time flying blind until someone else writes your dream tool.

- Start small, collecting what you can with tools at hand, building up over time.

  You also don’t need to collect everything right now. Start collecting what you can, and plan to collect more over time.

- Focus on one goal at a time: lowest hanging fruit; biggest pain point; etc.

  As for which Vital Signs to start with, that’s totally up to you and your team. You can start with the easiest signals, or the ones focused on your biggest pain points—it doesn’t matter. Decide on a priority and focus on that first.

- Update a spreadsheet or table every week or so—manually, if necessary.

  If you don’t have an automated collection and reporting system handy, then use a humble spreadsheet or wiki table. Spend a few minutes every week updating it.

- Observe the early dynamics between the information and team practice.

  Discuss these updates with your team, and see how it begins to shift the conversation—and the team’s behavior.

- Then make a case for tool/infrastructure investment based on that evidence.

  Once you’ve got evidence of the value of these signals, then you can justify and secure an investment in automation.64
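If even a spreadsheet feels like too much ceremony, a plain CSV file that any spreadsheet program can open will do. This Python sketch appends one row of manually gathered numbers per week. The column names and the `record_week` helper are assumptions chosen for illustration; use whatever signals your team has decided to track.

```python
import csv
from datetime import date
from pathlib import Path

# Hypothetical columns; replace them with your team's chosen Vital Signs.
FIELDS = ["week_of", "build_pass_rate", "small_test_coverage", "open_defects"]


def record_week(path, week_of, **signals):
    """Append one row of signals, writing the header if the file is new."""
    path = Path(path)
    is_new = not path.exists() or path.stat().st_size == 0
    row = {"week_of": week_of.isoformat(), **signals}
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        # Signals you couldn't collect this week are left blank.
        writer.writerow(row)
```

For example, `record_week("vital_signs.csv", date(2023, 9, 4), build_pass_rate=0.97, open_defects=12)` adds a row with an empty coverage cell, which keeps the gap visible instead of hiding it.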

What Software Quality Is and Why It Matters

To advocate effectively for an investment in software quality, we need to define clearly what it is and why it’s so important.

Is High Quality Software Worth the Cost?

martinfowler.com/articles/is-quality-worth-cost.html

In May 2019, Martin Fowler published “Is High Quality Software Worth the Cost?,” a brief article describing the tradeoffs and benefits of software quality.

| Quality Type | Users | Developers |
|---|---|---|
| External | Makes happy | Keeps productive |
| Internal | Keeps happy | Makes productive |

He distinguished between:

- External quality, which obviously makes users happy. This, in turn, keeps developers productive, since they don’t need to respond to problems reported by users. Then Martin argues that…

- Internal quality helps keep users happy, by enabling developers to evolve the software easily and to resolve problems quickly. This is because high internal quality makes developers productive, since there’s less cruft and unnecessary complexity slowing them down from making changes.

Effects of quality on productivity over time

Martin also used this hypothetical graph, based on his experience, to illustrate the impact of quality tradeoffs over time.

- With Low internal quality, progress is faster at the beginning, but begins to flatten out quickly.

- With High internal quality, progress is slower at the beginning, but the investment pays off in greater productivity over time.

- The break even point between the two approaches arrives within weeks, not months.

- Though Martin’s original graph didn’t show this, the difference in productivity between low and high internal quality is one way to visualize technical debt.65

“Fast, cheap, or good: pick ~~two~~ three”

High quality software is cheaper to produce

Martin’s conclusion is that higher quality makes software cheaper to produce in the long run—that the “cost” of high quality software is negative. “Fast, cheap, or good: pick two” doesn’t hold as the system evolves. It may make sense at first to sacrifice good to get a cheaper product to market quickly. But over time, investing in “good” is necessary to continue delivering a product quickly and at a competitive cost.

Internal quality aids understanding

High quality software is cheaper because it’s easier to work with

Internal quality essentially helps developers continue to understand the system as it changes over time:66

- Fosters productivity due to the clarity of the impact of changes

  When they clearly understand the impact of their changes, they can maintain a rapid, productive pace.

- Prevents foreseeable issues, limits recovery time from others

  Understanding helps them prevent many foreseeable issues, and resolve any bugs quickly and effectively.

- Provides a buffer for the unexpected, guards against cascading failures

  These qualities help create a buffer for handling unexpected events,67 while also guarding against cascading failures.

- Your Admins/SREs will thank you! It helps them resolve prod issues faster.

  Your system administrators or SREs will be very grateful for building such resilience into your system, as it helps their response times as well.

- Counterexamples: Global supply chain shocks; Southwest Airlines snafu

  For counterexamples, recall the global supply chain shocks resulting from the COVID-19 pandemic, or the December 2022 Southwest Airlines snafu. These systems worked very efficiently in the midst of normal operating conditions. However, their intolerance for deviations from those conditions rendered them vulnerable to cascading failures.

- Quality, clarity, resilience are essential requirements of prod systems

  Consequently, internal software quality, and the clarity and resilience it enables, are essential requirements of any production software system.

Focusing on internal software quality is good for business…because it’s the right thing to do.

As mentioned, Martin Fowler’s argument is that internal software quality is good for business—it’s such a compelling argument that I brought it up first. However, he prefers making only this economic argument for quality. He asserts that appeals to professionalism are moralistic and doomed, as they imply that quality comes at a cost.68

I disagree that we should sidestep appeals to professionalism entirely, and that they’re incompatible with the economic argument. I think it’s worth exploring why professionalism matters, both because it is moral and because customers increasingly expect high quality software they can trust.

Quality without function is useless—but function without quality is dangerous.

Put more bluntly, high quality may be useless without sufficient functionality, but as we’ll see, functionality without quality can be dangerous. Professionalism and morals only appear to come at a cost today. They’re actually investments that avoid more devastating future costs when a lack of focus on quality begins to impact users.

Remember the examples of unit testable bugs I described from my personal experience.

- Byte padding: USCG/USN Navigation

  A byte padding mistake could’ve caused US Coast Guard and US Navy vessels to crash.

- Goto Fail: macOS/iOS Security

  An extra goto statement endangered secure communications on billions of devices.

- Heartbleed: Secure Sockets Layer

  Unchecked user input compromised secure sockets on the internet for years.

I caught the first bug, fortunately; but in the last two cases, a lack of internal quality and automated testing put many people at risk.

The point is that…

Quality Culture is ultimately Safety Culture

…a culture that values and invests in software quality is a Safety Culture. Society’s dependence on software to automate critical functions is only increasing. It’s our duty to uphold that public trust by cultivating a quality culture.

It’s ultimately what’s best for business and our own personal success as well.

Users don’t care about your profits.

They care if they can trust your product.

Users are the source of your profits, but they don’t care about your profits or your stock price. They care whether or not they can trust your product.

Why Software Quality Is Often Unappreciated and Sacrificed

We need to understand, if software quality is so important, why it’s so often unappreciated and sacrificed.

Automated (especially unit) testing

Why hasn’t it caught on everywhere yet?

- Apple, in January 1992, identified the need to make time for training, documentation, code review—and unit testing!

At Apple, I found a document from January 1992 specifically identifying the need to make time for training, documentation, code review—and unit testing! That’s not just before Test-Driven Development and the Agile Manifesto, that’s before the World Wide Web!

There are a few reasons why unit testing in particular hasn’t caught on everywhere yet:69

- People think it’s obvious and easy—therefore lower value

  Many developers think it’s obvious and easy, and therefore can’t provide much value.70

- Many still haven’t seen it done well—or may have seen it done poorly

  Many others still haven’t seen it done well, or may have seen it done poorly, leading to the belief that it’s actually harmful.

- There’s always a learning curve involved

  For those actually open to the idea, there’s still a learning curve, which they may not have the time to climb.

- Bad perf review if management doesn’t care about testing/internal quality

  They may also fear spending time on testing and internal quality will result in a bad performance review if their management doesn’t care about it.71

Have you heard these ones before?

Common excuses for sacrificing unit testing and internal quality

Of course, people give their own reasons for not investing in testing and quality, including the following:

- Tests don’t ship/internal quality isn’t visible to users (i.e., cost, not investment)

  “We don’t ship tests, and users don’t care about internal quality.” Meaning, testing seems like a cost, not an investment.

- “Testing like a user would” is the most important kind of testing

  As mentioned before, “Testing like a user would” is considered most important, so investing in smaller tests and internal quality seems unnecessary.

- Straw man: 100% code coverage is bullshit

  The straw man that “writing tests to get 100% code coverage is bullshit.” This speaks to a fundamental ignorance about how to write good tests or to use coverage the right way.

- Straw man: Testing is a religion (implying: I’m better than those people)

  For some reason, technical people, especially programmers, like to pound their chests as being against so-called testing “religion” and those who practice it. It’s a flimsy excuse for trying to score social points by virtue signaling in view of one’s perceived peers. Framing a potentially reasonable discussion of different testing ideas in such a way only serves to shut it down for a superficial, unprofessional ego boost.72

- “My code is too hard to test.” (The Snowflake Fallacy)

  The common, evidence-free “My code is too hard to test” assertion, which I call the Snowflake Fallacy.

- “I don’t have time to test.”

  Finally, “I don’t have time to test.” This could be a brush-off, or a genuine indication that they don’t know how and can’t spare the time to learn—and management doesn’t care.

Business as Usual