This twentieth post in the Making Software Quality Visible series explores common cultural traps that can lead organizations to devastating consequences. At the very least, these traps produce an enormous amount of unnecessary complexity, risk, waste, and suffering, while making the underlying problems worse.

I’ll update the full Making Software Quality Visible presentation as this series progresses. Feel free to send me feedback, thoughts, or questions via email or by posting them on the LinkedIn announcement corresponding to this post.

Editorial note

I’ve moved the last slides of the “Why Software Quality Is Often Unappreciated and Sacrificed” section into the “Building a Software Quality Culture” section. These were the slides about not shaming people for doing Business as Usual, and about helping them challenge and change it by asking good questions. They fit more naturally there, where they also set up the “Sell—don’t tell!” rationale.

Introduction

The previous post, Why Software Quality Is Often Unappreciated and Sacrificed, explained why people get used to unnecessary Complexity, Risk, Waste, and Suffering. They believe it’s just part of Business as Usual, which then allows the Normalization of Deviance to take hold.

Normalization of Deviance

Coined by Diane Vaughan in The Challenger Launch Decision

The explosion of the Space Shuttle Challenger shortly after takeoff on January 28, 1986, exposed the potentially deadly consequences of common organizational failures.

Image from https://commons.wikimedia.org/wiki/File:Challenger_explosion.jpg.

In the public domain from NASA.

Diane Vaughan introduced this term in her book The Challenger Launch Decision, about the Space Shuttle Challenger explosion in January 1986.[1] My paraphrased version of the definition is:

A gradual lowering of standards that becomes accepted, and even defended, as the cultural norm.

Space Shuttle Challenger Accident Report

history.nasa.gov/rogersrep/genindex.htm, Chapter VI, pp. 129-131

The key evidence of this phenomenon appears in Chapter VI of the Rogers Commission report.[2]


The O-rings didn’t just fail on the Challenger mission on 1986-01-28…

Many of us may know that the O-rings lost elasticity in the cold weather, allowing gases to escape, which led to the explosion.

…anomalies occurred in 17 of the 24 (70%) prior Space Shuttle missions…

However, you may not realize that NASA detected anomalies in O-ring performance in 17 of the previous 24 shuttle missions, a failure rate of roughly 70%.

…and in 14 of the previous 17 (82%) since 1984-02-03

Even scarier, anomalies were detected in 14 of the 17 missions since February 3, 1984, an 82% failure rate.
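The percentages are simple ratios of the anomaly counts from the Rogers report. Worked out explicitly as a quick check:

$$
\frac{17}{24} \approx 0.708 \approx 70.8\%, \qquad \frac{14}{17} \approx 0.824 \approx 82.4\%
$$

The first rate is slightly closer to 71%. Either way, the rate over the more recent run of missions was higher still.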

Multiple layers of engineering, management, and safety programs failed

This wasn’t only one person’s fault: multiple layers of engineering, management, and safety programs failed.[3] However, Normalization of Deviance isn’t the end of the problem.

NASA: NoD leads to Groupthink

Terry Wilcutt and Hal Bell of NASA delivered their presentation The Cost of Silence: Normalization of Deviance and Groupthink in November 2014.[4] On the Normalization of Deviance, they noted that:

“There’s a natural human tendency to rationalize shortcuts under pressure, especially when nothing bad happens. The lack of bad outcomes can reinforce the ‘rightness’ of trusting past success instead of objectively assessing risk.”

—Terry Wilcutt and Hal Bell, The Cost of Silence: Normalization of Deviance and Groupthink

They go on to cite the definition of Groupthink from Irving Janis:

“[Groupthink is] a quick and easy way to refer to a mode of thinking that persons engage in when they are deeply involved in a cohesive in-group, when concurrence-seeking becomes so dominant that it tends to override critical thinking or realistic appraisal of alternative courses of action.”

—Irving Janis, Groupthink: Psychological Studies of Policy Decisions and Fiascoes

NASA: Symptoms of Groupthink

They then describe the symptoms of Groupthink:[5]

- Illusion of invulnerability—because we’re the best!

- Belief in Inherent Morality of the Group—we can do no wrong!

- Collective Rationalization—it’s gonna be fine!

- Out-Group Stereotypes—don’t be one of those people!

- Self-Censorship—don’t rock the boat!

- Illusion of Unanimity—everyone goes along to get along!

- Direct Pressure on Dissenters—because everyone else agrees!

- Self-Appointed Mindguards—decision makers exclude subject matter experts from the conversation.

Do any of these sound familiar? Hopefully from past experiences, not current ones.

A common result of Groupthink is the well-known systems thinking phenomenon called “The Cobra Effect.”

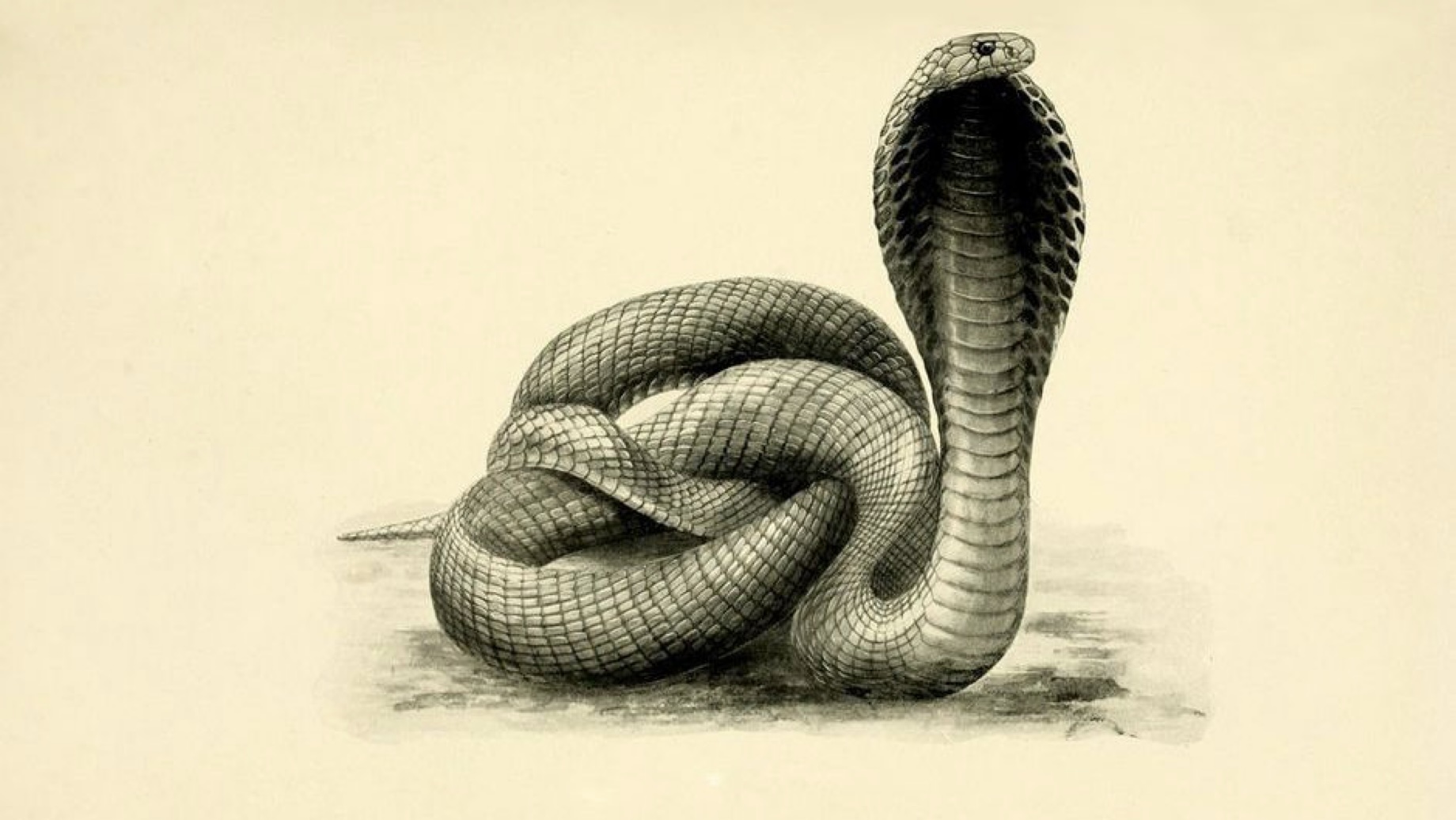

The Cobra Effect

ourworld.unu.edu/en/systems-thinking-and-the-cobra-effect

Photo: Biodiversity Heritage Library. Creative Commons BY 2.0 DEED (cropped).


Pay bounty for dead cobras

This comes from the true story of when the British administration in India offered people a bounty to help reduce the cobra population.

Cobras disappear, but still paying

At first this worked, but the British noticed they kept paying bounties even though they no longer saw any cobras around.

People were harvesting cobras

They realized people were raising cobras just to collect the bounty…

Ended bounty program

…so they ended the bounty program.

More cobras in streets than before!

People then threw their now-useless cobras into the streets, making the problem worse than before.

Fixes that Fail: Simplistic solution, unforeseen outcomes, worse problem

This is an example of the “Fixes That Fail” systems archetype. In this archetype, an overly simplistic solution to a complex problem produces unforeseen outcomes that eventually make the problem worse.

The Arms Race

Systems thinking should replace brute force in the long run.

In software, I call this “The Arms Race.” This may sound familiar:


Investment to create capacity for existing practices and processes…

We invest in people, tools, and infrastructure to expand the capacity of our existing practices and processes.

Exhaustion of capacity leads to more people, tools, and infrastructure

Things improve for a while, but as the company, its projects, and their complexity grow, that capacity is eventually exhausted. This leads to further investment in people, tools, and infrastructure.

Then that capacity’s exhausted…

Then, eventually, that capacity is exhausted too…and the cycle continues.

AI-generated tests may help people get started in some cases…

There’s a lot of buzz lately about using AI-generated tests to take the automated testing burden off of humans. There may be room for using AI-generated tests as a starting point.

…but beware of abdicating professional responsibility to unproven tools.

However, AI can’t read your mind to know all your expectations: all the requirements you’re trying to satisfy, or all the assumptions you’re making.[6] Even if it could, AI will never absolve you of your professional responsibility to ensure a high-quality, trustworthy product.[7]
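To make the point about unstated expectations concrete, here’s a minimal Python sketch. Everything in it is hypothetical and invented for illustration:

- the “free shipping on orders of $100.00 or more” requirement;
- the `shipping_fee_cents` function;
- both tests.

A test inferred purely from reading the implementation can only restate what the code already does, bug and all. Only a test written from the requirement, which lives in people’s heads and documents rather than in the code, catches the boundary error.

```python
# Hypothetical requirement, invented for illustration: orders of $100.00 or
# more ship free; smaller orders pay a flat $5.00 fee. Amounts are integer
# cents to avoid floating-point rounding surprises.
FREE_SHIPPING_THRESHOLD_CENTS = 10_000
FLAT_FEE_CENTS = 500


def shipping_fee_cents(order_total_cents: int) -> int:
    """Hypothetical implementation containing a boundary bug."""
    # Bug: uses > where the requirement says "or more", which calls for >=.
    if order_total_cents > FREE_SHIPPING_THRESHOLD_CENTS:
        return 0
    return FLAT_FEE_CENTS


# Each "test" returns whether its check passes, rather than asserting,
# so both outcomes can be shown side by side.
def test_mirrors_implementation() -> bool:
    """Written by reading the code: restates its behavior, bug included."""
    return shipping_fee_cents(10_000) == FLAT_FEE_CENTS


def test_encodes_requirement() -> bool:
    """Written from the requirement: expects free shipping at exactly $100.00."""
    return shipping_fee_cents(10_000) == 0


if __name__ == "__main__":
    print("mirrors implementation passes:", test_mirrors_implementation())
    print("encodes requirement passes:", test_encodes_requirement())
```

Running it reports `True` for the first test and `False` for the second. Both tests exercise the same input. What differs is the source of truth each one encodes, and only someone who knows the requirement can supply it.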

In the end, we can’t win the arms race against growth and complexity.

We need to realize we can’t win the Arms Race against growth and complexity.

Rogers Report Volume 2: Appendix F

history.nasa.gov/rogersrep/v2appf.htm

Richard Feynman’s final statement on the Challenger disaster is a powerful reminder of our human limitations:[8]

“For a successful technology, reality must take precedence over public relations, for nature cannot be fooled.”

—Richard Feynman, Personal Observations on Reliability of Shuttle

As professionals, we must resist the Normalization of Deviance, Groupthink, and the Arms Race. We can’t allow them to become, or to remain, Business as Usual.

Coming up next

The next post will begin the following major section, which summarizes guiding principles for changing mindsets and effecting lasting cultural change.

- Building a Software Quality Culture

Footnotes

1. I first learned about this concept from an Apple internal essay on the topic.

2. The full title of chapter six is “Chapter VI: An Accident Rooted in History.” The data comes from Figure 2, “O-Ring Anomalies Compared with Joint Temperature and Leak Check Pressure” (pp. 129-131). It lists 25 Space Shuttle launches, ending with STS 51-L. It indicates O-ring anomalies (erosion or blow-by) in 17 of the 24 launches (70%) prior to STS 51-L. In the 17 missions prior to STS 51-L, starting with STS 41-B on 1984-02-03, 14 had anomalies (82%).

3. From “Chapter VII: The Silent Safety Program,” excerpts from “Trend Data” (pp. 155-156):

As previously noted, the history of problems with the Solid Rocket Booster O-ring took an abrupt turn in January, 1984, when an ominous trend began. Until that date, only one field joint O-ring anomaly had been found during the first nine flights of the Shuttle. Beginning with the tenth mission, however, and concluding with the twenty-fifth, the Challenger flight, more than half of the missions experienced field joint O-ring blow-by or erosion of some kind….

This striking change in performance should have been observed and perhaps traced to a root cause. No such trend analysis was conducted. While flight anomalies involving the O-rings received considerable attention at Morton Thiokol and at Marshall, the significance of the developing trend went unnoticed. The safety, reliability and quality assurance program, of course, exists to ensure that such trends are recognized when they occur….

Not recognizing and reporting this trend can only be described, in NASA terms, as a “quality escape,” a failure of the program to preclude an avoidable problem. If the program had functioned properly, the Challenger accident might have been avoided.

4. The NASA Office of Safety & Mission Assurance site has other interesting artifacts, including:

This latter artifact is a powerfully concise distillation of lessons from the Rogers report. A couple of excerpts:

Pre-Launch

- Launch day temperatures as low as 22°F at Kennedy Space Center.

- Thiokol engineers had concerns about launching due to the effect of low temperature on O-rings.

- NASA Program personnel pressured Thiokol to agree to the launch.

Lessons Learned

- We cannot become complacent.

- We cannot be silent when we see something we feel is unsafe.

- We must allow people to come forward with their concerns without fear of repercussion.

5. If you check out the Wilcutt and Bell presentation and follow the “Symptoms of Groupthink” Geocities link, do not click on anything on that page. It was hacked long ago.

6. In Automated Testing—Why Bother?, I define automated testing as: “The practice of writing programs to verify that our code and systems conform to expectations—i.e. that they fulfill requirements and make no incorrect assumptions.”

7. A further thought: Trusting tools like compilers to faithfully translate high-level code to machine code, and to optimize it, is one thing. Compilers are largely deterministic and relatively well understood. Trusting AI models, far more inscrutable and far less trustworthy instruments, is quite another.

Another thought: In David Marquet’s short talk on “Greatness,” he explains what he calls “the two pillars of giving control”:

- Technical Competence: Is it safe?

- Organizational Clarity: Is it the right thing to do?

Maybe one day we’ll trust AI with the first question. I’m not so sure we’ll ever be able to trust it with the second.

8. Feynman’s entire appendix is worth a read, but here’s another striking passage foreshadowing Wilcutt and Bell’s “lack of bad outcomes” assertion:

There are several references to flights that had gone before. The acceptance and success of these flights is taken as evidence of safety. But erosion and blow-by are not what the design expected. They are warnings that something is wrong. The equipment is not operating as expected, and therefore there is a danger that it can operate with even wider deviations in this unexpected and not thoroughly understood way. The fact that this danger did not lead to a catastrophe before is no guarantee that it will not the next time, unless it is completely understood. When playing Russian roulette the fact that the first shot got off safely is little comfort for the next.