The Test Mercenaries were a team of developers within Google dedicated to helping development teams improve their testing practices and code quality from late 2006 until early 2009. Two or more Mercs would be embedded within a development team full-time for months, using the Testing Grouplet’s Test Certified program as the basis for concrete action and improvement. The team was formed after Bharat Mediratta and Mark Striebeck successfully proposed to Alan Eustace that such a dedicated team be hired to improve development practices and code quality throughout Google via hands-on intervention, and was officially placed within the relatively new Engineering Productivity focus area, alongside Testing Technology, Build Tools, and Test Engineering. Mark Striebeck assumed management of both the Test Mercenaries and Testing Technology, and passed management of the Test Mercenaries on to Brad Green in late 2008.

I was a co-leader of the Testing Grouplet at the time I joined the Mercs in mid-2007, shortly after organizing my second company-wide Testing Fixit, arriving from the Build Tools team. Mark Striebeck sent me to start the New York branch of the team in November 2007. I left the Mercenaries to join the websearch team in January 2009, just a month or so before the entire team was disbanded.

This post goes into much greater detail on some context that I’ve only hinted at or briefly summarized in earlier posts, hence the length. Hopefully the section breaks make it palatable as a multi-session read—just in time for beach getaways, soaking in the sultry summer sun!

Even so, as always, my side of the story isn’t the complete story. There were many Test Mercenaries, and the topics I choose to cover are limited to those with which I had direct experience. Perhaps other Mercs will have much more to say about important aspects I’ve neglected to mention or cover in sufficient depth.

Googlers and ex-Mercs: Fact-check me, and I’ll change what I should.

- Genesis

- Proving Negatives

- In Metrics We Trust

- Conviction

- Modus Operandi

- Cross-pollination

- Expansion

- Man on the Moon

- Revolution

- NYC

- Testing Grouplet NY

- NYC Testing Summit

- Contraction

- Life After Service

- Software Engineers in Test

- Judgment

- Footnotes

Genesis

The origins of the Test Mercenaries are rooted in the Testing Grouplet’s Test Certified program, a concrete roadmap for development teams to improve their testing practices and, consequently, code quality. After TC had signed up a number of early-adopter teams and looked to expand its influence, it became apparent that many teams had at least some members who were open to TC and automated developer testing in general, but who would need some help (both technical and social) in effecting a meaningful, lasting change. In concrete terms, they needed hands-on help to climb the “TC Ladder”.1

The Testing Grouplet leaders at the time—Bharat Mediratta and Nick Lesiecki—plus Mark Striebeck began working on a proposal to create a team of dedicated, full-time Software Engineers (SWEs) that would act as kind of an internal consulting company, with the concrete goal of helping development teams climb the TC Ladder. The working name for this team was “Test Mercenaries”, reflecting the focused-yet-temporary nature of the individual missions the team would embark upon, where members would be on loan to development teams for a limited time. Not a lot of thought was given to the name, but everybody seemed to dig it.

Bharat and Mark had a meeting with Alan Eustace, the Senior Vice President of Engineering, to pitch their idea; but when they proposed starting with only five developers, Alan responded that he had several hundred project teams (at that time), and that they needed to come back with a bigger proposal. Hard to imagine a more encouraging rejection than that! The original proposal was more of a let’s-try-and-see-what-we-can-do suggestion. Alan effectively challenged the Testing Grouplet and its Test Certified program to step up to the software development equivalent of cleaning the Augean stables.2 It was as inspiring a challenge as it was grungy and terrifying.

Shortly afterwards, Bharat, Nick, and Mark held a brainstorming session with Neal Norwitz, Christian Sepulveda of Pivotal Labs, and me to discuss what kind of big proposal to make, to fit with a big strategy to change all of Alan’s development project teams. Wish I could recall that meeting well enough to tell you the details of what we talked about, but given my memory of how events actually unfolded, the details of that session are probably not relevant.

Eventually, however, Bharat and Mark met with Alan again with a new proposal, this time starting with (I think) ten developers, with plans to aggressively expand. Alan gave the project the green light; Mark, who had been a manager in AdWords, assumed management of the new team, along with Testing Technology, the in-house testing infrastructure team. Neal Norwitz became the first official Test Mercenary in late 2006. Amongst existing full-time Googlers, Jeffrey Yasskin was next, if memory serves, and I joined soon after in mid-2007.

Given the small number of full-time Googlers that were signing up for Mercenary duty, Mark also started hiring contractors from well-known consulting companies. Some of the earliest were Peter Epstein of Pivotal; Sam Newman and Paul Hammant from Thoughtworks; and Keith Ray, Tracy Bialik and Russ Rufer from Industrial Logic.3

Proving Negatives

In an ideal world, a pair of Test Mercenaries would walk into a client team’s office area one sunny morning, sit at desks right next to the rest of the team, and begin offering helpful advice and submitting helpful changes to show everybody how automated developer testing is done. The team would be receptive to and thankful for the help, see a marked improvement in testing practices and code quality, and the Mercs would be on their merry way right on schedule three months later—riding into the sunset of one successful engagement, straight into the sunrise of another. History would repeat itself with precious little variation.

Not so at Google, which is an organization made of real people, not two-dimensional, Stepford-esque caricatures, not happy shadows cast by the sun of a radiant utopian vision. People, by their nature, are largely suspicious of ideas that run counter to their experience and intuition, and of those who espouse them. In contrast to open-minded innovator or early-adopter types, when knee-jerk rebuttals draped in the finery of rational, authoritative knowledge fail to discourage the disruptive element, the closed-minded will exert resistance—sometimes active, sometimes passive—against these different ideas until the momentum of majority action socially isolates and overwhelms them—and/or until they begrudgingly try such an idea and it leads to a positive experience.4

In a slightly-less-than-ideal-but-significantly-better-than-real world, the impact of automated developer testing—and its related tools and practices—could be definitively measured and presented in such a way as to provide overwhelming evidence in favor of its adoption, nipping any “rational” resistance in the bud. However, it’s a bit burdensome to ask developers to track:

- how many bugs they caught in their own code as they were writing it thanks to a unit or integration test they wrote along with the code (whether they actually executed the test before finding the bug or not);

- how many bugs they avoided after redesigning function signatures and class interfaces when unit or integration testing revealed how cumbersome they were to use in a controlled, correct way;

- how many bugs they avoided due to the clarity afforded by splitting one class into two or more classes, to test different behaviors in isolation that were originally welded together;

- how many bugs and build time/complexity issues they avoided by better-specified code dependencies due to such separation;

- how many bugs they avoided due to rethinking library- or system-level interface contracts and architecture decisions thanks to questions raised by automated testing;

- how many bugs they caught after writing a passing test, integrating the latest changes from their teammates, having the test break, conferring with teammates, and fixing the test or the code, leading to a broader, shared understanding of the given feature under test;

- how many bugs they caught when someone from another team submitted a change that legitimately broke a test, requiring either a rollback of the change or a fix to the code;

- how many bugs they avoided when performing a refactoring,5 major or minor, thanks to a thorough collection of automated tests;

- how many bugs they avoided by performing a refactoring to improve the readability, extensibility and maintenance of the code that they wouldn’t’ve dared without a thorough collection of automated tests;

- how many bugs they or their peers didn’t write when changing or extending the code, thanks to clear code and good tests;

- how many bugs they didn’t leave behind for the Test Engineers to find and for someone to fix (thanks to automated tests);

- how many bugs they didn’t leave behind for other developers to find or fix (thanks to automated tests);

- how many bugs their peers didn’t leave behind for them to find or fix (thanks to automated tests);

- how many bugs they didn’t leave behind for themselves to eventually find or fix (thanks to automated tests);

- how many bugs they didn’t leave behind for customers to find (thanks to automated tests); and

- how much time, trust, reputation and revenue they saved by avoiding all of these bugs and issues before even sending their code for review, submitting it to the source control repository, or pushing it to production (thanks to automated tests).

To be fair, testing cannot and will not find 100% of bugs; it can only prove their existence, not their absence, after all. But good testing practice goes a long way towards finding and killing a lot of bugs before they can grow more expensive, and possibly multiply. The bugs that manage to pass through a healthy testing regimen are usually only the really interesting ones. Few things are less worthy of our intellectual prowess than debugging a production crash to find a head-slappingly stupid bug that a straightforward unit or integration test could’ve caught. It’s even less sexy to drown in a sea of such bugs, or to live in perpetual, abject fear of them.

In Metrics We Trust6

Google is a very measurement-driven culture, and in such a culture, concrete measurements provide the incentives towards which people will align and optimize their efforts. The aforementioned productivity-saving/catastrophe-avoidance phenomena being very hard (if even possible) to measure, developers largely neither valued nor optimized for any of these concerns. They valued and optimized for what could be measured, such as:

- writing a lot of code;

- doing a lot of code reviews;

- launching products/features;

- measuring the impact of launched products/features in terms of: page views; active users; queries-per-second; server response latency; user interface latency; machine resources saved or utilized (CPU, RAM, disk, network bandwidth); data processing throughput; quality/impressions/click-throughs (i.e. ad revenue); and

- recovering from catastrophes, user-visible or -invisible.

These metrics are critical for possibly the most valued set of metrics:

- favorable peer reviews;

- favorable manager reviews;

- review scores calibrated between managers; and

- promotions.

These far more measurable phenomena are very important (with the possible exception of worrying too much about reviews and promotions). The challenge was that people back-in-the-day often assumed that the productivity-saving/catastrophe-avoidance phenomena were sufficiently addressed by hiring only “smart” developers, by the deeply-ingrained tradition of code reviews,7 by letting the Test Engineers find all the bugs, and/or by putting out production fires if (If!) they ever happen. These are all indeed important practices, even with a testing culture now firmly in place; and in fact, history would seem to support the hypothesis that until 2005 or 2006, the strategy of relying largely on these practices alone worked very well for the company. Developer testing was considered of little or no value to, if not in direct opposition to, writing/reviewing code, launching, and getting promoted. In other words, testing was a waste of valuable development time. Hence the popular lament: I don’t have time to test.

Conviction

However, those of us in the Testing Grouplet, the Test Certified program, and Test Mercenaries were convinced that the center could not hold without widespread adoption of automated developer testing. Many of us either had an immersive experience with teams that already had a healthy testing culture, or had been driven to the brink of madness on death march projects, given a harsh dose of the real value of all of those productivity-killing, catastrophic bugs that can only be measured after they appear. I fell into the latter camp, having experienced team-wide despair on a project at Northrop Grumman Mission Systems, despair that I later helped to abolish after experimenting on my own with unit testing, purely on a whim, and having an enormous amount of success with it. For those of us who had experienced the bitter fear and loathing of such projects, and the sweet relief brought about by adding testing to the mix, testing wasn’t the cultish fantasy some accused us of promoting; it was a genuine experience with proven results.

Google, as I mentioned just above, did very well for itself with minimal developer testing for much of its history up until the inception of the Testing Grouplet, Test Certified, and the Test Mercenaries. But we knew that no matter how smart and wonderful Google developers were, or would be in the future, the scale of complexity (of the code; of the infrastructure pieces running in the datacenters; of the products that would continue to be launched, updated, extended and integrated into other products) would reach a point too great for any organization of any size, even with the best developers in the world, to manage without the discipline and security provided by the practice of automated developer testing. Good hiring and code reviews went an extremely long way, and are still critical; but their limits, absent automated testing and better build and test tools, were being stretched. I tried to illustrate this scenario in an earlier post, Coding and Testing at Google, 2006 vs. 2011.

Modus Operandi

Given the cultural bias against testing on the basis of measurability, especially given the strong incentives towards seeking promotion, we realized our challenges were not purely technical. We needed to spread knowledge, we needed better tools, and we needed to figure out how to navigate extremely complex and diverse team dynamics.

Assessments: Before each engagement, the Mercs who were to engage with a prospective client team would perform an “assessment”, basically a series of meetings with the manager, tech lead(s), and individual team members to get a feel for the team’s existing process and pain points, as well as team-wide and personal goals. And, of course, we were gauging the team’s attitude and dynamics, as best we could, so we could avoid entering into situations where success seemed unlikely. (Unfortunately, this process proved somewhat fallible.) The last step in the assessment was to present to the entire team the status quo as best we understood it, and to reach agreement on specific goals, specific problems that the Mercs and the team wanted to solve during the course of the engagement. Of course, an up-front part of the deal was to get the team on the Test Certified ladder; getting them to TC Level One should be pretty quick, as it was all about putting measurements in place, whereas reaching Levels Two and Three would be the real goal, the real challenge.

Proximate seating: After a few productive and less-than-productive early experiences, we began to demand up-front that the Mercs be given desks in the immediate physical proximity of the team. While a well-meaning manager or tech lead might petition for Mercenaries to help out the team, if that manager only arranged for the Mercs to sit in a separate location out of sight and earshot of the team, the Mercs’ effectiveness was severely compromised. A big part of the reason Google spoils its developers with cafés and microkitchens is that they encourage an information-sharing, tribe-building dynamic based on physical proximity (however unmeasurable the direct impact). No matter how receptive the team was to the Mercs, if the Mercs were kept separate, they just couldn’t integrate themselves into the project and have any kind of significant impact. When the team was largely apathetic or hostile (exactly the kind of situation the assessments were designed to avoid, but which happened with some frequency anyway), their impact was reduced to pretty much nil, outside of possibly getting a Chris/Jay build and other tools set up to achieve Test Certified Level One.

Two Mercs per engagement: After Neal Norwitz’s early experience as the lone Mercenary on a test-friendly team, the firm policy of at least two Mercenaries per engagement was established. No matter how receptive the client team, going at the job alone was a lonely and tiresome task; having a second to back one up provided vital morale and energy, aided the generation and refinement of ideas, and helped lighten the overall load. Some engagements, depending on the size of the team and complexity of the product, even required three or four Mercenaries.

High-profile projects: Given our limited resources, Mark had to choose our client projects carefully. In particular, while there were many teams and development offices that were interested in Test Mercenary support, he decided the best strategy was to seek out the highest-visibility projects that needed help, focusing exclusively on Mountain View at first, to maximize the visibility of the Mercs and their accomplishments.8 To a large extent, this strategy worked, but it also risked backfiring in those cases where, for one reason or another, the Mercs weren’t able to get their teeth into a project and make a significant difference.

Code reviews: Code reviews are the windows into the soul of a team at Google. They are an archive of the technical details and design decisions of the product as defined by the code, and an archive of the team culture and dynamics as defined by the comments. One can explore the roster of who sends reviews to whom, how people choose to communicate their ideas and criticisms, and where the hot spots and pain points of the system appear in reality. For that reason, when I took on an explicit leadership role within the Test Mercenaries in NYC, I coached the Mercs to spend the first couple of weeks of an engagement doing nothing but reading and voluntarily commenting on the team’s code reviews, even if they weren’t included in the review’s to: or cc: field.

Take the bases in order:9 The advice to focus purely on code reviews in the beginning also tied into the principle of not coming on to a team too strong up front. Just like in love, one needs to warm up slowly to the target of one’s interest, so that he/she feels you are genuinely, patiently interested in getting to know him/her rather than desperate to cling to the nearest object as a crutch or a liferaft for one’s own ego. And no team wants you to ride into town like the new sheriff, guns a’ blazin’, laying down the law. Programmers, just like any other humans, are social, emotional, tribal beings that are not purely influenced by rational argument alone, and one must build trust with the tribe before assuming one has been granted permission to influence it.10

In the meantime, the first period of lurking on code reviews is also a great time to put the tools in place to get the team to Test Certified Level One; that was an up-front part of the deal, after all, and is pretty straightforward, one-time work that doesn’t require too much of the team’s specific involvement. Once that is done and the level of familiarity and trust has been established, then the Mercs have license to suggest bigger changes, in terms of design, implementation, and testing techniques, in terms of updates to tools or processes or policies, especially the Test Certified Level Two policy of requiring that all nontrivial changes be accompanied by tests. The most successful engagements led to situations where developers from the team were working very closely with the Mercenaries to make bigger, broader, sweeping changes to the project in terms of either code, tools, process, or all of the above.

Three months: The “ideal” time for each Mercenary engagement was three months. In practice, it turned out to be the “minimal” time. As mentioned above, this process of starting small, building trust, and working towards bigger goals is not a two-week job. A lot of the time, the Mercenaries would stay for longer than three months if they were making significant progress that they didn’t want to break off abruptly, or if they felt there was still hope of improvement on an engagement that hadn’t quite worked out yet. Some engagements worked out so well that the team just wanted to keep collaborating with their Mercs for longer and longer, and if there were no more pressing projects in the pipeline, we let the engagement continue.

Retrospective: After each engagement, the Test Mercenaries would follow up with a retrospective meeting with the client team. The purpose was to collectively document what worked, what didn’t, what goals were reached, what goals were missed, and what surprises occurred, for better or worse. Some of these retrospectives were very encouraging and inspiring; some of them, quite the opposite. But all were informative; given Mark’s experience with agile retrospectives, as well as the experience that many of the contractors brought, we always walked away from an engagement with a clear sense of the impact we really had, and why.

However, as mentioned above, we had a very hard time pointing to metrics that the majority of the company could value or understand. Yes, we could point to Test Certified progress. Yes, we could say specific behaviors were learned, tools were adopted, design goals were reached. We could even say we did X number of code reviews or submitted X number of changes, if we wanted. But the real value of our impact proved elusive when we did have an impact, and we had precious little to fall back on when it was obvious our impact was minimal or nonexistent. We weren’t primarily responsible for feature or product launches. Production fires were beyond our realm of responsibility. Making features more maintainable or easy to implement and avoiding production fires in the first place were not accomplishments for which we could receive objective credit.

Cross-pollination

In addition to the two-Mercs-per-engagement policy, the Test Mercenaries also had a very active internal mailing list and a weekly meeting in which we would all commiserate about our individual engagements and discuss developments, both technical and social. We would share ideas and, just as importantly, tools between our engagements and client teams this way, both spreading what we each had learned and taking the lessons and information from others back to our individual projects. One fond memory to serve as an example: For months, every sentence uttered by Peter Epstein in these meetings contained the word Guice.11 (Probably every sentence outside the meetings, too.)

During the following weeks, we’d revisit the same tools and ideas and offer our experiences and refinements, a positive feedback loop that impacted every engagement. Having Mark Striebeck also managing the Testing Technology team was a huge boon in this regard, as he could direct Testing Tech to capitalize on these developments, working in close collaboration with the Build Tools team. Testing on the Toilet proved an ideal outlet for all of the tool development and technical insights resulting from Test Mercenary activity, and the Mercs were able to help keep a steady flow of material through the TotT pipeline. Feedback on the episodes from readers throughout Google also fed back into Mercenary discussions.

In this way, the Test Mercenaries served as a radiant crucible for the most advanced development tools and techniques then known to the company. When the team was still small, we’d go into a lot of rambling discussions on random tangents where we’d explore ideas deeply; as the team grew much larger, we had to nip that in the bud to give everyone a fair slice of time to hit the highlights. Random ramblings still happened on the mailing list, but I usually found them excessive and tedious in that medium. Great ideas still spread, but something of the camaraderie, at least for me, was lost.

Expansion

Though Test Mercenary membership was open to nearly all interested Software Engineers (SWEs), precious few existing Googlers signed up for the task. (There were interested Test Engineers and Software Engineers in Test, but neither was considered appropriate for the role at that time, as we wanted the client teams to see the Mercs as “fully-equal” SWEs.) Mark Striebeck, being a very well-connected member of the capital-A Agile community at that time, began to aggressively recruit developers from well-known software consulting companies as contractors, i.e. full-time employees that are not given full-time benefits.12 This did provide the huge advantages of bringing fresh perspectives, free of Google baggage, and broad consulting experience to our table, and enabled the team to scale up much more and much faster than it would’ve otherwise. Having a Googler Merc paired up with a newly-recruited contractor also greatly helped the new folks ramp up on Google culture, tools, and standards.

Plus, Mark was able to hire contractors with specific technical experience based on demand, experience that the existing full-time Googlers didn’t necessarily have, such as extensive experience with Java and JavaScript. Neal, Jeffrey, Kurt, Noel, and I were mainly C++ and Python programmers, but many engagements ended up being Java gigs; Miško was the first existing full-timer with extensive Java experience. As demand grew for projects with a large JavaScript component, more contractors with JavaScript experience were brought in.

The downside for me was that the group got so big at one point that, being in NYC at the time and feeling more disconnected from the Mountain View team, I lost track of how many Mercs there were. Let me run through the list of all the Mercs that I can remember (though I know there were many more):

Googlers who transferred from another team:

Mike Bland*

Kevin Cooney

Neal Norwitz

Miško Hevery

Kurt Steinkraus

Jean Tessier*

Jessica Tomechak* (team Tech Writer)

Adam Wildavsky

Noel Yap*

Jeffrey Yasskin

Googlers directly hired as Test Mercenaries:

Dave Astels*

Misha Gridnev

Christian Gruber

Industrial Logic:

Alex Aizikovsky**

Tracy Bialik**

C. Keith Ray

Russ Rufer**

Gene Volovich**

Pivotal:

Adam Abrons

Peter Epstein**

Matt Hargett

Dave Smith

Paul Zabelin

Thoughtworks:

Dennis Byrne

Bradford Cross

Paul Hammant

Ralph Jocham

Jonny LeRoy

Sam Newman

Tim Reaves

Scott Turnquest

Jonathan Wolter

* No longer at Google

** Contractor eventually hired full-time

Apologies to those whose names’ve slipped my mind. Ping me and I’ll update the list. (Thanks to Christian Gruber for helping me fill in a few folks I’d forgotten.)

Man on the Moon

Shortly after joining the team, I grew restless that the Testing Grouplet, Test Certified, and the Test Mercenaries seemed to be out-of-sync, not communicating and collaborating as well as I’d hoped. I was actively involved in all three, but no one else was, really. We all wanted to improve testing at Google, but weren’t terribly clear as to what we were doing to reach that state together, exactly, other than producing Testing on the Toilet episodes, recruiting Test Certified participant teams, and launching a handful of Test Mercenaries engagements.

This increasingly disjoint set of initiatives seemed ripe for unification towards a common goal, lest we duplicate effort or become a set of competing factions, weakening the overall testing mission. After conferring closely with my main partner-in-crime at the time, Intergroups program manager Mamie Rheingold, we realized we needed a Man-on-the-Moon mission: something bold and grand to shoot for that would align everyone’s efforts and produce an enormous impact beyond that which we could possibly predict.

Having grown up in Hampton, VA, where there is more military than any other metropolitan area on the planet, I very naturally took to the military metaphor implied in the “Mercenaries” part of the name, and formulated the first draft of a shared mission thus: The Testing Grouplet and Test Certified should combine forces to make the Test Mercenaries as successful as possible—to “support our troops”, as it were. I liked it; I shopped it around to folks involved in all the groups, and they were just kinda OK with it, except one: my Testing Grouplet co-leader, Michelle Levesque.

One day I proposed that Michelle and I take a walk around the Googleplex to work out our differences over the mission. She didn’t disagree that we needed a mission—no one disagreed on that—but, being Canadian, she thought Americans were too hero-worshipping of the military, and the metaphor of “supporting the Mercenary troops” didn’t sit well with her on that basis. Of course, I was seeing nothing wrong with the implied honor of a military metaphor, but asked what she would propose as an alternative. She said something along the lines of, “Why don’t we make Test Certified the focus of all the groups?”

Bang, that was it! Clear as day, right under our noses! I pounced on this idea immediately, and after we finished our walk, the two of us pulled the third co-lead, Neal Norwitz, away from his desk to run the idea by him. He thought it was a great idea, too, and it was settled, at least as far as the Testing Grouplet was concerned. But it was a very easy sell to the Test Certified (obviously) and Test Mercenaries contingents as well. So our mission became thus:

Ensure every team at Google reaches Test Certified Level Three by the end of 2009.

Very shortly after getting unanimous buy-in on the new Man-on-the-Moon mission from the Testing Grouplet, Test Certified, and Test Mercenaries, Mark Striebeck, Antoine Picard, and I were invited to a leadership offsite for the nascent Engineering Productivity focus area. The big theme of the offsite was finding a way to have Test Engineers (TEs) and Software Engineers in Test (SETs) engage more effectively with their product teams on issues of overall product quality. The sense at the time was that there was a giant disconnect, that TEs and SETs were basically just cleaning up after the developers without having a say in the development process, without a chance to introduce improvements that would ensure better product quality, to say nothing of making better use of the TEs’ and SETs’ time and talents.

Feeling very excited and confident, I very vocally promoted what I called my “secret agenda”: Getting TEs and SETs to become active advocates of the Testing Grouplet’s Test Certified program for their product teams. Test Certified is primarily concerned with code quality, not overall product quality, but my argument was that issues of product quality were suffering because the TEs’ and SETs’ time was often consumed with diagnosing code quality-related issues—i.e. stupid bugs that a unit test could’ve easily, quickly, and cheaply caught—that were distracting them from doing the more important, more interesting work of ensuring overall product quality. Fixing code quality wouldn’t necessarily fix product quality, but done well, it could only help.

What’s more, Test Certified was already very active and somewhat proven, with dozens of teams already participating, and provided an easy-to-follow package of steps based on de facto-standard internal tools, infrastructure, and policies. This wasn’t something for Eng Prod to have to start from the ground up; they could quickly pick it up and build on the momentum that was already there, and both they and the development teams could see immediate, tangible progress. It gave both the TEs/SETs and their development teams something in common, something absolutely concrete, to discuss and work towards with an eye to improving code quality as it ultimately impacts overall product quality.

After that day, Eng Prod’s endorsement and adoption of the Testing Grouplet’s Test Certified program was rapid and absolute, to the point that Test Certified became more associated with Eng Prod than it did with the Testing Grouplet.13 As a Testing Grouplet leader, I was happy to negotiate a successful symbiosis between the Testing Grouplet and the Eng Prod organization as a whole, but especially with the individual TEs and SETs who also felt an investment in the concept of automated testing, but didn’t fit into the existing developer-exclusive Testing Grouplet and Test Mercenaries cliques.

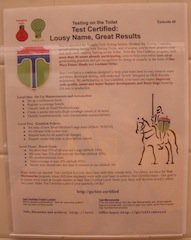

This Testing on the Toilet episode featuring Test Certified, which I happened to write, encapsulates the big picture of everything that was going on with regards to the Testing Grouplet, Test Certified, the Test Mercenaries, Engineering Productivity/Test Engineering, and Testing on the Toilet itself (click on it for the larger, readable image):

This episode also features the Test Certified and Test Mercenaries logos (the TC Shield and the Test Mercs knight, respectively) designed by Mamie Rheingold and the Testing Grouplet lightbulb logo designed by Johannes Henkel (with a small contribution from yours truly). Published in mid-2007, it explicitly mentions the Test Mercenaries, the ability to avoid having bugs slip through to QA and production, and the explicit mission of ensuring all Google teams reach Test Certified Level Three within two years. Apparently we hadn’t yet given up on Ambient Orbs by then. “OKRs” stands for “Objectives and Key Results”, which are the quarterly goals that each individual, team, and focus area, as well as the company as a whole, sets at the beginning of each quarter and reviews at the end to gauge progress and impact and to develop goals for future quarters. Test Certified was designed to be very OKR-friendly.

Revolution

As I mentioned in the Chris/Jay Build post, shortly after the Eng Prod offsite, at the end of July, I experimented with SrcFS and Forge when setting up the Chris/Jay build for my first Mercenary engagement. (Since this was the first engagement for both Tracy Bialik and me, we didn’t yet think to set up the CJ build before diving into code reviews and some refactoring.) The impact of the new tools was phenomenal, and when I realized the ease with which incompatible tests were fixed, I began planning what would become the Revolution Fixit of January 2008, which rolled out Blaze (aka Bazel), the Make replacement, as well.

Given the length of this post, and the fact that I’ve a lot more context and detail to explain about the Revolution, I’ll just give the punch line here: I saw in the new tools a huge part of the solution to the number one obstacle in our quest to get every Google development team to Test Certified Level Three—the “I don’t have time to test” excuse. I enlisted the rest of the client team to use the new tools, and sold the tools very hard to all my fellow Mercenaries. Mark loved the fixit idea, and he and I worked very closely to make it happen. We did twist the Build Tools team’s arm a bit, as well as that of Ambrose Feinstein, Forge’s author, who was only working on it as a 20% project at the time. I’m not particularly proud to admit that, but in the end, everybody was moving full-speed-ahead in the same direction, and the effect was awesome: Awareness of, interest in, and adoption of the new tools took a quantum leap during the Fixit; the old build tools were mostly dead within three to six months, and totally dead within about a year; and Mark Striebeck formulated his vision for the Test Automation Platform (TAP) based on the power and potential of the new toolchain. Mark assigned Mercenary Sam Newman to lead the initial development effort of Sponge, TAP’s company-wide build-and-test result data collection component, which was integrated into Blaze well in advance of TAP’s rollout.14 Even before TAP rolled out, the “I don’t have time to test” obstacle/excuse was dying, and it was certainly dead afterwards. “Revolution” was the correct name; I’m sure John Lennon would’ve approved, and would’ve loved to see the plan.

The Revolution was one of the quintessential outcomes demonstrating what I mean by the Test Mercenaries serving as a radiant crucible for ideas and tools that spread throughout Google. Again, I promise, I will speak squarely about the Revolution in the near future; with the Test Mercenaries background in place, I’ve got a couple of Testing Fixits to fill in, at which point we’ll be ready for the Revolution.

NYC

By late 2007, Mark Striebeck decided it was time to consider branching the team out to Google’s second-largest development office, New York City. Plus, we landed a huge new client team, with major components in both Mountain View and New York, which actually necessitated a New York presence. Because I had assumed a leadership role within the Test Mercenaries and was known for my personal love of NYC, Mark chose me to lead the new team. Alex Aizikovski, Jeffrey Yasskin, and Tim Reaves were also assigned to the engagement, and Mark sent Alex to accompany me in New York.

I arrived in New York on November 20, 2007. About a week later, while watching Boss Tweed, a rockabilly-blues trio, perform at the Mercury Lounge, I realized that I absolutely wanted to move back to New York, where I had briefly moved during the period of time that I was interviewing for Google. Eventually, ten-and-a-half months later—staying in corporate housing the whole time—official permission was granted and I moved to the West Village.15

Things got off to a very rough start, though: That first, big, highly-visible engagement was an unqualified disaster, for which I assume responsibility. Our daily stand-up meetings and agreements didn’t help. That team, with its strong developers with equally strong personalities, its already-improving discipline and policies, and extremely complex product that the lead developers knew exactly how they wanted to change, was already in a state far beyond our ability to make an impact, or perhaps just beyond my ability to lead a successful engagement. However, despite that negative experience, and the grueling retrospective, we managed to salvage good relationships with those developers, and to move on to much more successful engagements. Along the way, Tim Reaves joined us from Mountain View, and Adam Wildavsky, a full-time NYC Googler, also decided to join our team.

Testing Grouplet NY

Despite New York’s status as the second-largest Google development office, the lack of an NYC/East Coast Engineering Productivity director at the time made it difficult for the Test Mercenaries, Test Engineers (TEs) and Software Engineers in Test (SETs) to pull together as a community. I had my weekly meetings with Mark Striebeck as a lifeline, but most of the TEs and SETs felt very disconnected from the Mountain View-centric Test Engineering/Engineering Productivity organization. Even the TEs and SETs in Zürich/EMEA had a regional Test Engineering director. I felt some degree of responsibility to do something to help create a sense of community, a sense of connection between those of us in the testing community beyond the few personal friendships some developers had formed.

In early 2008, I conscripted my partner-in-crime David Plass to start a new Testing Grouplet chapter, the Testing Grouplet NY.16 I was never an official leader, but I came to the early meetings to provide ideas and moral support, until David, Prakash Barathan, Catherine Ye, and Tony Aiuto, among others, found their legs and ran with it. That was one of the most fun times to be a Groupleteer, as we all brainstormed New York-centric ideas to promote Test Certified, such as the Statue of Liberty build monitoring orbs and the Testing Grouplet NY logo: The Statue of Liberty holding the green Testing Grouplet logo lightbulb and an Apple PowerBook.

To kick-start the grouplet’s New York-focused efforts to achieve the Test Certified-everywhere mission, I suggested that we try a very New York-specific format for introducing prospective development teams to Test Certified Mentors: Speed dating. But I realized, I didn’t really know how speed dating actually worked. So, in the interest of research, I went speed dating. It was so much fun, and I learned a lot about how to run such an event! After that, we set up the event, with Test Certified mentors at each table and representatives from interested teams rotating around—or was it the other way?—and everybody had a lot of fun and found a match. Mark Striebeck claimed that the best expense report he ever approved was for my speed dating receipt.

NYC Testing Summit

After the DoubleClick integration in April 2008, as a means of galvanizing the members of this now-even-larger New York testing community, I had the idea to organize an NYC Testing Summit, a multi-day, informal, internal conference of TEs, SETs, Test Mercenaries, and test-friendly Software Engineers to take place in the New York office, organized independently of the Engineering Productivity organization in Mountain View (though with help from its budget). I applied Fixit organization tactics to the process, defining explicit roles and handing them out to specific individuals to own, with clear directives and responsibilities and the freedom to execute on them however they chose.

We extended invitations to TEs, SETs, and Mercs company-wide, and we got a good number of folks from Mountain View and other offices to travel to NYC for the event. We had three days of planned presentations and workshops, with all the New York folks mingling with developers and managers from Mountain View, putting faces and handshakes to names. The DoubleClick folks—in particular, Alex Chu, Pavithra Dankanikote, and Dianna Chou—had a blast organizing the event and being a part of the bigger Google Test Engineering family coming together in New York. And we had several kegs of beer from the nearby Chelsea Brewing Company to keep the proceedings lubricated throughout the afternoon.

The summit was held in mid-August 2008. We had no idea how great our timing was.

Contraction

September 2008: The US housing market collapse.

Eric Schmidt and the Google leadership team deserve a ton of credit for sailing the ship through those rough, stormy, unfamiliar waters. To my knowledge, not a single full-time employee was laid off, and most of the perks Google is famous for—cafés, microkitchens, other events and treats—were retained. But the future was extremely uncertain, and Google made one of the most sensible decisions it could have: It decided to let go of nearly all of its contractor employees—and that meant that the Test Mercenaries’ days were effectively numbered.

A number of Test Mercenaries contractors were successfully hired as full-time employees, either immediately or eventually, but many more were just let go. The team as a whole did not shut down right away, but Mark Striebeck did pass managerial duties on to Brad Green shortly thereafter, to focus on Testing Technology and the Test Automation Platform (TAP). In New York, the team became just Adam Wildavsky and me, working on one final engagement together. During my final trip to Mountain View the first week of January 2009, after a few meetings with Brad and other folks, I realized I no longer had anything left to give to the mission, and went back to New York to shut down the NYC Test Mercenaries team. A month or two later, Brad announced that the team was being dismantled completely.

Life After Service

One of the perks of being a Test Mercenary was the opportunity to get hands-on experience across many different Google properties, and to hear about the experiences of others. One could get a good idea of what kind of project one would like to work on after the Mercenaries, and already have familiar contacts in place. The last engagement Adam and I worked on was for a websearch team, and I grew fascinated with the core product and the culture that had built up around it. What’s more, as a whole, the websearch focus area seemed to take testing really, really seriously; in fact, that last engagement was another where we weren’t sure how much we could help, and I repeatedly told the team’s manager, John Sarapata, that I wasn’t sure we should go through with it. But he was so interested and insistent, and most of the team so receptive, that we went ahead with the engagement and had a great time, even if we couldn’t say that we did all that much. We did give them a helpful push up the Test Certified ladder, though; and the bridge-playing members of the team were in awe of being seated in proximity of the Adam Wildavsky, international bridge championship regular.

When it came time to choose a new team, I gravitated towards websearch. I seriously considered John’s team, and in retrospect, perhaps I should have joined his team instead. But I still got to work closely with John and his team as part of the team I ultimately joined, and I learned a lot about how the Google websearch sausage was actually made. Plus, I always said that, post-Mercs, I wanted to retire into a team that was doing interesting, challenging work, and already had a strong testing culture such that I wouldn’t have to fight anyone over writing automated tests anymore—and that’s what I got.

What I didn’t realize was that the vast majority of teams at Google could be described thus by early 2009, or that I would be called into service once more by Mark Striebeck to lead the Test Automation Platform Fixit in March 2010 to put the final piece of the years-long testing mission puzzle in place.

Software Engineers in Test

Allen Hutchison, an American from the London office who was the Test Engineering director for Europe at the time (and has since moved on to another department), and I had a chat not long after I got involved with the Test Mercenaries. He mentioned the idea of having Test Mercenaries work closely with Software Engineers in Test (SETs), the full-time developers hired to focus exclusively on testing and test infrastructure concerns for a product team, such that the SETs could carry on as the keepers of the testing faith, knowledge and practices for the team long after the Mercs had moved on to another engagement. This sounded like an ideal arrangement to me, but I don’t recall either of us doing that much to actively promote it. The idea eventually manifested itself based on experience, necessity, and maybe a hint of the power of suggestion—especially after the 2008 phynancial crisis resulted in the mass-shedding of contractors, many of whom were manual testers, necessitating a proactive approach on the part of both SETs and development teams.

After I successfully sold Test Engineering on the idea of using the Testing Grouplet’s Test Certified program to drive discussion and adoption of automated developer testing within their client teams, the ranks of Test Certified Mentors flooded with SETs eager to get their projects and their neighbors’ projects on the TC Ladder. In fact, one of the largest testing-related fixits, the months-long 2008 Test Certified Challenge, was organized and executed by two SETs from Cambridge, MA, Matt Vail and Tayeb Karim. Awareness of Test Certified and the number of teams on the TC Ladder exploded during this time, as individual developers, teams, and development offices jockeyed for position on the company-wide TC Challenge leaderboards.

Even before the breakup of the Test Mercenaries, the emphasis of the SET role became increasingly focused on infrastructure development and monitoring/improving testing practices for the whole product team, while being engaged in product and feature development discussions with an eye towards testability. Development skill became more important, as John Turek, the former East Coast Test Engineering director,17 fought fiercely to ensure that any candidate considered for a SET role must be just as technically capable as a normal Software Engineer candidate. No longer would SETs write all the unit tests for the team, or hack together only team-specific testing scripts, or find all the problems after-the-fact for the team to go back and fix; they were about proactively working to ensure code quality up-front as part of the overall product quality mission, and finding ways to adopt and to contribute to Google-wide testing infrastructure and practices. They picked up where the Mercs left off, and then some, since they were seen more as permanent members of a team rather than vagabond missionaries. In fact, several former contractor Mercs were eventually hired as full-time SETs, such as Russ Rufer, Tracy Bialik, Alex Aizikovski, and Gene Volovich.

Judgment

Mark Striebeck has gone on the record as stating that the Test Mercenaries, in the end, were a failure. It was too difficult to “teach” testing in a way that stuck with developers lacking intrinsic motivation; experience is always the best teacher, and the Test Mercenaries usually couldn’t scale the transmission of that experience directly to each member of a team within the scope of a single engagement. We had difficulty defining meaningful metrics to illustrate our impact and difficulty achieving success according to the ones we did define. In some engagements, we had precisely zero impact, as the team either proved too closed-minded as a whole, proved too motivated to self-medicate without us being able to find a concrete means of adding value to their existing process, or had goals that proved too large and complex for us to effectively contribute to in the time that we had. The approach didn’t scale; when the housing market collapsed, the contractor-heavy enrollment ensured that the team’s days were numbered.

I respectfully disagree with him on the grounds that this assumes a too-narrow, metrics-focused, culturally-biased assessment of “success”. Thanks to the Test Mercenaries, several dozen full-time developers spent years thinking about the hardest technical, social, and organizational obstacles to automated developer testing at Google—not just team-by-team, but in the large. My involvement as both a Test Mercenary and Testing Grouplet leader led me to seek the Test Certified-everywhere mission as the unified focus for both groups (plus the nearly-independent Test Certified organization itself), behind which I then successfully convinced Test Engineering/Engineering Productivity to also throw its support. Other Mercs were inspired to create or adopt new tools that could be applied and shared between some of the highest-profile projects in the company, eventually making the tools sharper and driving their adoption as company-standard infrastructure. Distinct personalities with unique technical and social strengths helped drive a company-wide dialogue around Test Certified, testing terminology and methodology, and tool adoption, producing numerous Testing on the Toilet episodes that shaped how Googlers from Mountain View to Sydney think and talk about developer testing, as well as the Revolution Fixit, which went a long way towards removing the “I don’t have time to test” excuse.

Some client teams may have become Test Certified eventually without us, but certainly for many of them, the Test Mercenaries helped bring about the necessary changes much faster than they would have otherwise. Even if a team didn’t advance very far up the TC Ladder, or risked sliding back down after we left, the experiences informed the company-wide discussions and tool/process developments that, in turn, eventually did produce a permanent culture change in favor of automated developer testing.

The 2008 housing market collapse, as terrible as it was, happened at an opportune time in the sense that the Test Mercenaries, as well as Test Certified, the Testing Grouplet, and Test Engineering/Engineering Productivity in general, had reached a tipping point that only became apparent in 2009, when despite the loss of the Mercenaries and a lot of manual testers, developer testing discipline and the tools used to achieve it continued to steadily improve—at the same time the phynancial crisis underscored the necessity of doing “more with less”—resulting in the resounding successes of the October 2009 Forgeability Fixit and the subsequent March 2010 Test Automation Platform (TAP) Fixit. The concept and implementation of TAP was a direct consequence of the Mercenary-inspired Revolution Fixit, and after TAP rolled out company-wide, the “I don’t have time to test” excuse was completely dead. Consequently, nearly every project team at Google was effectively executing at Test Certified Level Three, whether they were officially recognized on the TC Ladder or not, given that practically every team had at least one TAP integration build, and everyone was bound by the implicit cultural policy of keeping their own and everybody else’s builds passing—and this happened only about one quarter behind the schedule of the stated mission.

The Test Mercenaries may not have succeeded according to accepted, measurable criteria, but it seems highly unlikely to me that the sea change whereby automated developer testing became the expected cultural norm could’ve or would’ve happened without the focused intensity of the Test Mercenaries, augmenting the volunteer activism of the Testing Grouplet and Test Certified programs, filling the Testing on the Toilet pipeline with battle-proven material, pointing the Engineering Productivity organization towards the Test Certified program, helping to shape and spread Testing Technology and Build Tools innovations, and setting the precedent for the fully-engaged Software Engineer in Test role. The immeasurable catastrophes avoided and revenue saved made for a priceless experience.

Footnotes

1. The “TC Ladder” was the published roster of teams participating in the Test Certified program and their corresponding TC levels.

2. The relevant bit from the Wikipedia description: “The fifth Labour of Hercules was to clean the Augean stables. This assignment was intended to be both humiliating (rather than impressive, as had the previous labours) and impossible, since the livestock were divinely healthy (immortal) and therefore produced an enormous quantity of dung. These stables had not been cleaned in over 30 years, and over 1,000 cattle lived there.” Unlike the stables, Google had been around less than ten years. Unlike Hercules, it took us more than a day.

3. Russ and Tracy actually had their own consulting company, Pentad Software Corporation, and were subcontracted via Industrial Logic. Both are now full-time Googlers; some would suggest that they are actually a single Googler, RussAndTracy.

4. Geoffrey Moore’s Crossing the Chasm explains this phenomenon in much more scholarly detail.

5. Refactoring is making changes to the code without changing its function or behavior. This is often done to clean up the code, to make it easier to implement new features, or to make it easier to test. Refactoring a complex system or widely-used library/piece of infrastructure is much more difficult to perform quickly and confidently in the absence of good automated tests.

6. “In God we trust” is the “Don’t be evil” of the United States.

7. The “Given enough eyeballs, all bugs are shallow” philosophy, aka Linus’ Law, which is true up to a point; but adding (good) automated developer tests to the mix has only proven to make code reviews even more valuable, since the code is usually clearer, and the reviewer can largely trust that the author has written the code to pass the stated tests—and can possibly suggest further tests.

8. After my trip to Europe in 2006, when I was planning to join the Mercenaries but hadn’t yet, my partner-in-crime Ana Ulin and I had excitedly dreamed up a plan to have me stationed in Zürich, working with her (a Software Engineer in Test at the time) to build up the Mercenaries in Europe. Mark, despite being German, gently-yet-firmly shot that idea down, which I have to admit was the right decision; the team hadn’t yet formed, and making it multi-site from the get-go before it gained any practical experience was a bad idea. Plus, Zürich is a long way from Mountain View, reducing visibility, no matter how large an office or how important the projects there. With Fixits, however, I repeatedly adopted a non-Mountain View-centric strategy, a kind of flanking maneuver that involved extensive engagement from so-called “remote” offices, that worked very well.

9. For the non-Americans, this is a baseball reference; it’s also a double entendre. My Keeping It Legal days are squarely behind me. Anything I write that could possibly be interpreted in a prurient fashion, should be.

10. Hat tip to Seth Godin for the “tribe/permission” language here. I’m not a Seth Godin fanatic, and I think he gets a little full of his own ideas sometimes, but sometimes he does talk good, plain sense.

11. Guice is now maintained within Google by ex-Merc Christian Gruber.

12. Contractors were identified by the red background behind their names on their Google badges; the technical access-control difficulties they experienced as well as the implied sense of second-class status led some of them to refer to the “Red Badge of Shame” (in contrast to the Red Badge of Courage). Some of their gripes, especially the technical ones, were legitimate; sometimes I felt they played the victim card a bit. Understandable to some extent, though; class distinctions in general, regardless of setting, often breed resentment.

13. James Whittaker, who joined Google in 2009, nearly two years after Eng Prod’s involvement with Test Certified began, neglected to credit the Testing Grouplet explicitly as originating the Test Certified program in his book, How Google Tests Software, released at about the time of his return to Microsoft in early 2012. The Testing Grouplet, Testing on the Toilet and Fixits receive passing mention in the interview chapter with Mark Striebeck, Neal Norwitz, Tracy Bialik, and Russ Rufer; the Test Mercenaries are not mentioned in the book at all.

14. Michael Chastain, an extremely well-respected C++ expert within Google, once replied to a request for help on an email thread: “Sponge link or it didn’t happen.” That’s one of the ultimate high forms of validation of Sponge’s impact in my mind.

15. Just moved from the West Village to Brooklyn a few weeks ago; found a place with twice the space for half the rent.

16. David actually wanted it to be known as “Testing Grouplet: The New York Group”, or “TG:TNG”, as a hat tip to “Star Trek: The Next Generation”, but it didn’t seem to catch on as well.

17. The position wasn’t filled, nor was John hired, until after the Test Mercenaries disbanded.